AI Chip Shakeup: NVIDIA Battles U.S. Rules, Intel’s Bold Bet, and Nebius Scores Mega Microsoft Deal

Welcome, AI & Semiconductor Investors,

Could a new wave of "U.S.-first" regulations rewrite the global AI chip market and put NVIDIA's soaring trajectory at risk? This week, the GAIN AI Act threatens to prioritize domestic chip demand, squeezing NVIDIA’s international TAM and boosting American cloud giants. Meanwhile, Intel strategically locks in billions in U.S. government equity and SoftBank cash to supercharge its foundry push, just as Nebius clinches a nearly $20B AI infrastructure partnership with Microsoft, accelerating NVIDIA-powered data centers amid fierce power and capacity battles. Investors — Let’s Chip In.

What The Chip Happened?

🇺🇸 GAIN AI Act: “U.S.-first” chip rules could rewrite the AI supply chain

🧭 Intel Locks In U.S. Equity Backing, SoftBank Chips In $2B — Zinsner Maps Path to 40%+ Margins & 18A Ramp

🚀 Nebius lands Microsoft in a nearly $20B AI‑compute pact

[Ambiq Q2’25: 13.6% QoQ Growth, IPO Fuels Edge AI Push]

Read time: 7 minutes

Get 15% OFF FISCAL.AI — ALL CHARTS ARE FROM FISCAL.AI —

NVIDIA (NASDAQ: NVDA)

🇺🇸 GAIN AI Act: “U.S.-first” chip rules could rewrite the AI supply chain

What The Chip: On August 1, 2025, Sen. Jim Banks (R‑IN) filed SA 3505 — the “Guaranteeing Access and Innovation for National Artificial Intelligence (GAIN AI) Act of 2025”— as an amendment to the FY2026 NDAA. It would force a global export‑license regime for “advanced integrated circuits” and add a right‑of‑first‑refusal for U.S. buyers on shipments to “countries of concern.” The Senate aims to complete its NDAA by September 8, 2025.

Details:

📜 What the amendment actually says. SA 3505 adds a new section to the Export Control Reform Act requiring:

(i) a license for any export, re‑export, or in‑country transfer of an “advanced integrated circuit” or products containing one (global in scope), and

(ii) a U.S.-priority certification (right‑of‑first‑refusal, no domestic backlog, no better foreign terms) when the destination is a “country of concern.” It also states a Sense of Congress to deny licenses for the most powerful chips (total processing power ≥ 4,800) and restrict exports while U.S. entities are waiting for the same chips.

🔢 Where “advanced IC” starts (simple terms). The bill tracks BIS performance metrics. Chips are “advanced” if they meet thresholds like TPP ≥ 2,400 with PD ≥ 1.6, or TPP ≥ 1,600 with PD ≥ 3.2, or have DRAM BW ≥ 1,400 GB/s, interconnect ≥ 1,100 GB/s, or (DRAM + interconnect) ≥ 1,700 GB/s. TPP is a standardized compute score; performance density (PD) is compute per square millimeter of die—a proxy for how much “AI horsepower” is packed into the chip.

🧭 Important nuance. The license requirement applies worldwide, but the right‑of‑first‑refusal certification applies only to exports to countries of concern (arms‑embargoed countries or those hosting, or intending to host, facilities tied to them). For allies (UK, Japan, South Korea, EU), the real‑world friction will depend on Commerce/BIS licensing policy and exceptions.
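
To make the thresholds and the two-tier scope concrete, here is a minimal Python sketch of the rules as this newsletter summarizes them. It is an illustration only, not the bill text or a BIS classification tool. The `Chip` fields, the function names, and the sample chips (with made-up numbers) are all assumptions for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chip:
    tpp: float               # total processing power (standardized compute score)
    pd: float                # performance density: compute per mm^2 of die
    dram_bw_gbs: float       # DRAM bandwidth in GB/s
    interconnect_gbs: float  # interconnect bandwidth in GB/s


def is_advanced_ic(chip: Chip) -> bool:
    """Apply the 'advanced IC' thresholds as summarized in the text above."""
    return (
        (chip.tpp >= 2400 and chip.pd >= 1.6)
        or (chip.tpp >= 1600 and chip.pd >= 3.2)
        or chip.dram_bw_gbs >= 1400
        or chip.interconnect_gbs >= 1100
        or chip.dram_bw_gbs + chip.interconnect_gbs >= 1700
    )


def export_requirements(chip: Chip, country_of_concern: bool) -> list[str]:
    """License applies worldwide; the certification only for countries of concern."""
    if not is_advanced_ic(chip):
        return []
    requirements = ["export license"]
    if country_of_concern:
        requirements.append("U.S.-priority certification")
    return requirements


# Hypothetical chips with invented specs, for illustration only.
frontier_gpu = Chip(tpp=5000, pd=6.0, dram_bw_gbs=3000, interconnect_gbs=900)
edge_chip = Chip(tpp=500, pd=1.0, dram_bw_gbs=400, interconnect_gbs=100)

print(export_requirements(frontier_gpu, country_of_concern=True))
print(export_requirements(frontier_gpu, country_of_concern=False))
print(export_requirements(edge_chip, country_of_concern=True))
```

The takeaway from the sketch: an advanced chip headed to an ally still needs the license, while the right‑of‑first‑refusal certification is layered on only for countries of concern.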

🏛️ Where it sits in Congress.

• Senate: SA 3505 is filed to S.2296 (FY2026 NDAA); Senate leaders want to wrap their bill this week. Whether GAIN appears in the manager’s package, gets a standalone vote, or gets dropped is the key near‑term catalyst.

• House: A parallel right‑of‑first‑refusal concept appears as Amendment #900 (Version 5) to H.R. 3838 in the House Rules list—evidence the idea is in play on both sides of the Capitol.

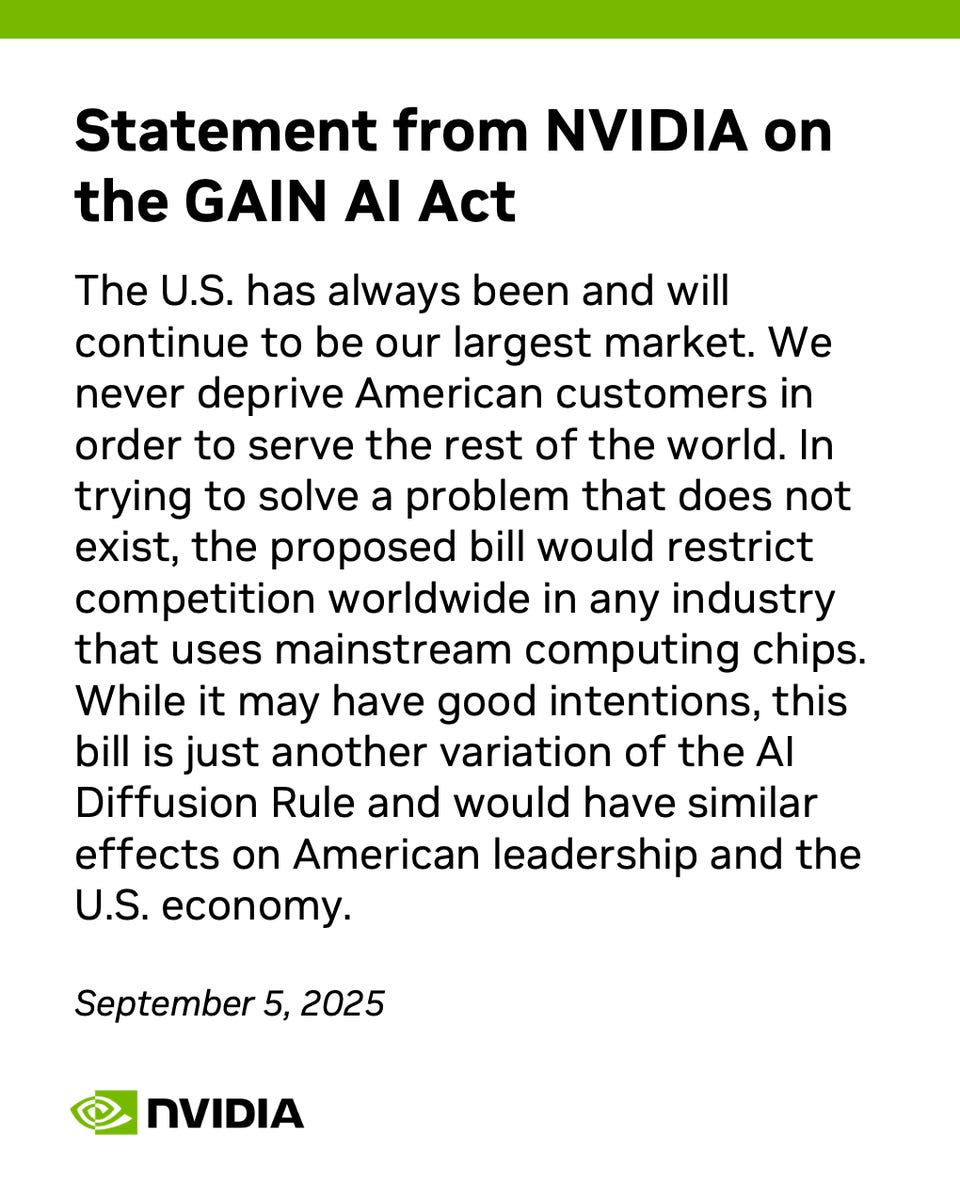

🗣️ What industry is saying. NVIDIA warned the GAIN Act “would restrict competition worldwide” and likened it to the earlier AI Diffusion framework. A spokesperson added, “We never deprive American customers in order to serve the rest of the world… [the bill] solves a problem that does not exist.” NVIDIA’s pushback underscores the risk of concentrating demand in a few U.S. hyperscalers.

🧩 How it fits with existing rules. The bill sits on top of BIS’s January 2025 interim final rule tightening advanced computing controls globally (e.g., performance‑tiered thresholds and added due‑diligence). Expect BIS rulemaking to implement whatever survives conference—watch the Federal Register and BIS press pages for compliance dates and any ally exceptions.

✅ Potential beneficiaries (if enacted).

• U.S. AI startups & university labs—less likely to be back‑ordered behind offshore buyers.

• U.S. cloud providers (AWS, Azure, Google Cloud)—more reliable access to frontier GPUs to monetize enterprise AI demand at home and in their allied-region data centers. (Investor framing, based on bill mechanics; final impact depends on Commerce licensing and supplier allocations.)

⚠️ Risks and second‑order effects (esp. for NVDA).

• TAM headwind abroad: Prioritizing domestic orders risks slowing non‑U.S. AI build‑outs that fuel global demand growth.

• Customer concentration & pricing power: More leverage accrues to a handful of U.S. hyperscalers already developing custom silicon (e.g., AWS Trainium), which can pressure NVIDIA’s margins and bargaining power over time.

• Allied friction: Even if certification targets only “countries of concern,” a global license requirement still adds paperwork and potential delays for allies—Commerce’s implementation will be decisive.

📅 Investor timeline & tripwires.

Now → mid‑September (Senate floor): Track whether SA 3505 gets a vote, is folded into the manager’s substitute, or is dropped.

Mid‑ to late‑September (House floor): Watch if the House adopts its own RFR language (e.g., Amend. #900) or punts.

October–November (Conference): Highest‑risk period for changes—many provisions die or get watered down. (Historical NDAA pattern.)

December (Final passage): NDAAs typically clear in December; signature makes it law; then BIS begins rulemaking and compliance clocks start.

Why AI/Semiconductor Investors Should Care

If GAIN’s U.S.-first priority survives, near‑term winners likely include domestic AI startups, labs, and U.S. clouds that gain steadier access to frontier GPUs, supporting revenue capture for hyperscale platforms. For NVIDIA, the trade‑off is real: the policy could protect U.S. demand in the short run yet cap international TAM, increase buyer concentration, and accelerate custom‑chip adoption—all pressures that can compress long‑run growth and pricing. The decisive variable is BIS implementation (exceptions, license timelines, and how allies are treated); that will determine whether GAIN boosts domestic availability without unintended global drag on NVDA’s multi‑year narrative.

Intel (NASDAQ: INTC)

🧭 Intel Locks In U.S. Equity Backing, SoftBank Chips In $2B — Zinsner Maps Path to 40%+ Margins & 18A Ramp

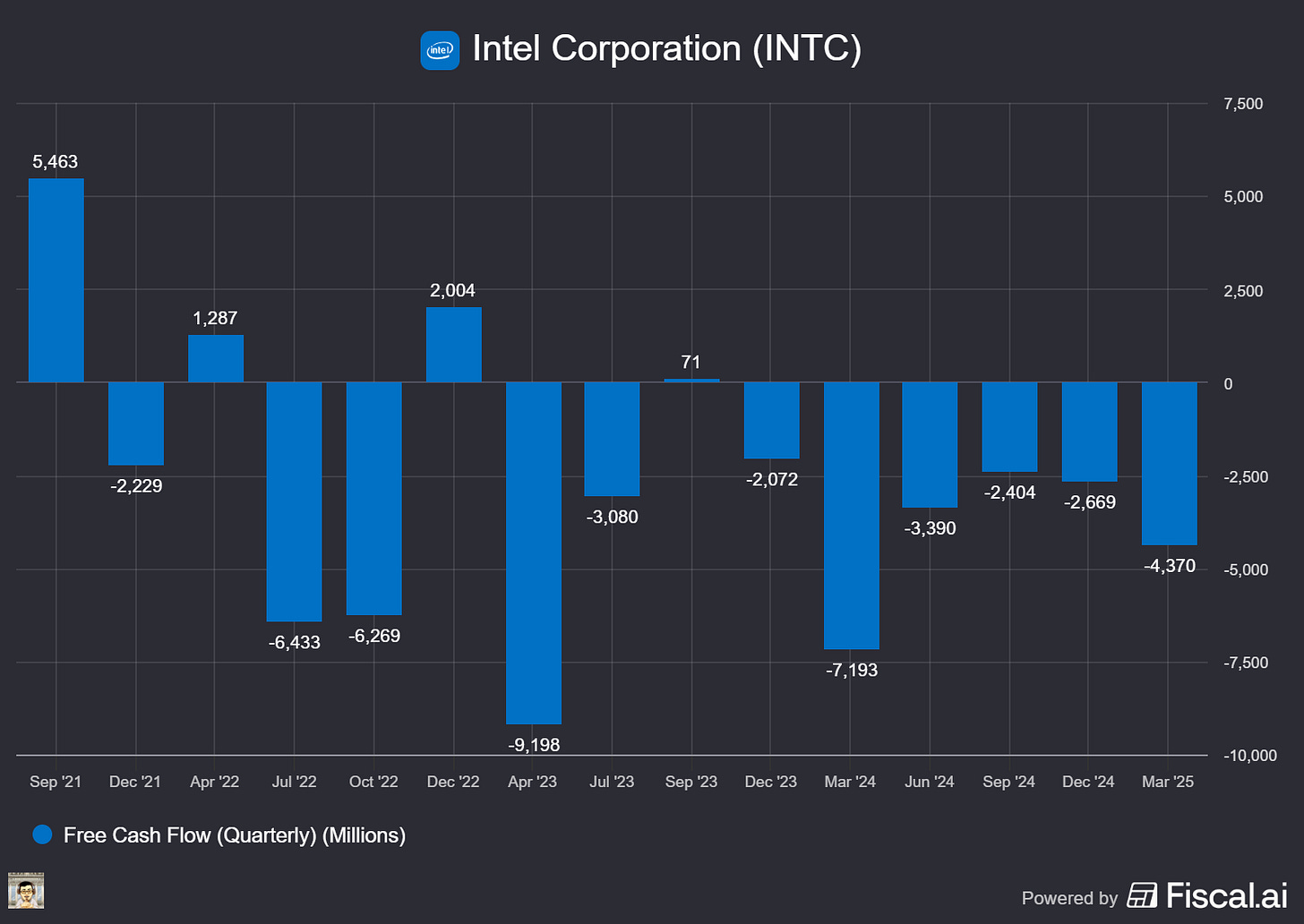

What The Chip: On September 4, 2025, CFO David Zinsner told Citi’s Global Tech audience that Intel swapped uncertain U.S. grant funding for a direct equity stake by the government, securing $5.7B (plus $3B+ tied to “Secure Enclave”) and expects $2B from SoftBank this quarter—fueling deleveraging as Intel leans on its 18A node and lines up customer demand for 14A.

Details:

💸 U.S. becomes a “silent” shareholder. Intel replaced uncertain grants (and clawbacks) with government equity: “a great deal for Intel… and a great deal for the American people,” Zinsner said. No board control; voting generally follows Intel’s board. The government also received warrants that trigger only if Intel’s ownership of the foundry business falls below 51%; Intel intends to retain ≥51%, which avoids that dilution.

🏦 Balance sheet reset via cash inflows. This quarter includes ~$1B from Mobileye share sales, an Altera transaction adding $3.5B in the “next few weeks,” $2B from SoftBank pending regulatory filings, and $5.7B from the U.S. last week. Intel plans to let $3.8B of 2025 maturities roll off without refinancing to delever and preserve liquidity.
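
A quick back-of-the-envelope tally of those figures, as a rough Python sketch. It simply adds the inflows quoted above and nets them against the 2025 maturities. It ignores timing (the Altera and SoftBank cash had not yet landed), taxes, and fees, and the variable names are ours, not Intel’s.

```python
# All figures in $ billions, as quoted in the paragraph above (approximate).
inflows_bn = {
    "Mobileye share sales": 1.0,
    "Altera transaction": 3.5,
    "SoftBank investment": 2.0,
    "U.S. government equity": 5.7,
}
maturities_rolling_off_bn = 3.8  # 2025 debt Intel plans to repay without refinancing

total_inflows_bn = sum(inflows_bn.values())
net_after_maturities_bn = total_inflows_bn - maturities_rolling_off_bn

print(f"Total inflows: ~${total_inflows_bn:.1f}B")
print(f"Left after 2025 maturities: ~${net_after_maturities_bn:.1f}B")
```

On these rounded numbers, the inflows total roughly $12.2B and cover the $3.8B of maturities with about $8.4B to spare, which is the arithmetic behind the "balance sheet reset" framing.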

🤝 Why SoftBank? CEO Lip‑Bu Tan (Intel) and Masayoshi “Masa” Son (SoftBank) have a decades‑long relationship. SoftBank wants exposure to AI; Intel Foundry is a lever. Zinsner: SoftBank “saw an opportunity… at an attractive valuation,” with the $2B expected by quarter‑end, subject to filings.

🧭 Foundry structure invites future partners (carefully). Intel carved out foundry as a subsidiary, enabling outside capital later. Don’t expect near‑term deals (“not quite investable yet”); any stake sales would be capped at 49% so that Intel keeps at least 51%, preserving control and avoiding warrant triggers.

🧪 18A = workhorse through ~2030. Yields and performance are “stabilized.” Panther Lake’s first SKU ships by year‑end 2025, with more SKUs ramping in 2026; Clearwater Forest and Diamond Rapids (server) also ride 18A. Plain English: 18A is Intel’s current leading‑edge process (≈2‑nm‑class) aimed at better performance per watt and cost.

🧮 14A economics need external customers. 14A is more expensive than 18A due to high‑NA EUV and more steps; early internal volume will stay on 18A, so external wafer demand must fill 14A’s fabs. Intel expects a read in 2026 on traction; Intel products on 14A target ~2028–2029. Plain English: 14A is the node after 18A, pairing newer lithography with a refined version of backside power (power delivered from the rear of the chip) for higher speed/efficiency, but it costs more, so customers must sign up to make returns work.

🌏 TSMC remains a strategic flex. Today Intel’s wafer mix is ~70% internal / 30% external. Lunar Lake is all TSMC; Arrow Lake is mostly outsourced. Over time the 30% should “come down,” but external foundry remains a Smart Capital lever to manage demand volatility and capex.

🧩 Operating model + margins. Intel split Products and Foundry into separate P&Ls (behavior already changing), with distinct ERP systems by end of 2027. Lip‑Bu slashed management layers from ~11 to ~6 to speed decisions and cut bureaucracy. Near‑term goal: lift gross margin from the 30s to the 40s, aided by 18A mix/cost, Panther Lake architecture vs. Lunar Lake, and tighter cost control. Zinsner: “The biggest thing… is get products out that are really competitive.”

Why AI/Semiconductor Investors Should Care

Intel just de‑risked funding, strengthened liquidity, and clarified governance—all while aligning the business around 18A as the revenue/margin engine into ~2030 and 14A as the next step only if customers show up. The setup is binary on 14A (customer wins vs. spend discipline), but near‑term catalysts—Panther Lake by year‑end 2025, the SoftBank $2B close, Altera proceeds, and visible margin progress toward the 40s—offer tangible proof points to track. If Intel converts foundry engagements into 14A tape‑outs while defending client CPU share and sharpening data‑center designs, the operating leverage could be meaningful; if not, elevated process costs and reliance on TSMC for key client parts remain the watch‑outs.

Nebius Group (NASDAQ: NBIS)

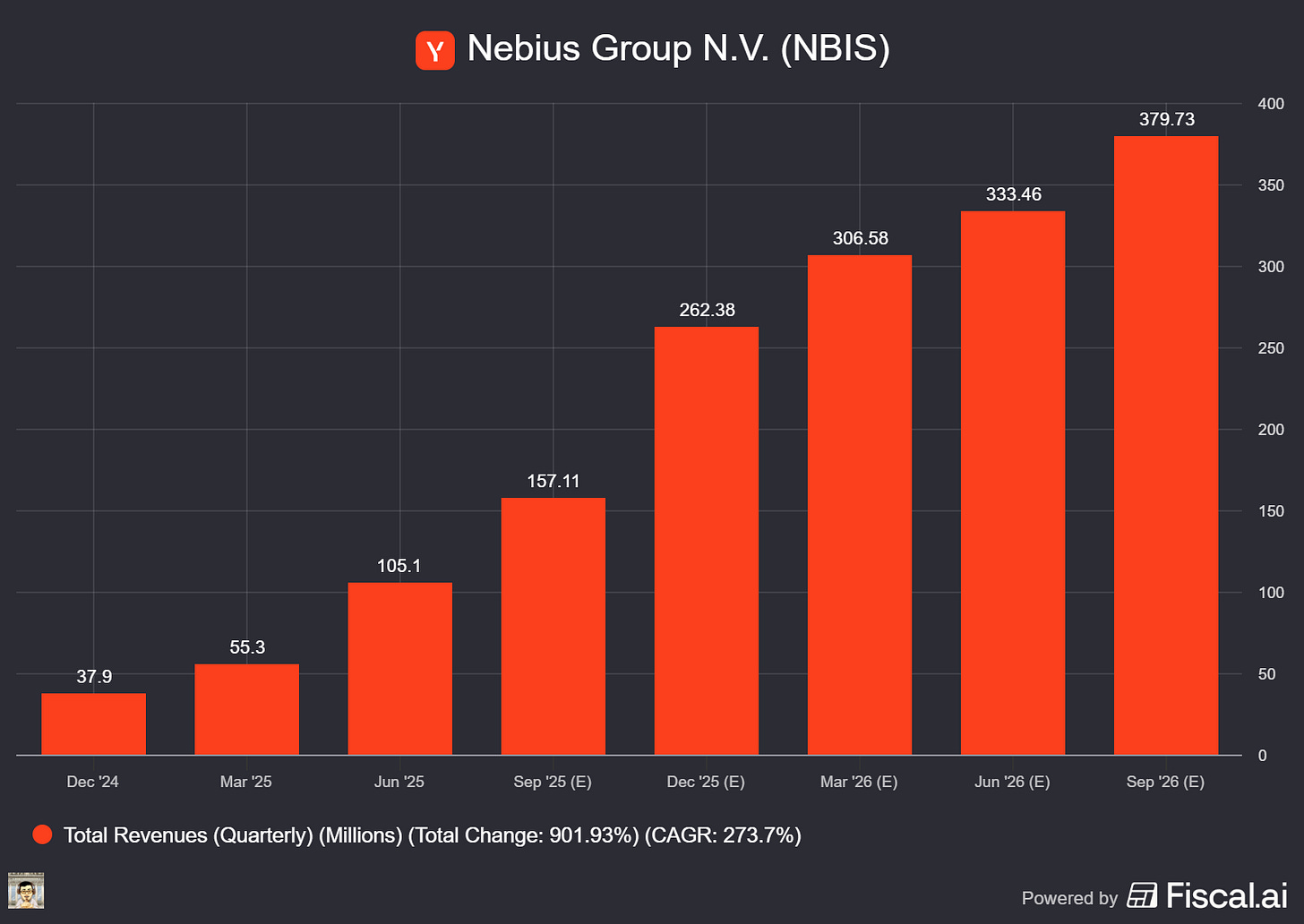

🚀 Nebius lands Microsoft in a nearly $20B AI‑compute pact

What The Chip: On September 8, 2025, Nebius signed a multi‑year AI infrastructure agreement with Microsoft: $17.4B initial value, expandable to ~$19.4B depending on uptake. Capacity will come from Nebius’s new Vineland, New Jersey data center, with first delivery later this year.

Details:

💸 Deal size, term & mechanics. The agreement gives Microsoft access to dedicated GPU capacity delivered in tranches during 2025–2026. The 6‑K pegs total contract value at about $17.4B through 2031, with options that take it to ~$19.4B. It also lays out service‑level commitments, liquidated damages for late delivery, and termination rights if scheduled capacity slips after a grace period.

🗺️ Where the GPUs live. All of this capacity is tied to Vineland, NJ, a build‑to‑suit facility that Nebius says can scale to ~300 MW. The 20‑F already disclosed the site will be dedicated to NVIDIA Blackwell/Blackwell‑Ultra silicon, with initial capacity in 2H25. As of Mar 31, 2025, Nebius had ~30,000 GPUs deployed (mostly H200) and >22,000 Blackwell GPUs on order, starting in 2025.

⚡ Power is the feature. Nebius and construction partner DataOne say Vineland will use “behind‑the‑meter” power—on‑site/adjacent generation to bypass grid bottlenecks—supporting the 300 MW design. That matters in New Jersey, where PSE&G’s large‑load queue surged to ~9.4 GW, ~90% from data centers. For local context, Vineland’s municipal utility reports ~120 MW of owned generation and ~164 MW peak load—underscoring why behind‑the‑meter is key at 300 MW scale.
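
To size that gap, here is a small Python sketch using only the megawatt figures quoted above. It compares nameplate numbers and nothing more; it says nothing about how the site will actually be powered, and the variable names are illustrative.

```python
# Megawatt figures as quoted in the paragraph above (approximate).
site_design_mw = 300               # Nebius's stated Vineland design capacity
utility_owned_generation_mw = 120  # Vineland municipal utility's owned generation
utility_peak_load_mw = 164         # the utility's reported peak load

# How large is the data center relative to the whole city's peak demand?
ratio_to_city_peak = site_design_mw / utility_peak_load_mw

# How much of the design capacity exceeds the utility's owned generation?
gap_vs_owned_generation_mw = site_design_mw - utility_owned_generation_mw

print(f"Site design vs. city peak load: {ratio_to_city_peak:.2f}x")
print(f"Capacity beyond utility-owned generation: {gap_vs_owned_generation_mw} MW")
```

At full build-out the site would draw about 1.8x the city’s current peak load and would exceed the utility’s owned generation by 180 MW, which is why on‑site or adjacent generation is central to the plan.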

🧾 Financing signal. Nebius says it will finance capex with cash flow from the contract plus debt secured by the agreement—classic contract‑backed financing that rides Microsoft’s credit. Note: In June 2025 Nebius also raised $1B via private convertible notes (2.00% 2029s / 3.00% 2031s), further broadening its capital stack.

🧪 Execution watch‑items. The 6‑K makes delivery milestones explicit and conditions the start of obligations on Nebius securing required financing. Miss a delivery (post‑grace), and Microsoft can terminate that tranche. Translation: timelines, power commissioning, and Blackwell ramps must line up.

🤝 Third‑party capacity diversification. For Microsoft, Nebius broadens third‑party AI capacity beyond CoreWeave—part of a wider “neocloud” approach alongside CoreWeave, Lambda, Crusoe, and Voltage Park. CoreWeave, for example, has a separate $11.9B/5‑year contract with OpenAI. Expect this multi‑sourcing to continue.

🟩 NVIDIA‑centric stack = incremental NVDA tailwind. Nebius’s NJ site is Blackwell‑oriented; GB200/NVL72 systems are liquid‑cooled, rack‑scale and heavily use NVLink + InfiniBand—knock‑on demand for NVIDIA networking and advanced cooling.

🏗️ Matches Big Tech commentary. Microsoft told investors it stood up >2 GW of new capacity in the last year and made every Azure region “AI‑first” with liquid‑cooling support. Alphabet just lifted 2025 capex to ~$85B, and Amazon flagged constraints in power, chips, and server yields. This deal fits that push for “capacity wherever it can come online fast.”

CEO Arkady Volozh (Nebius founder; previously co‑founded Yandex) put it plainly: “The economics of the deal are attractive in their own right,” and he expects it to accelerate the AI cloud business in 2026.

Why AI/Semiconductor Investors Should Care

This is contracted demand for NVIDIA Blackwell‑class infrastructure and a template for contract‑backed financing in the AI buildout, lowering NBIS’s cost of capital while providing Microsoft with time‑to‑capacity in a power‑constrained market. The binding constraint is megawatts, not just GPUs; Nebius’s behind‑the‑meter plan de‑risks timelines, but New Jersey’s evolving policy scrutiny of data‑center energy use is a live variable for schedules and returns. Net‑net: bullish for NVIDIA’s data‑center cycle, positive for Microsoft’s Azure capacity smoothing, and execution‑sensitive for Nebius on power, delivery, and Blackwell ramps.

YouTube Channel - Jose Najarro Stocks

X Account - @_Josenajarro

Disclaimer: This article is intended for educational and informational purposes only and should not be construed as investment advice. Always conduct your own research and consult with a qualified financial advisor before making any investment decisions.

The overview above provides key insights every investor should know, but subscribing to the premium tier unlocks deeper analysis to support your Semiconductor, AI, and Software journey. Behind the paywall, you’ll gain access to in-depth breakdowns of earnings reports, keynotes, and investor conferences across semiconductor, AI, and software companies. With multiple deep dives published weekly, it’s the ultimate resource for staying ahead in the market. Support the newsletter and elevate your investing expertise—subscribe today!

[Paid Subscribers] Ambiq Q2’25: 13.6% QoQ Growth, IPO Fuels Edge AI Push

Date of Event: September 4, 2025

Executive Summary

*Reminder: We do not talk about valuations, just an analysis of the earnings/conferences

Ambiq Micro, Inc. (NYSE: AMBQ), a designer of ultra‑low‑power chips for edge artificial intelligence (AI), reported second‑quarter 2025 net sales of $17.9 million, up 13.6% sequentially from Q1’s $15.7 million and down versus $20.3 million a year ago. Non‑GAAP gross profit rose to $7.6 million with a 42.7% margin (vs. 47.1% in Q1 and 32.9% in Q2’24). The company posted a non‑GAAP net loss of $5.9 million (or $(0.43) per share on 13.6 million unaudited pro forma shares). GAAP net loss was $8.5 million.
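
For readers who like to check the math, here is a short Python sketch that recomputes the headline ratios from the rounded figures quoted above. Because the inputs are rounded to one decimal, the results land near, but not exactly on, the reported percentages; we assume the small gaps come from the company using unrounded figures. Variable and function names are ours.

```python
# Figures in $ millions (shares in millions), as quoted above; all rounded.
q2_25_sales, q1_25_sales, q2_24_sales = 17.9, 15.7, 20.3
non_gaap_gross_profit = 7.6
non_gaap_net_loss = 5.9
pro_forma_shares_m = 13.6


def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return (new / old - 1) * 100


qoq_growth = pct_change(q2_25_sales, q1_25_sales)
yoy_growth = pct_change(q2_25_sales, q2_24_sales)
gross_margin = non_gaap_gross_profit / q2_25_sales * 100
loss_per_share = non_gaap_net_loss / pro_forma_shares_m

print(f"QoQ growth: {qoq_growth:.1f}% (reported: 13.6%)")
print(f"YoY change: {yoy_growth:.1f}%")
print(f"Non-GAAP gross margin: {gross_margin:.1f}% (reported: 42.7%)")
print(f"Non-GAAP loss per share: ${loss_per_share:.2f} (reported: $0.43)")
```

The rounded inputs give roughly 14.0% sequential growth, an 11.8% year‑over‑year decline, a 42.5% gross margin, and a $0.43 loss per share, all within rounding distance of the reported numbers.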

Management highlighted two developments: (1) Ambiq completed an upsized IPO of 4.6 million shares at $24, yielding $97.2 million in net proceeds; and (2) it expanded the Apollo5 SoC family with the new Apollo510B wireless system‑on‑chip. CEO Fumihide Esaka called the IPO “the next chapter of Ambiq’s journey” to “bring intelligence to edge devices,” adding that Ambiq’s chips have been incorporated into more than 280 million devices to date. On product direction, he said Apollo510B integrates a low‑power Bluetooth radio, making it “an ideal solution for always‑on, connected edge devices.” Looking ahead, Esaka said the IPO adds the capital to “broaden our customer base” and “penetrate new end markets and geographies.”

Growth Opportunities

Diversifying customers and geographies. Ambiq continues to pivot away from historical concentration in Mainland China. Net sales to end customers in China accounted for 11.5% of total net sales in Q2’25, down from 42% in Q2’24 (a reduction of 30.5 percentage points year over year). Management framed this as a deliberate strategy to pursue “higher value opportunities” in other regions. The company noted that this mix shift, combined with product and customer selection, contributed to the year‑over‑year improvement in non‑GAAP gross profit dollars.