AI Robotics Explosion: NVIDIA’s Jetson Thor Breakthrough, Aehr's Burn-in Boom, and IREN’s Bold GPU Bet

Welcome, AI & Semiconductor Investors,

Is NVIDIA's Jetson Thor about to redefine what's possible at the physical edge? Top adopters already include Amazon, Boston Dynamics, and Figure. Meanwhile, Aehr's hyperscaler customer doubled down with rapid-fire burn-in orders, signaling strong demand for AI chips, and IREN secured financing as it scales its Blackwell GPU cloud. Let’s Chip In.

What The Chip Happened?

🤖 Jetson Thor Goes GA: Blackwell Brings Real‑Time Robot Reasoning to the Edge

🔥 Aehr’s Hyperscaler Doubles Down on AI Chip Burn‑In Orders

🚀 IREN Doubles Its Blackwell Bet: 4,200 New GPUs, $102M Lease Secured

[Analog Devices Q3 FY25: Broad Growth, Robotics Tailwinds]

Read time: 7 minutes

Get 15% OFF FISCAL.AI — ALL CHARTS ARE FROM FISCAL.AI —

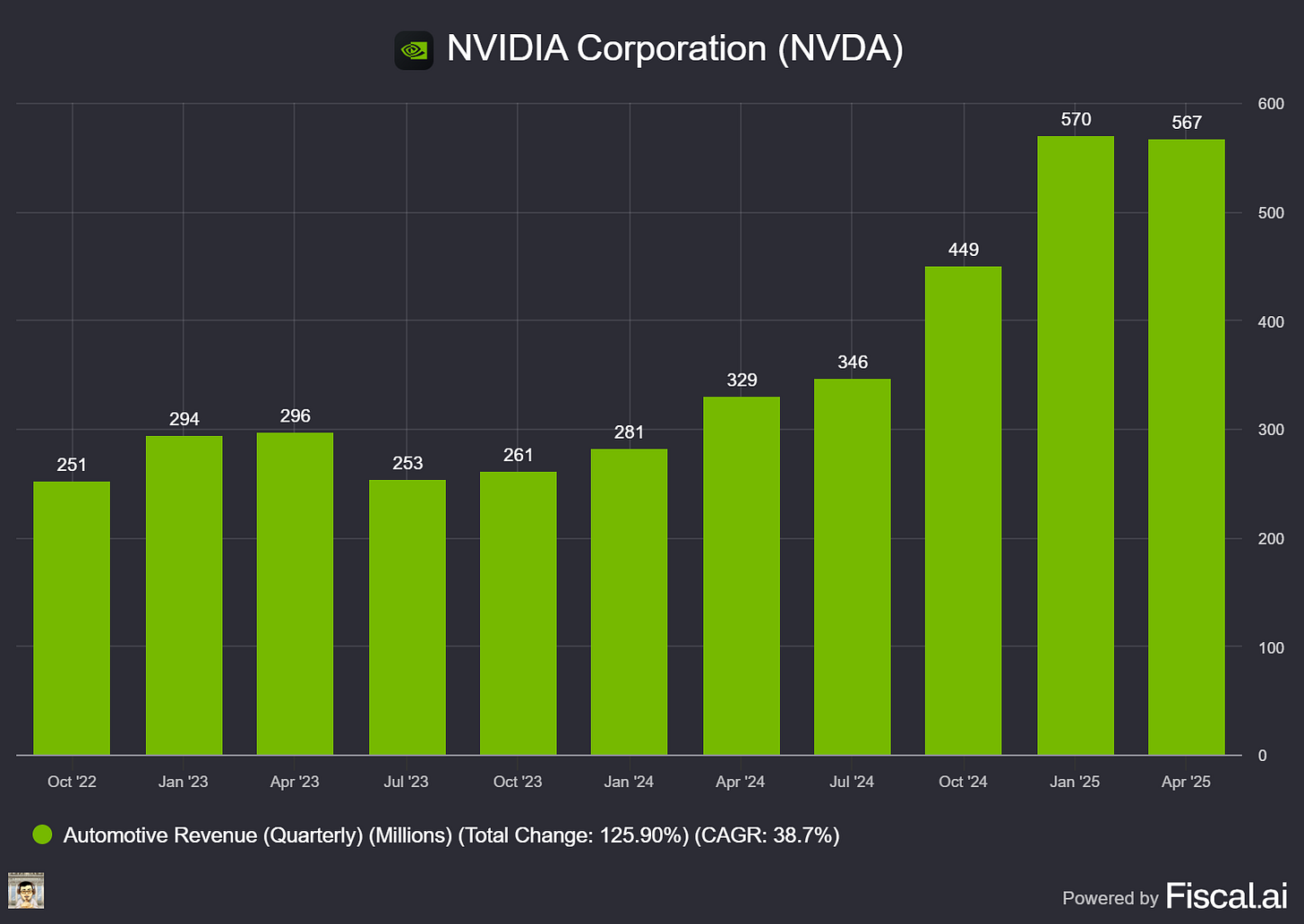

NVIDIA (NASDAQ: NVDA)

🤖 Jetson Thor Goes GA: Blackwell Brings Real‑Time Robot Reasoning to the Edge

What The Chip: On August 25, 2025, NVIDIA made the Jetson AGX Thor developer kit and Jetson T5000 production modules generally available, bringing Blackwell‑class compute to edge robots. Thor delivers up to 2,070 FP4 TFLOPs in a ~130W envelope—7.5× the AI compute and 3.5× better energy efficiency than Orin—unlocking real‑time reasoning for physical AI across humanoids, industry, healthcare, and logistics.

Details:

💵 GA & Pricing: Jetson AGX Thor developer kit starts at $3,499; Jetson T5000 modules start at $2,999 (1,000‑unit pricing). NVIDIA DRIVE AGX Thor developer kit is open for preorder with deliveries slated for September.

🧠 Compute & Memory: Up to 2,070 FP4 TFLOPs, 128GB memory, 3.1× CPU uplift and 2× memory vs. Orin—designed to run multiple generative and perception models concurrently at the edge within mobile‑robot power limits (~130W).

🧩 Stack & Models: Built on the full Jetson software stack with Isaac, Isaac GR00T N1.5 (humanoid foundation model), Metropolis (vision‑AI agents), and Holoscan (sensor processing). Supports popular LLM/VLM/VLA models (Cosmos Reason, DeepSeek, Llama, Gemini, Qwen), with expected gains from FP4 and speculative decoding optimizations. In plain English: FP4 is a 4‑bit floating‑point format for faster, cheaper inference, and speculative decoding uses a small “draft” model to speed up a larger model.

🤝 Adopters & Evaluations: Early adopters include Agility Robotics, Amazon Robotics, Boston Dynamics, Caterpillar, Figure, Hexagon, Medtronic, Meta; evaluations at 1X, John Deere, OpenAI, Physical Intelligence—broad signal across logistics, construction/mining, agriculture, medical, and consumer AI agents.

🗣️ What leaders say: Jensen Huang calls Jetson Thor “the ultimate supercomputer to drive the age of physical AI and general robotics.” Brett Adcock (Figure CEO) says its “server‑class performance” lets humanoids “perceive, reason and act in complex, unstructured environments.” Peggy Johnson (Agility Robotics CEO) expects a step‑change in Digit’s real‑time responsiveness.

🌐 Ecosystem Scale: NVIDIA cites 2M+ developers, 150+ hardware/software/sensor partners, and 7,000+ Orin customers—an on‑ramp that should compress time‑to‑deployment for Thor.

⚠️ Watch Items (Bearish/Neutral): ~130W still stresses thermals and battery life for mobile platforms; export‑control regimes may limit some customers; pricing premiums vs. legacy SoCs could slow adoption in cost‑sensitive fleets.
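For readers who want to sanity‑check the headline ratios, here is a minimal Python sketch that backs out the Orin baseline implied by the Thor figures quoted above. It uses only numbers from this section (2,070 FP4 TFLOPs, ~130W, 7.5×, 3.5×). The helper name `implied_baseline` is ours, and the result is an implied estimate, not a published NVIDIA spec. Peak figures across generations are often quoted at different precisions, so treat the output as directional.

```python
# Sanity check on the Thor vs. Orin ratios quoted above.
# All inputs come from the article; the helper name is illustrative.

def implied_baseline(tflops, watts, compute_x, efficiency_x):
    """Back out the baseline throughput and power implied by two ratios."""
    perf_per_watt = tflops / watts                 # new chip, TFLOPS per watt
    base_tflops = tflops / compute_x               # baseline peak throughput
    base_perf_per_watt = perf_per_watt / efficiency_x
    base_watts = base_tflops / base_perf_per_watt  # baseline power envelope
    return perf_per_watt, base_tflops, base_watts

thor_ppw, orin_tflops, orin_watts = implied_baseline(
    tflops=2070.0, watts=130.0, compute_x=7.5, efficiency_x=3.5
)

print(f"Thor: {thor_ppw:.1f} TFLOPS/W")
print(f"Implied Orin baseline: {orin_tflops:.0f} peak units at ~{orin_watts:.0f} W")
```

The implied baseline power comes out at roughly 60W, in line with the top power mode usually quoted for AGX Orin, which suggests the 7.5× and 3.5× claims are internally consistent.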

Why AI/Semiconductor Investors Should Care

Jetson Thor brings data‑center‑class inference to the edge, expanding NVDA’s TAM into physical AI—where latency, reliability, and safety are non‑negotiable. Blue‑chip adopters plus a mature Isaac/Jetson stack lower integration risk and support module + software monetization across robotics, industrial automation, and healthcare. The swing factor is whether humanoids/robotics ramp fast enough to turn Thor into a high‑margin growth vector.

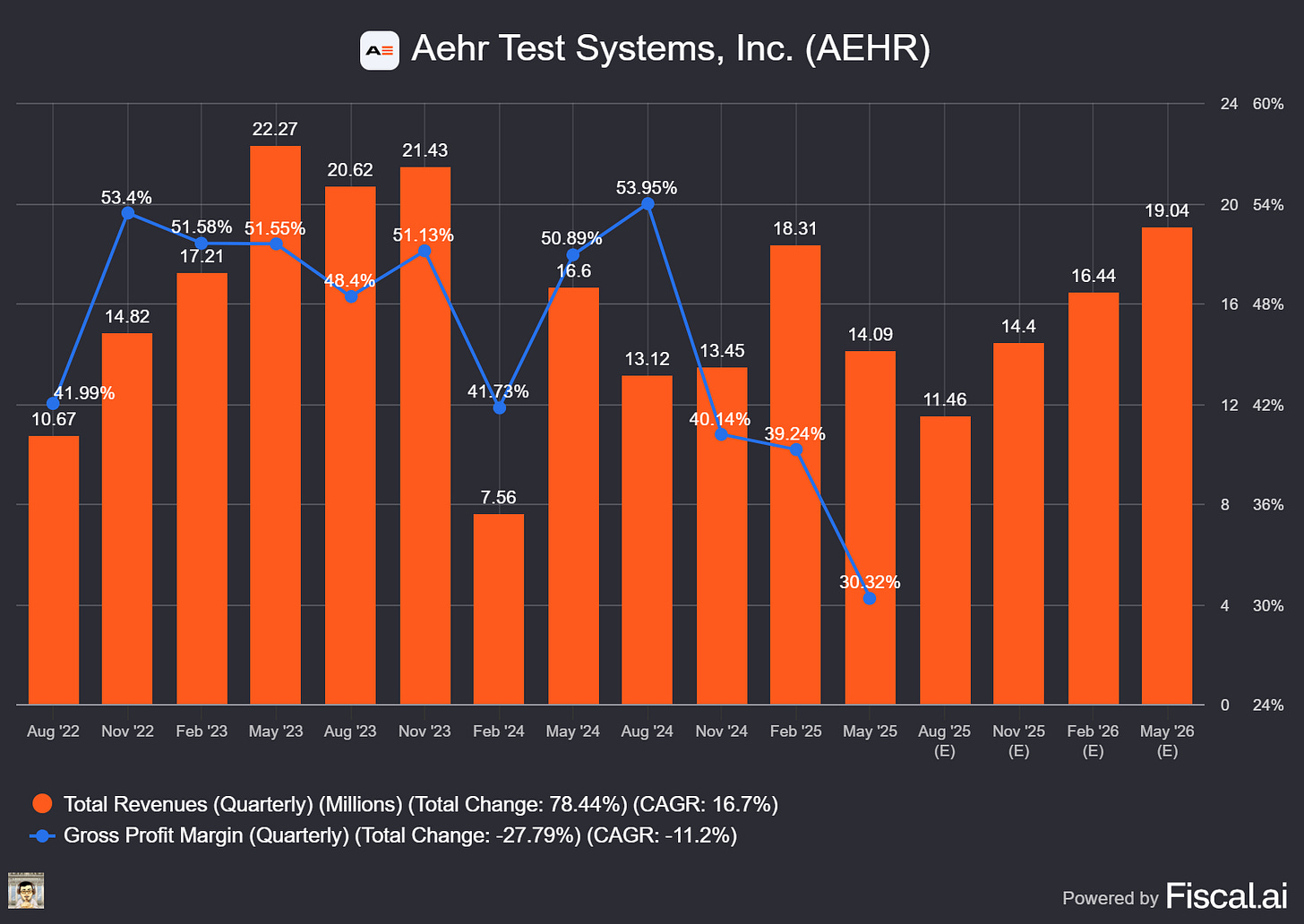

Aehr Test Systems (NASDAQ: AEHR)

🔥 Aehr’s Hyperscaler Doubles Down on AI Chip Burn‑In Orders

What The Chip: On Aug 25, 2025, Aehr said a world‑leading hyperscaler placed six more orders for its Sonoma ultra‑high‑power packaged‑part burn‑in systems to support high‑volume AI processor production, shipping from Fremont, CA, over the next two quarters.

Details:

📦 What was ordered & why: The customer, described as a top hyperscaler building its own AI processors, will use the systems for production test and burn‑in to screen early‑life failures before chips hit the data center. Shipments will occur over the next two quarters from Aehr’s Fremont facility.

➕ Ramp accelerating: This follows eight Sonoma systems ordered last month, taking this hyperscaler to 14 systems in ~5 weeks, a strong signal of capacity expansion.

🔋 High‑power envelope: Sonoma supports up to 2,000W per device and configurations with up to 88 processors per system, aligning with the extreme power densities of modern AI accelerators.

🧪 What burn‑in does (in plain English): Burn‑in runs chips under elevated heat/voltage for hours to precipitate early‑life (“infant‑mortality”) failures, improving field reliability for 24/7 data‑center duty.

🌐 Context: hyperscalers roll their own silicon: Microsoft’s Maia accelerators, AWS Trainium2, Google TPU v5p/Trillium, and Meta’s MTIA underscore the in‑house ASIC trend Aehr is tapping.

📊 Market sizing (and how to sanity‑check it): Aehr cites S&S (SNS) Insider, which projects the AI chip market growing from ~$61B (2023) to ~$621B (2032), a ~29–30% CAGR. Other reputable views vary widely: McKinsey sees total semiconductor revenue reaching $1T by 2030 with GenAI adding up to $300B, while TD Cowen pegs the AI‑processor market at ~$334B by 2030. Treat forecasts as ranges, not absolutes.

⚠️ What to watch (risk):

• Customer concentration & lumpiness: Aehr still references a single “lead” AI customer, so bookings can swing with one buyer’s order timing.

• Policy overhang: The U.S. has floated tariffs up to 100% on imported chips; industry group SIA is seeking clarity on scope/exemptions. Aehr’s U.S. manufacturing helps, but supply chains are global.
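One way to sanity‑check the market‑sizing figures in the Details above is to recompute the growth rate yourself. The sketch below is a minimal example using only the numbers quoted in this section. The constant‑growth path used to read off a 2030 value is our simplifying assumption, and the forecasts cover differently defined markets, so the comparison is rough.

```python
# Sanity check on the market-size forecasts quoted above (figures in $B).

def cagr(start, end, years):
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1

def project(start, rate, years):
    """Grow a starting value at a constant annual rate."""
    return start * (1 + rate) ** years

# Figures as cited by Aehr: ~$61B (2023) to ~$621B (2032).
sns_rate = cagr(61.0, 621.0, 2032 - 2023)

# Assumption: constant growth along that path, read off at 2030 so it can be
# set beside TD Cowen's ~$334B AI-processor estimate for the same year.
sns_2030 = project(61.0, sns_rate, 2030 - 2023)

print(f"Implied CAGR: {sns_rate:.1%}")
print(f"Implied 2030 value on that path: ~${sns_2030:.0f}B vs ~$334B (TD Cowen)")
```

The implied rate lands inside the ~29–30% range Aehr cites, and the 2030 read‑off sits somewhat above TD Cowen's figure, which is the kind of spread that argues for thinking in ranges.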

Why AI/Semiconductor Investors Should Care

This is another tangible proof‑point that hyperscaler ASIC programs are moving from pilot to scaled production, and that reliability infrastructure (burn‑in) scales with them. For Aehr, packaged-part burn-in (Sonoma) complements its wafer-level portfolio (FOX-XP), expanding the company’s TAM into AI accelerators. Aehr also highlights initial production wins that move AI system-level burn-in to wafer level for cost and scale benefits. If this hyperscaler’s roadmap (and others’) keeps expanding devices and volumes, follow‑on fixtures/modules and additional systems can drive bookings, but balance that upside against order‑timing risk and policy uncertainty.

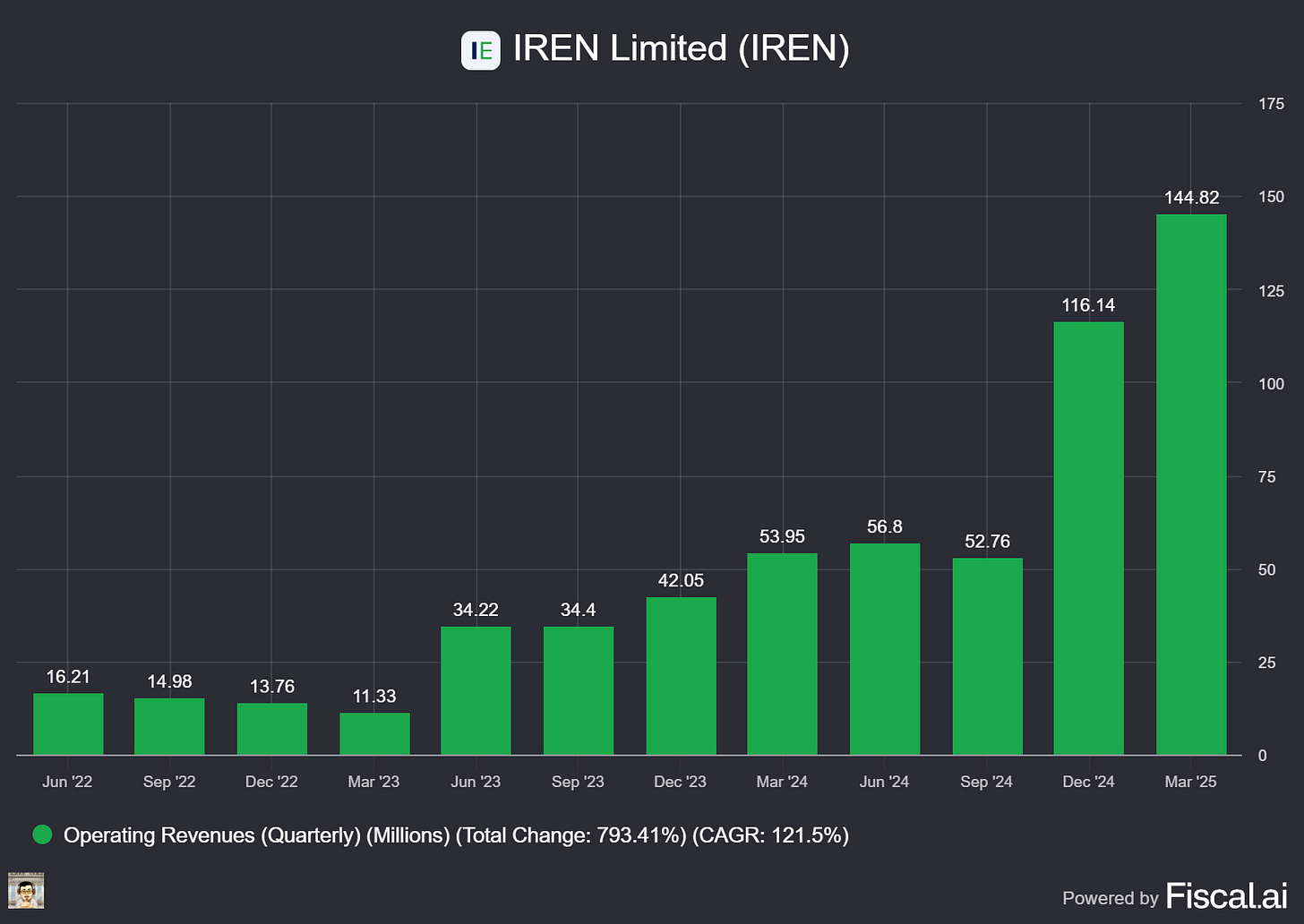

IREN (NASDAQ: IREN)

🚀 IREN Doubles Its Blackwell Bet: 4,200 New GPUs, $102M Lease Secured

What The Chip: On August 25, 2025, IREN said it bought 4,200 NVIDIA Blackwell B200 GPUs and locked in $102M of 36‑month, non‑dilutive lease financing for an earlier Blackwell order—doubling its AI Cloud to ~8,500 NVIDIA GPUs. New B200s will go into the company’s Prince George (50MW) campus.

Details:

💾 Deal specifics. IREN is paying ~$193M (servers, InfiniBand, and cabling included) for 4.2k B200s, which implies an all‑in unit cost of ~$46k/GPU ($193M ÷ 4,200). Financing talks for this batch are ongoing; the initial commitment came from existing cash.

🧾 Financing terms (prior order). IREN secured $102M of financing for its prior B200/B300 purchase via a 36‑month lease covering 100% of the GPU purchase price, with a high single‑digit interest rate. Management framed this as non‑dilutive capital.

🧠 Fleet mix now ~78% Blackwell. AI Cloud expands to ~8.5k GPUs: 0.8k H100, 1.1k H200, 5.4k B200, 1.2k B300. (5.4k+1.2k = 6.6k Blackwell, ~78% of fleet.)

🏗️ Power & runway. The Prince George, BC site has 50MW and, per IREN’s reference assumptions (PUE 1.1; ~1.93kW/GPU incl. ancillary draw), can support ~20,000 Blackwell GPUs over time. Rough math (8.5k GPUs × ~1.93kW) suggests IREN’s current fleet would need ~16MW at those assumptions, leaving growth headroom.

⛏️ Bitcoin mining stays at ~50 EH/s. IREN expects installed mining capacity of ~50 EH/s to remain by re-allocating miners to other sites, limiting disruption to its core self-mining operations.

🚀 Why Blackwell matters (in plain English). NVIDIA’s Blackwell generation (B200/B300) trains and serves bigger models faster than Hopper. In MLPerf tests, NVIDIA reports up to ~3.4× per‑GPU inference gains vs H200 on large LLMs, and the platform targets both training and high‑throughput inference.

🧭 Things to watch. (i) Utilization & pricing: revenue lift hinges on keeping these GPUs booked at attractive rates; (ii) Financing for the new 4.2k B200s is not finalized; (iii) Supply/delivery cadence for Blackwell remains tight as the 2025 ramp continues (industry trackers see Blackwell dominating 2025 high‑end shipments).

🗣️ Management voice. Co‑founder & Co‑CEO Daniel Roberts said the “expanded Blackwell capacity positions IREN to capture demand and drive the next phase of AI Cloud revenue growth.”
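The unit‑cost, fleet‑mix, and power figures in the Details above are simple enough to re‑derive. The following Python sketch does so using only numbers quoted in this section. Two simplifications are ours: it applies the ~1.93kW Blackwell assumption to every GPU in the fleet, and it shows the power result both with and without PUE stacked on top, since the text does not say whether the per‑GPU figure already includes it.

```python
# Back-of-envelope checks on the IREN figures quoted above.

purchase_usd = 193e6          # ~$193M all-in for the new order
new_gpus = 4200
unit_cost = purchase_usd / new_gpus

fleet = {"H100": 800, "H200": 1100, "B200": 5400, "B300": 1200}
total_gpus = sum(fleet.values())
blackwell_share = (fleet["B200"] + fleet["B300"]) / total_gpus

KW_PER_GPU = 1.93             # IREN reference assumption, incl. ancillary draw
PUE = 1.1                     # IREN reference assumption
SITE_MW = 50.0                # Prince George campus

def power_mw(gpus, apply_pue):
    """Power in MW; whether PUE stacks on top of kW/GPU is an assumption."""
    mw = gpus * KW_PER_GPU / 1000
    return mw * PUE if apply_pue else mw

print(f"Unit cost: ~${unit_cost:,.0f} per GPU")
print(f"Fleet: {total_gpus:,} GPUs, {blackwell_share:.0%} Blackwell")
for label, gpus in [("current fleet", total_gpus), ("20k target", 20_000)]:
    low, high = power_mw(gpus, False), power_mw(gpus, True)
    print(f"{label}: {low:.1f} to {high:.1f} MW of {SITE_MW:.0f} MW")
```

Under either reading of PUE, the ~20,000‑GPU scenario stays below the site's 50MW, which is consistent with the headroom described above.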

Why AI/Semiconductor Investors Should Care

For IREN, this is a scale and mix shift: moving from a bitcoin‑first operator toward a GPU‑rich AI Cloud with a Blackwell‑heavy fleet that can price at premium rates when demand is tight. The non‑dilutive, lease‑based financing reduces equity risk while freeing cash for further growth, and the 50MW Prince George runway offers a clear expansion path toward ~20k GPUs. The flip side: returns will depend on time‑to‑deploy, utilization, and all‑in cost of capital as Blackwell availability ramps and market pricing evolves. If IREN executes, the move could diversify revenue and de‑risk exposure to bitcoin cycles; if not, investors face under‑utilized capex and margin pressure.
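To put a rough number on the cost of capital, here is a hedged sketch of what a $102M, 36‑month lease could cost each month. IREN disclosed only a "high single‑digit" rate, so the 7%, 8%, and 9% rates below are placeholders, and the level‑payment, no‑residual structure is our assumption rather than a disclosed term.

```python
# Illustrative lease math. ASSUMPTIONS: level monthly payments, no residual
# value, and 7-9% annual rates standing in for "high single-digit".

def monthly_payment(principal, annual_rate, months):
    """Level payment on a fully amortizing loan or lease."""
    r = annual_rate / 12
    if r == 0:
        return principal / months
    return principal * r / (1 - (1 + r) ** -months)

principal = 102e6   # financing amount from the announcement
months = 36         # lease term from the announcement

for rate in (0.07, 0.08, 0.09):
    pay = monthly_payment(principal, rate, months)
    financing_cost = pay * months - principal
    print(f"{rate:.0%}: ~${pay / 1e6:.2f}M per month, "
          f"~${financing_cost / 1e6:.1f}M total financing cost")
```

Under these assumptions the payment is a little over $3M per month, which is the hurdle the financed GPUs need to clear in rental revenue before contributing margin.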

YouTube Channel - Jose Najarro Stocks

X Account - @_Josenajarro

Disclaimer: This article is intended for educational and informational purposes only and should not be construed as investment advice. Always conduct your own research and consult with a qualified financial advisor before making any investment decisions.

The overview above provides key insights every investor should know, but subscribing to the premium tier unlocks deeper analysis to support your Semiconductor, AI, and Software journey. Behind the paywall, you’ll gain access to in-depth breakdowns of earnings reports, keynotes, and investor conferences across semiconductor, AI, and software companies. With multiple deep dives published weekly, it’s the ultimate resource for staying ahead in the market. Support the newsletter and elevate your investing expertise—subscribe today!

[Paid Subscribers] Analog Devices Q3 FY25: Broad Growth, Robotics Tailwinds

Date of Event: August 20, 2025

Executive Summary

Reminder: We do not discuss valuations; this is only an analysis of the earnings reports and conferences.

Analog Devices (NASDAQ: ADI) reported fiscal third‑quarter 2025 revenue of $2.88 billion, up 25% year over year and 9% sequentially, with double‑digit growth across all end markets. Adjusted gross margin reached 69.2% and adjusted operating margin came in at 42.2%, driving adjusted EPS of $2.05. Trailing twelve‑month operating cash flow totaled $4.2 billion (about 40% of revenue) and free cash flow reached $3.7 billion (35% of revenue). The company returned $1.6 billion to shareholders in Q3 via $0.5 billion in dividends and $1.1 billion in buybacks; the Board declared a $0.99 quarterly dividend payable September 16, 2025 to holders of record on September 2, 2025.

Management said results exceeded the high end of expectations despite geopolitical uncertainty. CEO and Chair Vincent Roche stated, “ADI’s third‑quarter revenue and earnings per share exceeded the high end of our expectations… our diverse and resilient business model enables ADI to navigate various market conditions and consistently create long‑term value for our shareholders.” CFO Richard Puccio added, “We closed the third quarter with continued backlog growth and healthy bookings trends, notably in the Industrial end market.”

Looking ahead to fiscal Q4, ADI guides revenue to $3.0 billion ± $100 million, with reported operating margin ~30.5% and adjusted operating margin ~43.5%. Management is planning for reported EPS of $1.53 ± $0.10 and adjusted EPS of $2.22 ± $0.10.
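To see what the guide implies against Q3 actuals, the short sketch below converts the "midpoint ± range" figures into sequential growth rates. It uses only the revenue and adjusted EPS numbers from this summary; the helper names are ours.

```python
# Guidance ranges from the summary above, compared with Q3 actuals.

Q3_REVENUE_B = 2.88   # Q3 revenue, $B
Q3_ADJ_EPS = 2.05     # Q3 adjusted EPS, $

def guided_range(midpoint, half_width):
    """Return (low, mid, high) for a 'midpoint +/- half_width' guide."""
    return midpoint - half_width, midpoint, midpoint + half_width

def growth(new, old):
    """Fractional change from old to new."""
    return new / old - 1

revenue_guide = guided_range(3.0, 0.1)     # $B
adj_eps_guide = guided_range(2.22, 0.10)   # $

for name, guide, base in [
    ("Revenue", revenue_guide, Q3_REVENUE_B),
    ("Adjusted EPS", adj_eps_guide, Q3_ADJ_EPS),
]:
    low, mid, high = (growth(g, base) for g in guide)
    print(f"{name}: {low:+.1%} / {mid:+.1%} / {high:+.1%} sequential (low / mid / high)")
```

At the midpoint, the guide implies revenue growing about 4% sequentially and adjusted EPS growing about 8%, so earnings are guided to grow faster than sales.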

Growth Opportunities

Industrial automation and advanced robotics. Industrial accounted for 45% of Q3 sales, up 12% sequentially and 23% year over year, and management expects it to grow low‑to‑mid teens sequentially in Q4, a period that is typically seasonally down. Roche highlighted accelerating demand across instrumentation, automation, health care, aerospace and defense, and energy management. He focused on the $1‑billion‑plus industrial automation business, which recently returned to double‑digit growth and, by ADI’s assessment, can double by 2030 given the opportunity pipeline.
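For a sense of what "double by 2030" requires, the sketch below computes the annual growth rate needed to double over a few horizons. The base year is our assumption (management's exact starting point is not stated here), so three horizons are shown. The last line sizes the broader Industrial segment, of which industrial automation is one part, from the 45% share and $2.88 billion of Q3 revenue.

```python
# Growth rate needed to double a business over a given number of years.

def required_cagr(multiple, years):
    """Annual growth rate that turns 1.0 into `multiple` in `years` years."""
    return multiple ** (1 / years) - 1

# Assumption: the base year is not specified, so show a few horizons.
for years in (4, 5, 6):
    print(f"Doubling in {years} years needs ~{required_cagr(2.0, years):.1%} per year")

# Industrial segment revenue in Q3, from figures in this report ($B).
industrial_q3_b = 0.45 * 2.88
print(f"Industrial Q3 revenue: ~${industrial_q3_b:.2f}B")
```

With fiscal 2025 as the base, doubling by 2030 works out to roughly 15% annual growth, broadly in line with the double‑digit growth the automation business has recently returned to.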