Hyperscaler Hunger: Aehr’s AI Burn-In Surge, Vertiv’s Power Leap, and OpenAI’s Gigawatt Gamble

Welcome, AI & Semiconductor Investors,

What happens when AI compute scales from gigawatts to gigadollars? Aehr Test Systems sees explosive demand as hyperscalers rush for high-volume AI burn-in systems; Vertiv positions itself at the center of NVIDIA’s massive push toward 800 VDC infrastructure; and OpenAI redefines the AI landscape with transformative multi-gigawatt chip deals. As compute intensity climbs, the stakes for semiconductor investors are rising even faster. Let’s Chip In.

What The Chip Happened?

🔥 Aehr Test Systems: Hyperscaler Burn‑In Orders Signal AI Ramp

⚡ Vertiv + NVIDIA push 800V DC from concept to “engineering‑ready” for AI factories

🚀 OpenAI’s Three‑Way Chip Play: From Buyer to Builder

[Aehr Test Systems Starts Fiscal 2026: AI Buzz Builds, Margins Take a Hit]

Read time: 7 minutes

Get 15% OFF FISCAL.AI — ALL CHARTS ARE FROM FISCAL.AI —

Aehr Test Systems (NASDAQ: AEHR)

🔥 Aehr Test Systems: Hyperscaler Burn‑In Orders Signal AI Ramp

What The Chip: On October 6, 2025, Aehr reported Q1 FY26 results showing smaller revenue but growing momentum in AI chip test & burn‑in across both package and wafer levels. A “world‑leading hyperscaler” placed follow‑on production orders for Aehr’s new Sonoma packaged‑part burn‑in systems, while Aehr highlighted the first production WLBI (wafer‑level burn‑in) systems for AI processors at a premier OSAT.

Details:

⚡ Hyperscaler ramp on Sonoma: A lead hyperscaler “placed multiple follow‑on volume production orders…and requested shorter lead times to support higher‑than‑expected volumes,” with plans to expand capacity and “introduce new AI processors…to be tested and burned in on our Sonoma platform” at a top OSAT, said CEO Gayn Erickson. Aehr is co‑developing burn‑in for future gens at both package and wafer levels.

🧪 WLBI beachhead at scale: Aehr says it delivered the world’s first production WLBI systems for AI processors, installed at a premier global OSAT—a visible showcase that is already drawing approaches from other AI suppliers. Aehr is also partnering with this OSAT on advanced wafer‑level test & burn‑in for HPC/AI, offering a turnkey path from design to high‑volume production.

🛠️ Power & automation upgrades: Since acquiring Incal, Aehr raised Sonoma’s power‑per‑device to 2,000W, increased parallelism, and added full automation via an integrated device handler. Ten companies visited Fremont last quarter to see the enhanced system; feedback was “very positive,” and management expects these features to drive new applications and orders.

📡 Silicon photonics & HDD tailwinds: Aehr upgraded a major silicon photonics customer’s FOX‑XP to 3.5kW per wafer in a nine‑wafer configuration with a fully integrated WaferPak Aligner for single‑touchdown wafer‑level burn‑in—more orders expected this fiscal year. In storage, multiple FOX‑CP systems shipped to a top HDD supplier to stabilize next‑gen read/write heads as AI data growth boosts HDD demand.

⚙️ Power semis (GaN & SiC): Engagements expanded in GaN (data center power, auto, energy); Aehr is building a “large number” of WaferPaks for new designs. SiC growth should skew 2H FY26 with upgrades, WaferPaks, and capacity additions. Aehr highlighted shipment of its first 18‑wafer high‑voltage FOX‑XP (±2,000V) to test 100% of EV inverter devices in one pass.

🧠 Memory opportunity—HBF on deck: Aehr’s NAND WLBI benchmark progressed with a new fine‑pitch WaferPak. Meanwhile, High‑Bandwidth Flash (HBF)—pitched as 8–16× the capacity of HBM at similar cost—is shifting tester requirements upward in power and parallelism; Aehr is preparing proposals to meet these needs within its 18‑wafer FOX‑XP infrastructure. Separately, a paid WLBI evaluation with a top‑tier AI processor supplier includes a custom high‑power WaferPak and production test program.

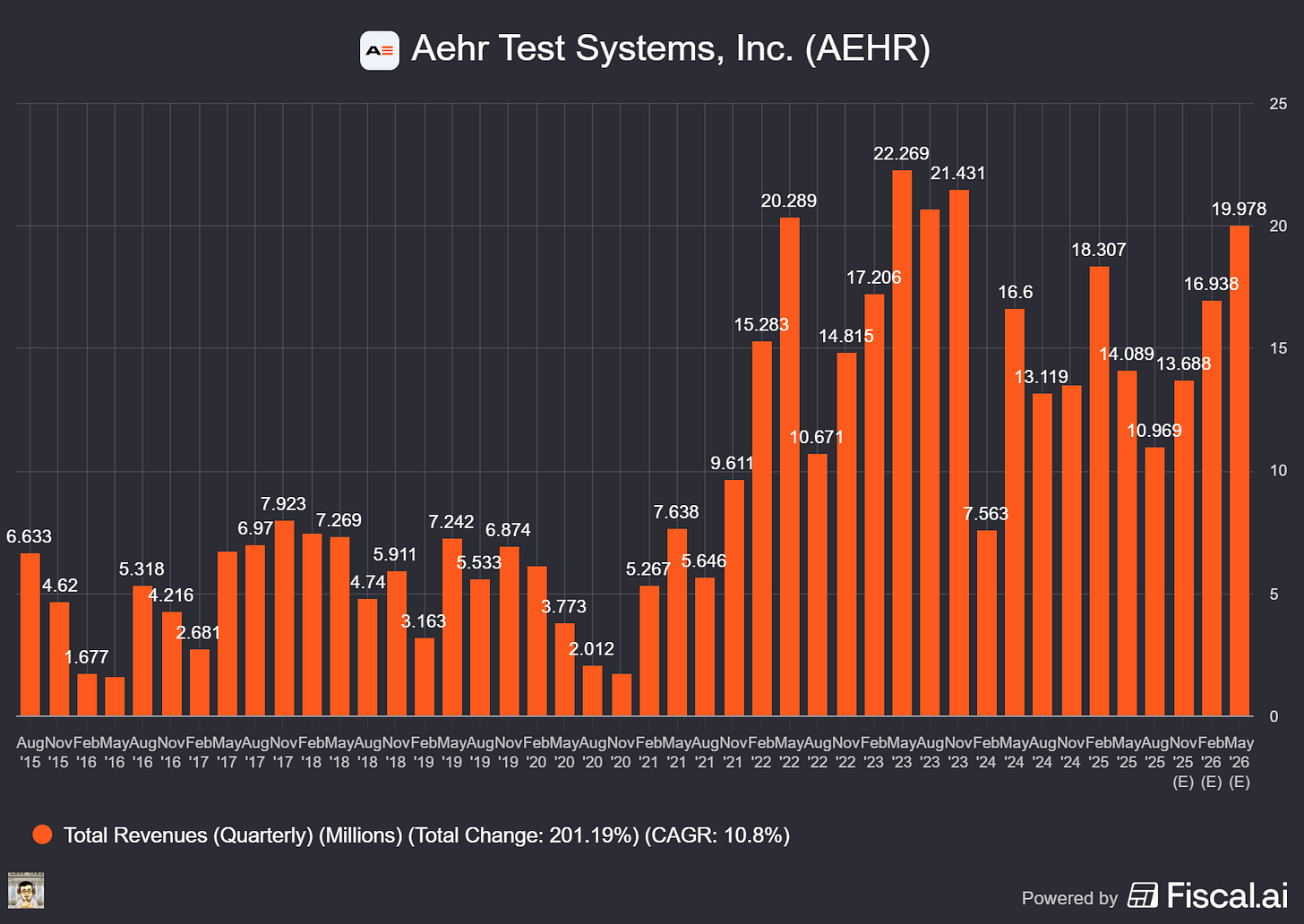

💰 Numbers that matter (Q1 FY26, ended Aug 29): Revenue $11.0M (↓ 16% YoY); GAAP net loss $(2.1)M or $(0.07); non‑GAAP net income $0.2M or $0.01. Non‑GAAP GM 37.5% (down from 54.7% on lower volume/mix and more third‑party content). Bookings $11.4M (~1.04× book‑to‑bill); backlog $15.5M, effective backlog $17.5M. Cash $24.7M, no debt. Facility renovation now complete ($6.3M total), boosting manufacturing capacity ≥5×. CFO Chris Siu: “We believe investment in this facility renovation has increased our overall manufacturing capacity by at least 5x…and we are more ready than ever to support the growth of our customers.”

🧭 Guidance & watch‑outs: Results beat Street consensus for the quarter, but management withheld formal guidance due to 2025 tariff‑related uncertainty. Mix is a swing factor: consumables were $2.6M (24% of revenue) vs $12.1M (92%) in last year’s Q1, pressuring margin. Concentration risk remains (lead hyperscaler), WLBI adoption/evaluations take time, and early AI programs can shift between package‑level and wafer‑level approaches. Still, Erickson summed it up: “We’re excited about the year ahead…nearly all our served markets will see order growth…though we remain cautious due to tariff‑related uncertainty.”
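For readers who want to check the ratios quoted in the bullets above, here is a minimal Python sketch. The dollar figures are the ones reported in this article (the $13.1M prior-year revenue comes from the Financial Snapshot further down); the variable and function names are illustrative only.

```python
# Figures quoted in this newsletter, in USD millions. Names are illustrative.
revenue_q1_fy26 = 11.0
revenue_q1_fy25 = 13.1   # prior-year Q1 revenue, from the Financial Snapshot
bookings_q1_fy26 = 11.4
consumables_q1_fy26 = 2.6
consumables_q1_fy25 = 12.1


def pct_change(new, old):
    """Percentage change from old to new."""
    return (new - old) / old * 100


def share_of(part, whole):
    """Part as a percentage of whole."""
    return part / whole * 100


revenue_yoy = pct_change(revenue_q1_fy26, revenue_q1_fy25)
book_to_bill = bookings_q1_fy26 / revenue_q1_fy26
consumables_mix_fy26 = share_of(consumables_q1_fy26, revenue_q1_fy26)
consumables_mix_fy25 = share_of(consumables_q1_fy25, revenue_q1_fy25)

print(f"Revenue YoY change: {revenue_yoy:.1f}%")
print(f"Book-to-bill: {book_to_bill:.2f}x")
print(f"Consumables mix: {consumables_mix_fy26:.0f}% now vs {consumables_mix_fy25:.0f}% a year ago")
```

A book-to-bill just above 1.0 means orders slightly outpaced shipments in the quarter. The drop in consumables mix is the piece most directly tied to the margin compression described above.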

Why AI/Semiconductor Investors Should Care

Reliability is now a first‑order constraint in AI data centers—high‑power processors and advanced packages (multi‑die compute + HBM) can destroy yield if early failures slip through. Aehr sells the screening step: burn‑in at package level (Sonoma) and increasingly at wafer level (FOX‑XP). Shifting left (WLBI) can cut scrap of costly CoWoS‑style modules and reduce rack‑level burn‑in power/time—clear economics if volumes scale. The OSAT showcase, hyperscaler orders, and 5× capacity set Aehr up for multi‑year AI demand, with optionality in silicon photonics, HDD, GaN, and a potential HBF wave. Balance that with margin mix, order timing, and tariff risks—but if WLBI becomes standard for AI/HPC, Aehr’s high‑power niche looks strategically leveraged.


Vertiv (NYSE: VRT)

⚡ Vertiv + NVIDIA push 800V DC from concept to “engineering‑ready” for AI factories

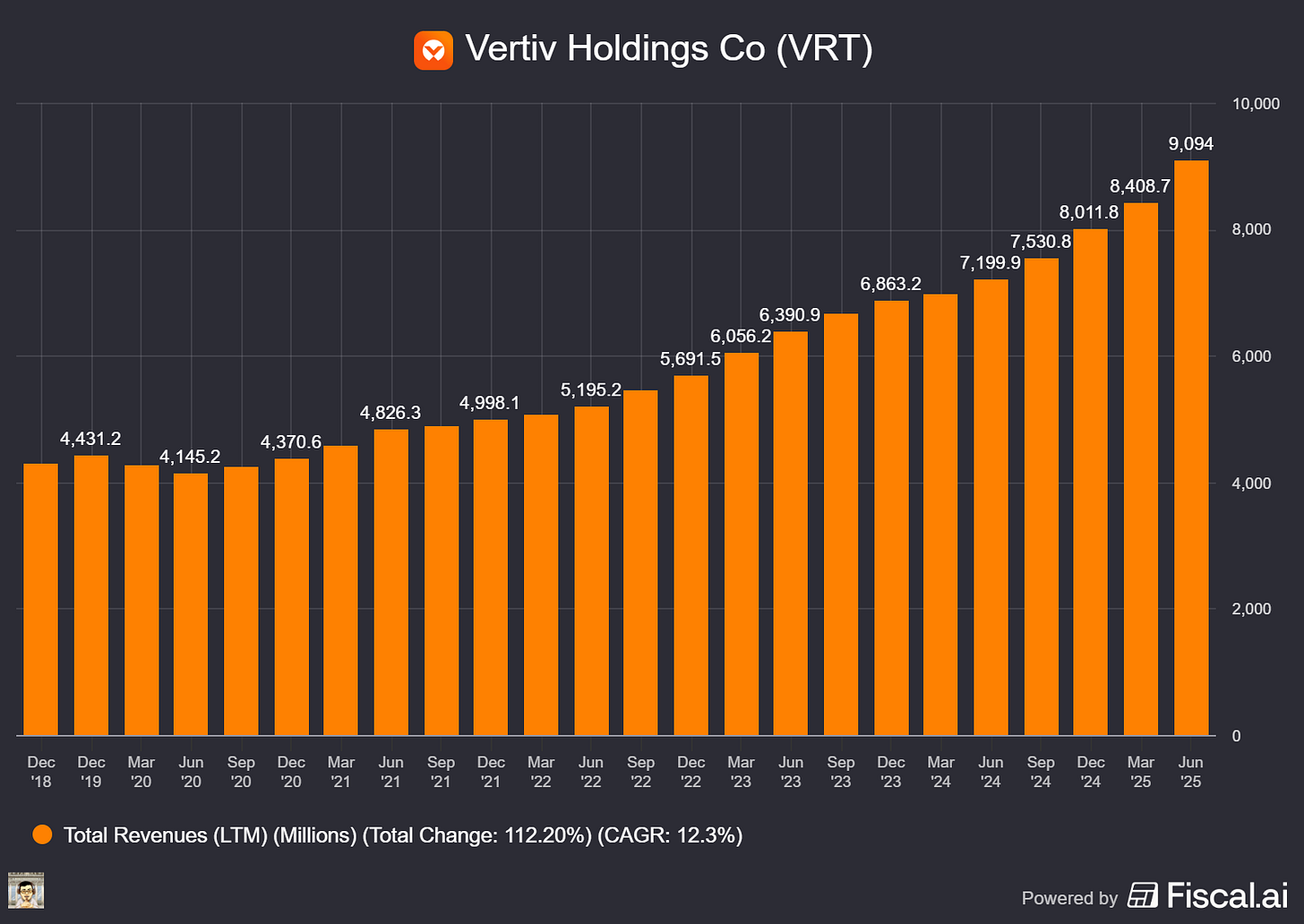

What The Chip: On October 13, 2025, Vertiv said its 800 VDC power platform has advanced from concept to engineering readiness, with first releases planned for 2H26—timed to support NVIDIA’s Rubin Ultra rollout in 2027. The work stems from a collaboration announced earlier this year.

Details:

🚀 Timeline & scope. Vertiv moved its 800 VDC “unit of compute” platform toward production, targeting 2H26 availability to match NVIDIA’s rack‑scale roadmap for Rubin Ultra in 2027.

🔌 Why 800 VDC now. Today’s 54 VDC in‑rack distribution—built for kilowatt racks—hits limits as AI racks push toward megawatt class. Higher voltage slashes current for the same power, which cuts resistive losses and copper mass while reducing conversion stages. NVIDIA frames 800 VDC as the path to support ≥1 MW per rack and improve end‑to‑end power efficiency (they cite up to ~5% efficiency gain and up to ~30% TCO reduction from architectural changes).

🧩 What Vertiv is building. System‑level platform designs include centralized rectifiers, high‑efficiency DC busways, rack‑level DC‑DC converters, and energy‑storage integration to stabilize large, synchronous AI loads.

🗣️ Management voice. Scott Armul (EVP, Vertiv) says, “Larger AI workloads are reshaping every aspect of data center design,” adding Vertiv’s AC/DC systems know‑how positions it to meet unprecedented power demand. NVIDIA’s Dion Harris underscores the need for a “fundamental shift in power architectures” to unlock next‑gen AI infrastructure.

🏭 Designs under test. Vertiv says its reference and product designs are being validated on real projects and scaled against gigawatt‑scale campus requirements—an early indicator that customers are planning for multi‑GW “AI factories.”

🛡️ Service moat. Safety and serviceability are pivotal at 800 VDC. Vertiv highlights its 4,000+ field engineers and DC/AC service track record as a differentiation point for mission‑critical operations.

📈 Market reaction. Shares of VRT jumped intraday to a record high on the news; Reuters cited an intraday move to $184.36, with the stock up ~7% on the day.

🧠 NVIDIA tie‑in & scale. NVIDIA’s OCP‑tied roadmap points to Kyber racks connecting 576 Rubin Ultra GPUs by 2027, with broad ecosystem support for 800 VDC infrastructure—context for why power vendors like Vertiv are aligning now.
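The "higher voltage slashes current" point in the list above follows from two textbook relations: I = P / V and P_loss = I² × R. The sketch below compares a 54 VDC and an 800 VDC bus feeding the same 1 MW rack. The path resistance is a hypothetical value chosen only for illustration, and holding it equal at both voltages is a simplifying assumption; real designs would resize conductors.

```python
# Sketch: current and resistive loss for the same rack power at two bus voltages.
# RACK_POWER_W reflects the ~1 MW-per-rack figure cited above.
# PATH_RESISTANCE_OHM is a hypothetical value, not a Vertiv or NVIDIA spec.
RACK_POWER_W = 1_000_000
PATH_RESISTANCE_OHM = 0.0001


def bus_current(power_w, voltage_v):
    """Current needed to deliver power_w at voltage_v (I = P / V)."""
    return power_w / voltage_v


def resistive_loss(current_a, resistance_ohm):
    """Power dissipated in the conductor path (P_loss = I^2 * R)."""
    return current_a ** 2 * resistance_ohm


results = {}
for voltage in (54, 800):
    current = bus_current(RACK_POWER_W, voltage)
    loss = resistive_loss(current, PATH_RESISTANCE_OHM)
    results[voltage] = (current, loss)
    print(f"{voltage} VDC: {current:,.0f} A, {loss:,.0f} W lost in the path")

current_ratio = results[54][0] / results[800][0]
loss_ratio = results[54][1] / results[800][1]
print(f"Current is {current_ratio:.1f}x lower at 800 VDC")
print(f"I^2*R loss is {loss_ratio:.0f}x lower at 800 VDC for the same conductor")
```

Under these assumptions, a 1 MW rack draws roughly 18,500 A at 54 VDC versus 1,250 A at 800 VDC. In practice, designers are likely to take part of that benefit as thinner copper rather than purely as lower loss, which is where the copper-mass savings come from.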

Why AI/Semiconductor Investors Should Care

Power has become the gating factor for AI compute. If 800 VDC really trims conversion losses and copper usage while enabling ≥1 MW racks, it lifts usable power density and floor‑space efficiency, both key drivers of $/TFLOP capex and opex. That creates a larger TAM for Vertiv across rectification, busway, DC‑DC, energy storage, controls, and long‑tail services, and ties the company tightly to NVIDIA’s rack‑scale roadmap. Risks: execution and safety/standards at 800 VDC, a multi‑year product ramp (first releases in 2H26), and competitive responses from other power OEMs. But if NVIDIA’s claimed efficiency/TCO gains prove out at scale, 800 VDC becomes foundational to AI factory economics, and Vertiv’s early readiness positions it to capture an outsized share of that transition.

OpenAI (Private), Broadcom (NASDAQ: AVGO)

🚀 OpenAI’s Three‑Way Chip Play: From Buyer to Builder

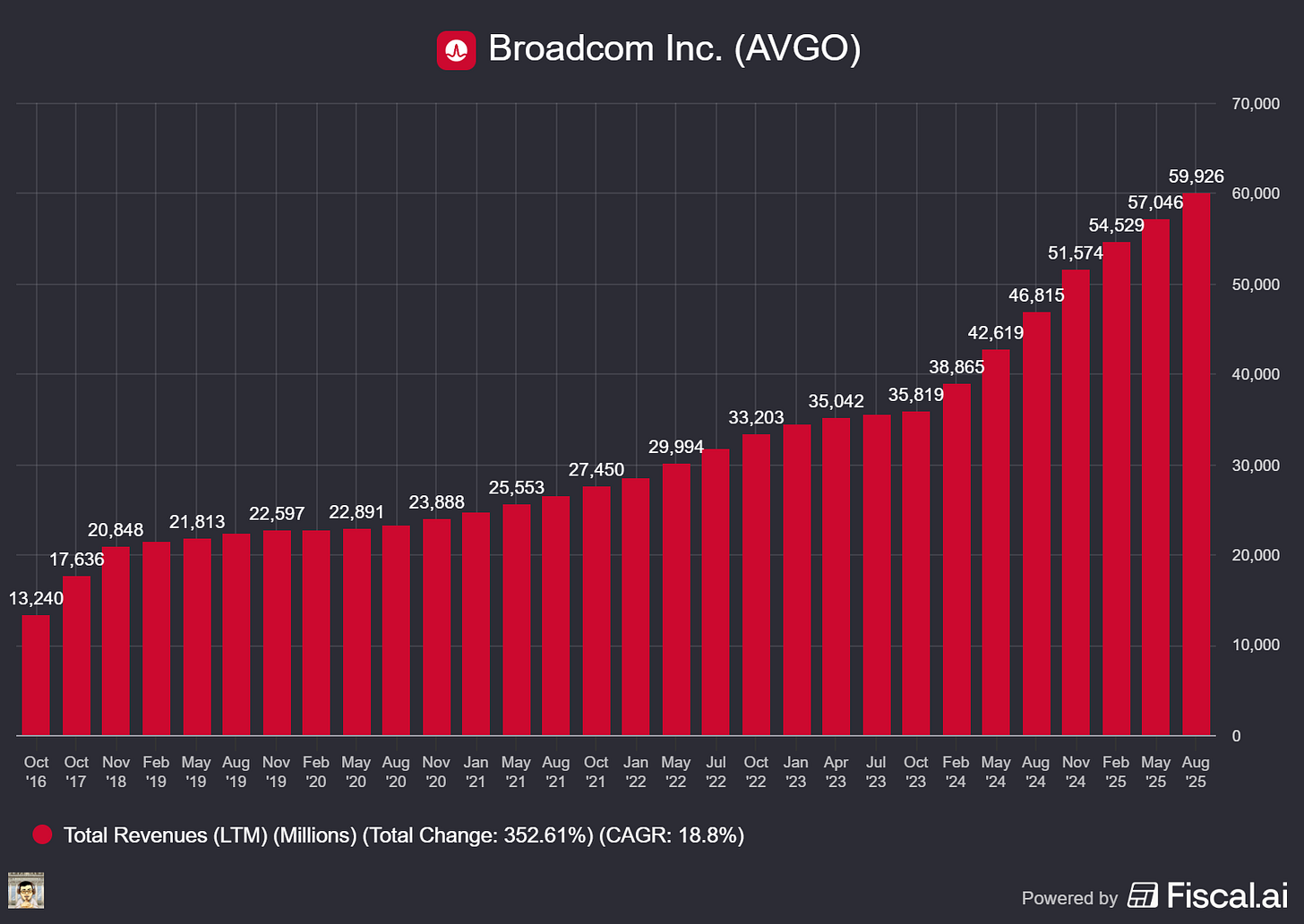

What The Chip: On October 13, 2025, OpenAI capped a three‑deal run to secure ~26 gigawatts (GW) of AI compute by 2029: 10 GW of custom inference silicon with Broadcom (announced today), 10 GW of NVIDIA systems backed by a $100 billion NVIDIA investment (Sept 22), and 6 GW of AMD GPUs tied to an equity warrant (Oct 6). The strategy shifts OpenAI from a pure software shop to a vertically integrated AI infrastructure operator.

Details:

🔧 Broadcom = custom chips + Ethernet at scale. OpenAI will co‑design and deploy 10 GW of OpenAI‑designed accelerators with Broadcom’s end‑to‑end Ethernet stack, with first racks arriving 2H26 and full rollout by end‑2029. As CEO Sam Altman put it, “Partnering with Broadcom is a critical step in building the infrastructure needed to unlock AI’s potential.” Broadcom CEO Hock Tan called it “a pivotal moment” as the companies co‑develop and deploy 10 GW of next‑gen accelerators and networks. OpenAI also disclosed “over 800 million weekly active users.”

🌐 Why Broadcom’s network matters. The systems lean on Broadcom’s Tomahawk switches and Jericho fabric to scale standards‑based Ethernet clusters far beyond a single GPU box. Jericho‑class silicon has been positioned to stitch hundreds of thousands to ~1 million accelerators across sites; Tomahawk Ultra/6 push radix and bandwidth for rack/cluster scale‑out. By contrast, NVIDIA’s NVLink domain tops out at 72 GPUs for all‑to‑all coupling—great for tight training, less ideal for internet‑scale inference sprawl.

🟩 NVIDIA = capital + compute. Under a letter of intent, OpenAI will deploy at least 10 GW of NVIDIA systems, starting 2H26 on the Vera Rubin platform; NVIDIA intends to invest up to $100 billion in OpenAI as each GW comes online. Jensen Huang called it “the biggest AI infrastructure project in history.” Importantly, OpenAI buys directly from NVIDIA for the first time (not via a cloud intermediary), giving OpenAI more control over cost and timing.

🟥 AMD = equity‑for‑access. OpenAI will deploy 6 GW of AMD Instinct GPUs over multiple generations, beginning with MI450 in 2H26. AMD granted OpenAI a warrant for up to 160 million shares (~10%), vesting on deployment, stock‑price, and technical milestones—directly aligning OpenAI’s financial upside with AMD’s success. AMD CFO Jean Hu framed the deal as “tens of billions of dollars in revenue” and accretive to EPS. Context: AMD’s MI300X has been powering Azure OpenAI Service since 2024, with Microsoft citing leading price/performance on GPT inference.

📊 Economics: enormous scale, real trade‑offs. Reuters pegs total all‑in data‑center costs at $50–$60 billion per GW; Broadcom’s custom route aims to lower unit inference cost versus off‑the‑shelf GPUs. At the same time, critics flag a “circular” AI economy—NVIDIA invests so OpenAI can buy NVIDIA; AMD gives OpenAI equity so OpenAI buys AMD—stoking bubble questions. Meanwhile, OpenAI disclosed 700 million weekly users in Sept (NVIDIA LOI) and 800 million today, but press reports show ~$5 billion 2024 losses on ~$4 billion revenue and a 2025 revenue outlook of ~$11.6–$12.7 billion. Execution must close the cash‑flow gap.

⚠️ Policy headwinds. New tariff threats (e.g., proposed 100% U.S. tariffs on China goods) and evolving AI chip export controls add supply‑chain and pricing risk for components (optics, substrates, HBM). These could raise TCO or delay deployments across 2026–2029.
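To put the scale in one place, the sketch below totals the three commitments and applies the Reuters per‑GW cost range quoted above. Extending that range to all 26 GW is an assumption made here for illustration; the article does not state an all‑in total, and actual costs will vary by vendor and site.

```python
# Committed compute by partner, in gigawatts, as described above.
committed_gw = {"Broadcom": 10, "NVIDIA": 10, "AMD": 6}

# Reuters' all-in data-center cost estimate, in USD billions per GW.
# Applying it uniformly to every deal is an illustrative assumption.
COST_PER_GW_LOW_B = 50
COST_PER_GW_HIGH_B = 60

total_gw = sum(committed_gw.values())
implied_cost_low_b = total_gw * COST_PER_GW_LOW_B
implied_cost_high_b = total_gw * COST_PER_GW_HIGH_B

print(f"Total committed compute: {total_gw} GW")
print(
    f"Implied all-in build-out: ${implied_cost_low_b / 1000:.2f}T "
    f"to ${implied_cost_high_b / 1000:.2f}T"
)
```

Set against a 2025 revenue outlook of roughly $11.6–$12.7 billion, an implied build‑out above $1 trillion shows why the financing structure of these deals draws so much scrutiny.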

Why AI/Semiconductor Investors Should Care

OpenAI just created a three‑lane moat around compute. If it works, OpenAI improves unit economics (custom chips + vendor leverage), reduces single‑supplier risk, and keeps pace with Big Tech peers building in‑house silicon. For investors:

NVDA gains demand certainty and equity exposure; the direct‑buy model should deepen wallet share even as it funds a key customer.

AMD gets a flagship anchor customer and strategic alignment via the warrant—powerful, but it raises execution risk (deliver 6 GW on time, scale software and networking).

AVGO monetizes both custom accelerators and AI Ethernet/optics, riding the secular pivot from proprietary fabrics to open, huge‑scale networks.

The bear case: circular financing and huge cash burn could unravel if AI monetization lags or policy shocks hit supply chains; first‑gen custom silicon often needs iterations. The bull case: 800M weekly users, enterprise adoption, and lower inference cost curves compound into sustainable margins by the time 2H26 deployments come online. Today’s moves don’t just buy GPUs; they buy optionality across the full compute stack.

YouTube Channel - Jose Najarro Stocks

X Account - @_Josenajarro


Disclaimer: This article is intended for educational and informational purposes only and should not be construed as investment advice. Always conduct your own research and consult with a qualified financial advisor before making any investment decisions.

The overview above provides key insights every investor should know, but subscribing to the premium tier unlocks deeper analysis to support your Semiconductor, AI, and Software journey. Behind the paywall, you’ll gain access to in-depth breakdowns of earnings reports, keynotes, and investor conferences across semiconductor, AI, and software companies. With multiple deep dives published weekly, it’s the ultimate resource for staying ahead in the market. Support the newsletter and elevate your investing expertise—subscribe today!

[Paid Subscribers] Aehr Test Systems Starts Fiscal 2026: AI Buzz Builds, Margins Take a Hit

Date of Event: October 6, 2025

Executive Summary

*Reminder: We do not discuss valuations; this is only an analysis of the earnings reports and conferences.

When you think of the AI gold rush, chipmakers like NVIDIA and AMD probably spring to mind first. But behind the scenes, companies like Aehr Test Systems (NASDAQ: AEHR) play a crucial role, supplying the equipment that stress-tests semiconductors so they survive the intensity of modern AI workloads. Aehr kicked off fiscal 2026 (first quarter ended August 29, 2025) with mixed financial results but plenty of optimism for future growth.

Financial Snapshot

Revenue landed at $11.0 million, a 16% drop from last year’s $13.1 million. On a GAAP basis, Aehr posted a net loss of $2.1 million, or $0.07 per diluted share—a swing from the $0.7 million profit ($0.02 per share) in the same period last year. Non-GAAP earnings (which exclude things like stock-based compensation and restructuring costs) were just above breakeven at $0.2 million ($0.01 per share), significantly lower than last year’s $2.2 million ($0.07 per share).

On a brighter note, bookings hit $11.4 million, nudging past quarterly revenue and growing the backlog to $15.5 million. Including orders received early in Q2, effective backlog rises to $17.5 million. Aehr closed the quarter with a solid cash cushion of $24.7 million and no debt.

CEO Gayn Erickson put it plainly: “We finished ahead of street consensus for both revenue and our bottom line.” Translation: it wasn’t a perfect quarter, but it was better than Wall Street anticipated.

Riding the AI Wave

Aehr’s real story is the buzz building around its AI-driven products. The company’s new Sonoma systems, designed for packaged-part burn-in (think of this as quality control on steroids), continue to attract big players in the AI space. One “world-leading hyperscaler” (likely a major cloud giant) placed multiple follow-on volume production orders and asked for shorter lead times to keep up with higher-than-expected volumes of its own cutting-edge AI processors.