Micron’s AI Profits Surge, CoreWeave Rides NVIDIA’s $100B Wave, and OpenAI Expands Stargate Mega‑Build

Welcome, AI & Semiconductor Investors,

Did AI memory just become the hottest semiconductor segment? Micron’s record-breaking quarter and bullish guidance point to a booming AI-driven memory market, while analysts lift their CoreWeave price targets on the heels of NVIDIA’s up-to-$100 billion OpenAI commitment. Meanwhile, OpenAI’s Stargate initiative accelerates toward a colossal 10-gigawatt milestone, raising the stakes (and rewards) for semiconductor investors. Let’s Chip In.

What The Chip Happened?

🚀 Micron: AI Memory Boom Prints Records — and Guides Gross Margin Above 50%

📈 Street Targets Jump on CoreWeave Thanks to “10‑GW” Tailwind and NVIDIA Backstop

🎛️ OpenAI’s ‘Stargate’ Adds 5 U.S. Sites—24 Hours After Nvidia’s $100B Pledge

[Micron’s Record FY25: Data Center Mix Lifts Margins Above 50%]

Read time: 7 minutes

Get 15% OFF FISCAL.AI — ALL CHARTS ARE FROM FISCAL.AI —

Micron Technology (NASDAQ: MU)

🚀 Micron: AI Memory Boom Prints Records and Guides Gross Margin Above 50%

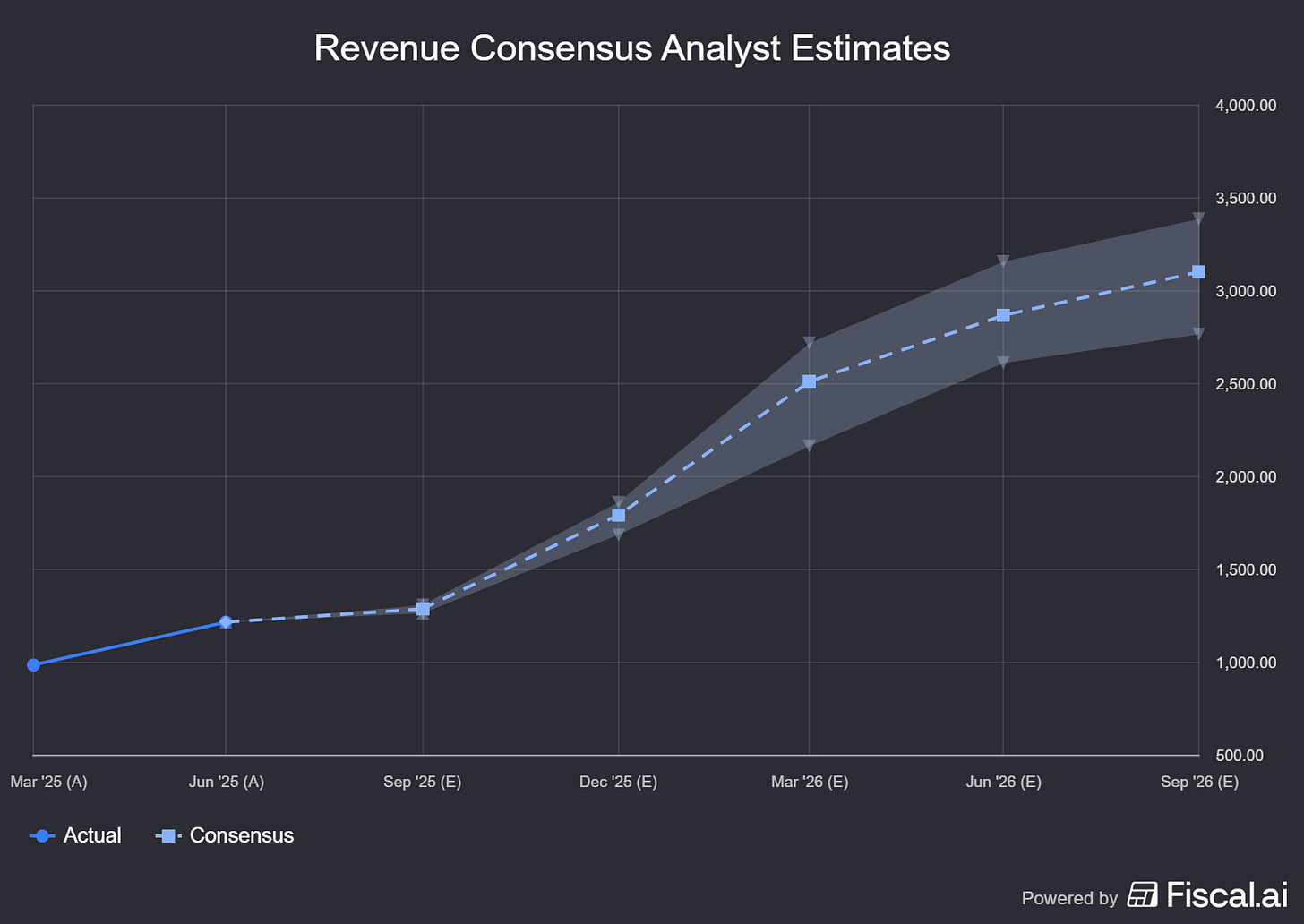

What The Chip: On September 23, 2025, Micron reported record fiscal Q4 and full‑year results as AI data‑center demand surged. Management guided FQ1’26 revenue to $12.5B ± $300M with non‑GAAP gross margin of 51.5% ± 1.0%, implying ~$1.2B of sequential growth and continued pricing/mix tailwinds (source: Micron press release and earnings call, Sept. 23).
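For readers who want to check the "~$1.2B sequential growth" math, here is a minimal back-of-envelope sketch. It only uses the figures quoted above (FQ4 revenue of $11.32B and the FQ1’26 guide); the function name `guidance_summary` is a hypothetical helper for illustration, not anything Micron publishes.

```python
# Back-of-envelope check of the FQ1'26 guide against FQ4'25 actuals.
# Inputs are the figures quoted above: revenue in USD billions, margins in percent.

def guidance_summary(prior_rev_b, guide_mid_b, rev_band_b, gm_mid_pct, gm_band_pct):
    """Return the midpoint step-up and the low/high ends of the guided ranges."""
    step_up_b = guide_mid_b - prior_rev_b
    return {
        "step_up_b": round(step_up_b, 2),
        "step_up_pct": round(step_up_b / prior_rev_b * 100, 1),
        "revenue_range_b": (round(guide_mid_b - rev_band_b, 2), round(guide_mid_b + rev_band_b, 2)),
        "gm_range_pct": (round(gm_mid_pct - gm_band_pct, 1), round(gm_mid_pct + gm_band_pct, 1)),
    }

summary = guidance_summary(prior_rev_b=11.32, guide_mid_b=12.5, rev_band_b=0.3,
                           gm_mid_pct=51.5, gm_band_pct=1.0)
print(summary)
```

The midpoint step-up works out to about $1.18B (roughly 10.4% sequential growth), and even the low end of the guided gross-margin range (50.5%) sits above 50%.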

Details:

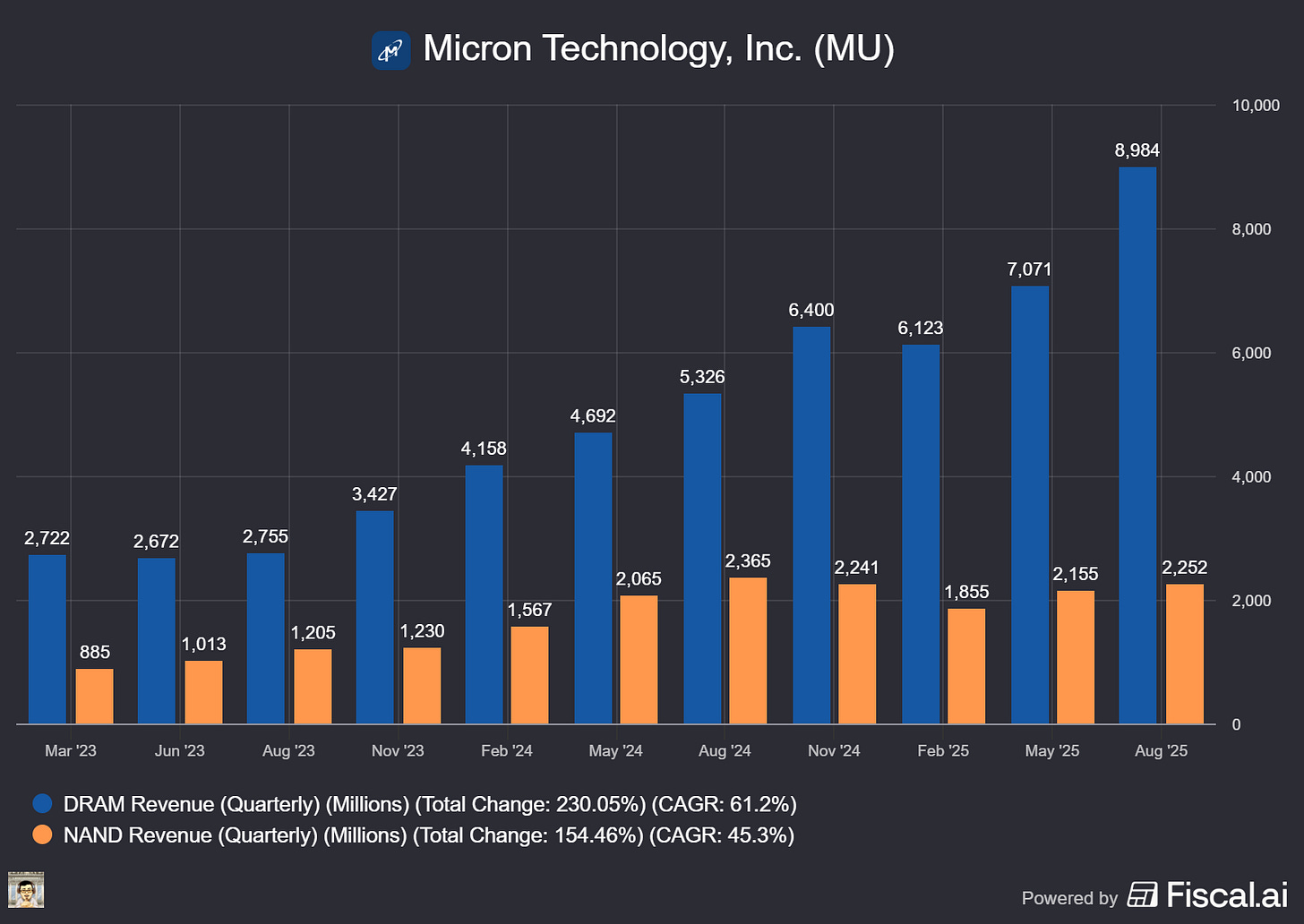

📈 Records across the board. FQ4 revenue $11.32B (+22% q/q, +46% y/y); non‑GAAP GM 45.7%; non‑GAAP EPS $3.03. For FY25: revenue $37.38B (+49% y/y), non‑GAAP GM 40.9%, EPS $8.29. CEO Sanjay Mehrotra called it a “record‑breaking fiscal year… entering FY26 with strong momentum.”

🧠 AI data center = profit engine. Data center reached 56% of company revenue in FY25 with ~52% GM. HBM (high‑bandwidth, stacked DRAM that sits close to the GPU to maximize memory bandwidth) hit nearly $2B in Q4 revenue (~$8B annualized run‑rate). Micron now has 6 HBM customers and has pricing agreements for most HBM3E 2026 supply; HBM share is on track to match overall DRAM share by CQ3’25 and grow in 2026.

⚙️ Tech execution & roadmap. 1‑gamma DRAM (next‑gen node for denser, more power‑efficient DRAM) reached mature yields 50% faster than the prior node and shipped first hyperscale revenue. HBM4 samples show >2.8 TB/s bandwidth and >11 Gb/s pin speeds; HBM4E (2027) will offer custom base‑logic dies via a TSMC partnership (higher‑margin options). G9 NAND (Micron’s latest 3D NAND) ramp continues, including first‑to‑market PCIe Gen6 data‑center SSDs.

🔌 Broader mix getting healthier. LPDDR5 for servers (low‑power DRAM adapted to servers to cut energy/heat) grew >50% q/q, and Micron says it is the sole LPDRAM supplier to NVIDIA’s current GB‑family data‑center platforms. GDDR7 (graphics DRAM tuned for high‑speed inference accelerators) is positioned for future AI systems.

🧮 Guidance = pricing power. FQ1’26 guide: $12.5B revenue midpoint, 51.5% non‑GAAP GM, $3.75 EPS. CFO Mark Murphy said pricing, mix, and cost reductions drive the step‑up and that gross margin should rise again in fiscal Q2.

🏭 Capacity & CHIPS update. FY25 net CapEx $13.8B; FY26 plan ~$18B (net), majority DRAM (1‑gamma ramps and new greenfield). Idaho fab received a CHIPS disbursement; first wafers 2H’27. Japan fab installed its first EUV tool for 1‑gamma (installation time was a company record). HBM assembly/test capacity continues to expand (Singapore adds supply from 2027).

💾 NAND improving from a low base. While NAND (storage) is still below prior‑cycle profitability, mix is shifting to high‑capacity data‑center SSDs (think 122TB/245TB class drives) as HDD shortages at hyperscalers push faster SSD adoption. Micron exited future mobile managed NAND to reallocate capital to higher‑ROI data‑center storage and DRAM.

💵 Cash & returns. Q4 operating cash flow $5.73B; Q4 FCF $803M; FY25 FCF $3.72B. Cash and investments $11.94B; debt $14.6B (weighted‑average maturity 2033). Quarterly dividend $0.115/share (payable Oct 21, 2025, to shareholders of record Oct 3).
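A few derived figures help put the details above in dollar terms. This is a rough sketch under stated assumptions: a run-rate is simply the latest quarter times four, and free cash flow is treated as operating cash flow minus net capex, so OCF minus FCF approximates net capex. The FY25 operating cash flow of $17.53B comes from Micron’s release; all helper names are hypothetical.

```python
# Back-of-envelope figures derived from the Micron details above (USD billions).
# Assumptions: run-rate = latest quarter x 4; FCF = operating cash flow - net capex,
# so OCF - FCF approximates net capex.

def annualized_run_rate(quarter_b):
    return quarter_b * 4

def segment_dollars(total_b, share):
    return total_b * share

def implied_net_capex(ocf_b, fcf_b):
    return ocf_b - fcf_b

def net_debt(debt_b, cash_b):
    # Positive means debt exceeds cash and investments.
    return debt_b - cash_b

hbm_run_rate = annualized_run_rate(2.0)          # "nearly $2B" in FQ4, so an upper-end figure
dc_revenue_fy25 = segment_dollars(37.38, 0.56)   # data center = 56% of FY25 revenue
q4_net_capex = implied_net_capex(5.73, 0.803)    # Q4 OCF minus Q4 FCF
fy25_net_capex = implied_net_capex(17.53, 3.72)  # FY25 OCF minus FY25 FCF
leverage = net_debt(14.6, 11.94)

print(f"HBM run-rate:         ~${hbm_run_rate:.0f}B")
print(f"FY25 data center rev: ~${dc_revenue_fy25:.1f}B")
print(f"Implied Q4 net capex: ~${q4_net_capex:.2f}B")
print(f"Implied FY25 capex:   ~${fy25_net_capex:.2f}B")
print(f"Net debt:             ~${leverage:.2f}B")
```

The implied FY25 figure (~$13.81B) lines up with the ~$13.8B net CapEx Micron reported, which is a useful sanity check on the assumed FCF definition.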

Why AI/Semiconductor Investors Should Care

Micron is demonstrating pricing power and mix uplift as AI‑centric memory (HBM, LPDDR5 server memory, high‑cap DDR5) becomes a larger share of revenue, hence the >50% GM guide. Tight DRAM supply into 2026, disciplined NAND supply, and a credible HBM4 performance lead support durable margins and free‑cash‑flow growth.

Key watch‑outs: a bigger FY26 CapEx (~$18B net) raises execution/ROI stakes; Section 232 semiconductor tariffs are not in guidance; and the company must balance HBM vs. non‑HBM output while ramping 1‑gamma and new U.S./Japan capacity on time. If Micron hits on execution, the setup favors continued share gain in AI memory and through‑cycle margin expansion.


CoreWeave (NASDAQ: CRWV)

📈 Street Targets Jump on CoreWeave Thanks to “10‑GW” Tailwind and NVIDIA Backstop

What The Chip: Between Sep 18 and Sep 24, 2025, four firms upgraded, raised targets on, or initiated coverage of CoreWeave: Wells Fargo to $170, Melius to $165, Loop Capital initiating at $165, and Citizens JMP at $180. They cited a longer, larger AI compute cycle; NVIDIA’s up‑to‑$100B LOI with OpenAI (10 GW); a new $6.3B take‑or‑pay capacity agreement with NVIDIA; and OpenAI’s contract expansion to ~$15.9B. Together, these moves de‑risk utilization and support a faster power/GPU build‑out.

Details:

💡 The rating actions (Sep 18–24):

• Wells Fargo (Michael Turrin) → Overweight, $170 (from $105): “demand too strong to ignore,” with an elevated build cycle and supply tightness persisting into 2026.

• Melius (Ben Reitzes) → Buy, $165 (from $128): frames valuation around ~23× 2027E EBIT and sees CoreWeave well‑positioned as AI cloud demand accelerates.

• Loop Capital (Ananda Baruah) → Initiate Buy, $165: calls CoreWeave a leading “neocloud” with “material P&L upside to Street.”

• Citizens JMP (Greg Miller) → Upgrade to Outperform, $180: bullish on GPU‑as‑a‑Service momentum and order visibility.

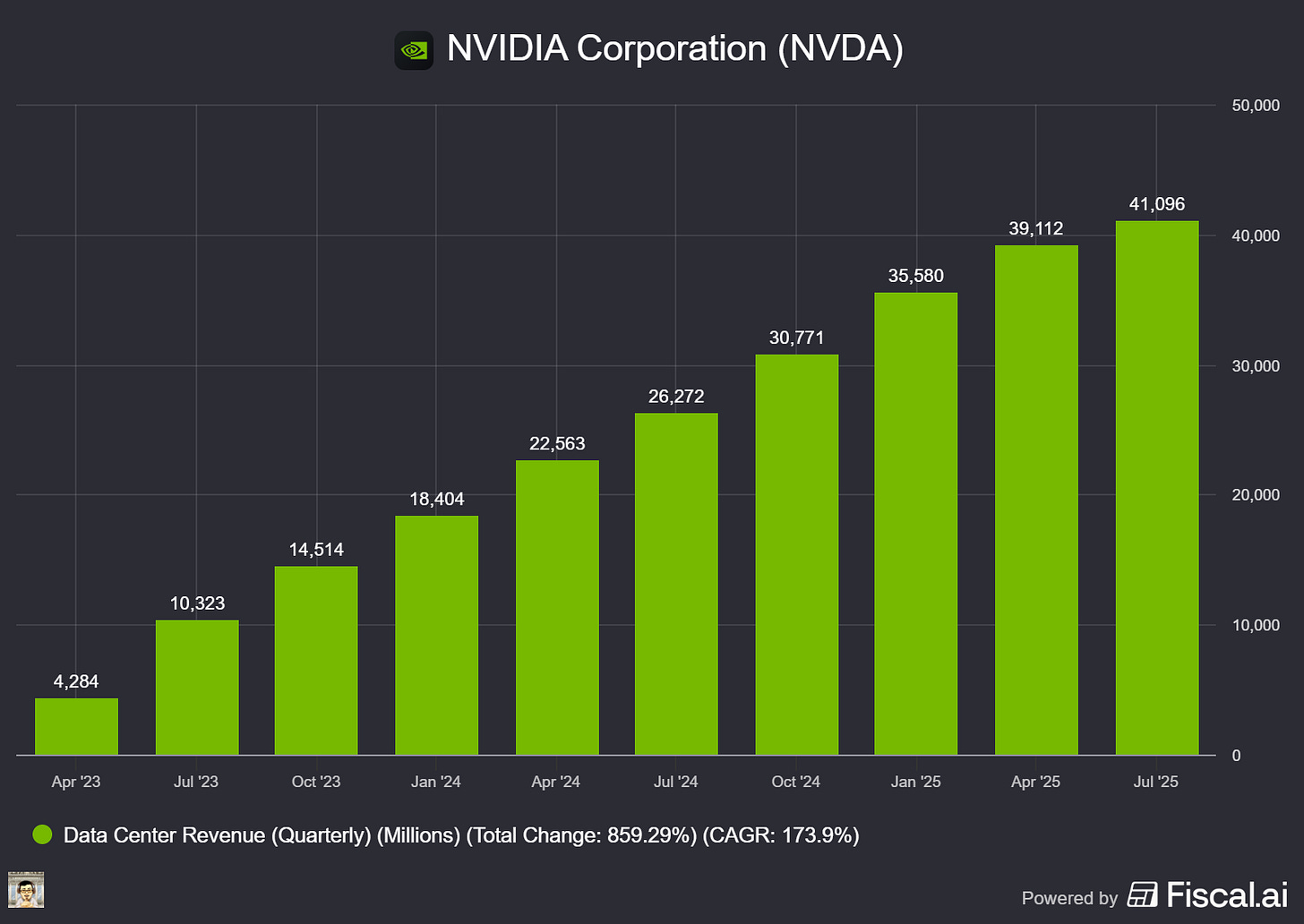

🧭 The 10-GW anchor: NVIDIA and OpenAI have signed a letter of intent to deploy at least 10 GW of NVIDIA systems, with up to $100 billion in staged investment by NVIDIA as capacity comes online. This is a multi-year demand signal for GPUs and adjacent AI clouds.

🛡️ Downside buffer: On Sep 15, NVIDIA agreed to buy CoreWeave’s residual unsold capacity through Apr 13, 2032 (initial value $6.3B), improving financing confidence and reducing utilization risk.

🤝 OpenAI demand already contracted (and growing): The $11.9B, five‑year OpenAI contract (Mar 10) was expanded by up to $4B in Q2 (through Apr 2029), taking the total potential to ~$15.9B.

⚡ Power positioning: Q2 snapshot—~470 MW active, ~2.2 GW contracted, and >900 MW targeted by year‑end. The pending Core Scientific all‑stock acquisition would add ~1.3 GW gross power plus 1+ GW of expansion potential—directly addressing the industry’s power bottleneck.

💬 Management voice: CEO Michael Intrator: “We are scaling rapidly to meet the unprecedented demand for AI…becoming the first to offer the complete Blackwell GPU portfolio at scale.” (Q2 press release).

🧾 Backlog foundation: At IPO, CoreWeave disclosed $15.1B of remaining performance obligations (RPO) as of Dec 31, 2024, largely multi‑year take‑or‑pay contracts that give visibility as capacity ramps. Q2 revenue came in at ~$1.21B.
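The contract and power figures above reduce to a few simple ratios. The sketch below is illustrative only: it treats the "up to $4B" expansion as a ceiling and uses 900 MW as the floor of the ">900 MW" year-end target; the constant and function names are hypothetical.

```python
# Simple arithmetic on the CoreWeave figures quoted above.
# Units: USD billions for contracts, megawatts (MW) for power.

OPENAI_BASE_B = 11.9        # five-year contract signed Mar 10
OPENAI_EXPANSION_B = 4.0    # "up to" $4B, so the total is a ceiling
ACTIVE_MW = 470             # Q2 active power
CONTRACTED_MW = 2_200       # ~2.2 GW contracted
YEAR_END_TARGET_MW = 900    # ">900 MW" targeted; 900 is used as the floor

def share_of_contracted(mw, contracted_mw=CONTRACTED_MW):
    """Fraction of contracted power represented by `mw`."""
    return mw / contracted_mw

openai_total_b = OPENAI_BASE_B + OPENAI_EXPANSION_B
mw_to_add = YEAR_END_TARGET_MW - ACTIVE_MW

print(f"OpenAI contract potential: ~${openai_total_b:.1f}B")
print(f"Active at Q2: {share_of_contracted(ACTIVE_MW):.0%} of contracted power")
print(f"Year-end target: {share_of_contracted(YEAR_END_TARGET_MW):.0%} of contracted power")
print(f"MW to activate before year-end: at least {mw_to_add} MW")
```

Roughly a fifth of contracted power was active at the Q2 snapshot, which is why the pace of activation matters as much as the contract headlines.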

Why AI/Semiconductor Investors Should Care

These calls cluster around one idea: the AI compute super‑cycle just extended in size and duration, and CoreWeave has de‑risked a rapid capacity ramp via

(1) contract‑anchored demand (OpenAI $15.9B potential),

(2) a hard downside backstop (NVIDIA $6.3B take‑or‑pay through 2032), and

(3) power access at scale (Core Scientific ~1.3 GW).

That combination supports faster activation of power + GPUs—critical in a market where chips and electricity are the scarce commodities. Near‑term watch items: Q3 (projected ~Nov 11–12) for updates on active vs. contracted power, backlog, and utilization, plus any offtakes tied to the NVIDIA–OpenAI 10‑GW plan. Execution on power timelines and financing cadence will likely determine whether shares move toward the $165–$180 band highlighted this week.

Nvidia (NASDAQ: NVDA)

🎛️ OpenAI’s ‘Stargate’ Adds 5 U.S. Sites—24 Hours After Nvidia’s $100B Pledge

What The Chip: On September 23, 2025, OpenAI, Oracle, and SoftBank announced five new U.S. data‑center sites under “Stargate,” lifting planned capacity to ~7 GW and >$400B in build over three years—putting the group on track to secure the full $500B / 10‑GW commitment by year‑end. The move landed a day after Nvidia and OpenAI signed a letter of intent to deploy 10 GW of NVIDIA systems, with NVDA intending to invest up to $100B as capacity comes online.

Details:

📍 Where & how big: Oracle is developing three of the new sites (Shackelford County, TX; Doña Ana County, NM; and an undisclosed Midwest location), while SoftBank is developing two (Lordstown, OH and Milam County, TX). Combined with the Abilene flagship and ongoing CoreWeave projects, Stargate now plans ~7 GW and >$400B over three years. The three Oracle sites plus a potential 600 MW expansion near Abilene can deliver >5.5 GW and ~25,000 onsite jobs.

🧰 Hardware already flowing: A portion of Abilene is running on Oracle Cloud Infrastructure, after OCI delivered the first NVIDIA GB200 racks in June; OpenAI says it has already started early training and inference on this new capacity.

🤝 SoftBank’s two fast builds: Lordstown, OH broke ground and targets operations next year, while Milam County, TX (with SB Energy) is designed for rapid, powered build‑out. Together, SoftBank’s two sites can scale to ~1.5 GW in ~18 months.

💸 The NVDA tie‑in (Sept 22): OpenAI–Nvidia LOI to deploy 10 GW of NVIDIA systems and NVDA’s intent to invest up to $100B progressively as each GW is deployed; the first GW aims to come online in 2H26 on Nvidia’s Vera Rubin platform. Jensen Huang (Nvidia CEO) called it the “next leap forward.”

🧷 Financing stack: OpenAI is exploring debt to lease chips and leveraging the Nvidia deal to lower financing costs, per financial press. That helps de‑risk capex while locking in supply.

🔌 Power is the bottleneck: Oracle+OpenAI say their 5.5 GW tranche alone equals roughly double San Francisco’s electricity use, underscoring why power and grid interconnects—not just chips—will gate rollout speed.

🗣️ Voices: Sam Altman (OpenAI CEO) says “compute is the key to ensuring everyone can benefit from AI.” Clay Magouyrk (Oracle executive who leads OCI) touts “reliable, scalable” infrastructure that can meet demand. Masayoshi Son (SoftBank Chairman & CEO) frames Stargate as paving the way for “a new era” with OpenAI and Arm.
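To see how the staged NVIDIA investment and the Stargate pipeline scale, here is a rough sketch. Even staging is an assumption: the LOI only says NVIDIA intends to invest progressively as each GW is deployed, and "up to $100B" is treated as a ceiling. The Stargate ratios compare the ~7 GW and >$400B planned against the separate $500B / 10‑GW goal. All names are hypothetical.

```python
# Hypothetical even staging of NVIDIA's "up to $100B" across the 10-GW LOI,
# plus Stargate's planned capacity and spend versus its $500B / 10-GW goal.
# Even staging is an assumption; the LOI only says investment comes progressively.

NVDA_MAX_INVESTMENT_B = 100
LOI_GW = 10

def cumulative_nvda_investment(gw_deployed):
    """Ceiling on cumulative investment after gw_deployed GW, assuming equal tranches."""
    gw = min(max(gw_deployed, 0), LOI_GW)
    return NVDA_MAX_INVESTMENT_B * gw / LOI_GW

def progress(planned, goal):
    """Share of a goal already covered by planned capacity or spend."""
    return planned / goal

for gw in (1, 5, 10):
    print(f"{gw:>2} GW deployed -> up to ${cumulative_nvda_investment(gw):.0f}B")

print(f"Stargate capacity planned: {progress(7, 10):.0%} of 10 GW")
print(f"Stargate spend planned:    {progress(400, 500):.0%} of $500B")
```

Under the even-staging assumption, each GW brought online would unlock up to ~$10B, so the first GW slated for 2H26 would be a relatively small slice of the headline figure.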

Why AI/Semiconductor Investors Should Care

For NVDA, the back‑to‑back announcements crystallize a multi‑year demand pipeline: 10 GW of NVIDIA systems (millions of GPUs over time) paired with accelerating Stargate capacity supports continued strength in data center GPUs, networking, and software through at least 2026–2027.

For Oracle (NYSE: ORCL), the Abilene ramp and 4.5‑GW July agreement point to a swelling OCI backlog and AI cloud revenue leverage; for SoftBank (TYO: 9984 / SFTBY), fast‑build data‑center design plus SB Energy offers vertical integration and optionality around Arm.

Watch risks: power, permitting, HBM memory, and supply‑chain constraints, plus the possibility of overbuild if AI monetization lags the infrastructure curve. Still, the NVDA+OpenAI capital structure and the Stargate site pipeline (>$400B planned over three years) suggest near‑term visibility into hundreds of billions of dollars in AI capex and an expanding moat for the platforms that can ship hardware and power it.

Youtube Channel - Jose Najarro Stocks

X Account - @_Josenajarro


Disclaimer: This article is intended for educational and informational purposes only and should not be construed as investment advice. Always conduct your own research and consult with a qualified financial advisor before making any investment decisions.

The overview above provides key insights every investor should know, but subscribing to the premium tier unlocks deeper analysis to support your Semiconductor, AI, and Software journey. Behind the paywall, you’ll gain access to in-depth breakdowns of earnings reports, keynotes, and investor conferences across semiconductor, AI, and software companies. With multiple deep dives published weekly, it’s the ultimate resource for staying ahead in the market. Support the newsletter and elevate your investing expertise—subscribe today!

[Paid Subscribers] Micron’s Record FY25: Data Center Mix Lifts Margins Above 50%

Date of Event: September 23, 2025

Executive Summary

*Reminder: We do not discuss valuations; this is an analysis of the earnings call and conference commentary only.

Micron Technology (Nasdaq: MU) closed fiscal 2025 with record results and a stronger-than-expected fourth quarter, powered by artificial intelligence (AI) demand in the data center. Fiscal Q4 revenue rose to $11.32 billion (up 22% sequentially and 46% year over year), non‑GAAP gross margin expanded to 45.7%, and non‑GAAP diluted EPS reached $3.03. For the full year, Micron delivered $37.38 billion in revenue (up 49%), 40.9% non‑GAAP gross margin, and $8.29 in non‑GAAP EPS. Operating cash flow hit $5.73 billion in Q4 and $17.53 billion for FY25.

Management guided to FQ1‑26 revenue of $12.5 billion ±$300 million, with non‑GAAP gross margin of 51.5% ±1.0% and non‑GAAP EPS of $3.75 ±$0.15—implying roughly $1.2 billion of sequential revenue growth and crossing the 50% gross‑margin threshold. “Micron closed out a record‑breaking fiscal year with exceptional Q4 performance,” CEO Sanjay Mehrotra said, adding that as “the only U.S.-based memory manufacturer, Micron is uniquely positioned to capitalize on the AI opportunity ahead.”

Data center momentum set the tone: management said data center revenue represented 56% of company sales in FY25 with 52% gross margin, while high‑bandwidth memory (HBM) revenue reached nearly $2 billion in Q4 (an ~$8 billion annualized run rate). The company also announced a $0.115 quarterly dividend payable October 21, 2025.

Growth Opportunities

Scale in AI infrastructure. Micron sees an expanding AI build‑out across training and inference, with tight industry dynamic random‑access memory (DRAM) supply and broadening demand beyond accelerators into traditional servers. Management raised its view of calendar 2025 server unit growth to about 10% (from mid‑single digits), noting traditional servers should grow mid‑single digits as AI agents trigger more conventional workloads.