No Revenue Hole Here: Marvell Accelerates, CoreWeave Deepens OpenAI Ties, Cipher Lands Mega Google-Backed HPC Deal

Welcome, AI & Semiconductor Investors,

Marvell silenced skeptics this week, forecasting sustained growth with optics and switching businesses expected to outpace hyperscaler CapEx, ensuring there’s “no revenue hole” in sight for 2026. Meanwhile, CoreWeave and OpenAI inked their third massive deal this year, bringing total commitments to a stunning $22.4 billion and highlighting relentless AI training demand. And don’t sleep on Cipher Mining: its $3 billion Google-backed pivot from Bitcoin mining to HPC colocation sets a fresh benchmark for AI infrastructure. Let’s Chip In.

What The Chip Happened?

🚦 “No Hole” in 2026: Optics & Switches Keep Marvell’s AI Engine Running

🚀 CoreWeave + OpenAI ink another $6.5B for next‑gen model training

🤝 Cipher Mining: Google‑backed $3B AI hosting deal cements HPC pivot

[Marvell’s AI Engine: No Hole in 2026, Optics and Switch Surge]

Read time: 7 minutes

Get 15% OFF FISCAL.AI — ALL CHARTS ARE FROM FISCAL.AI —

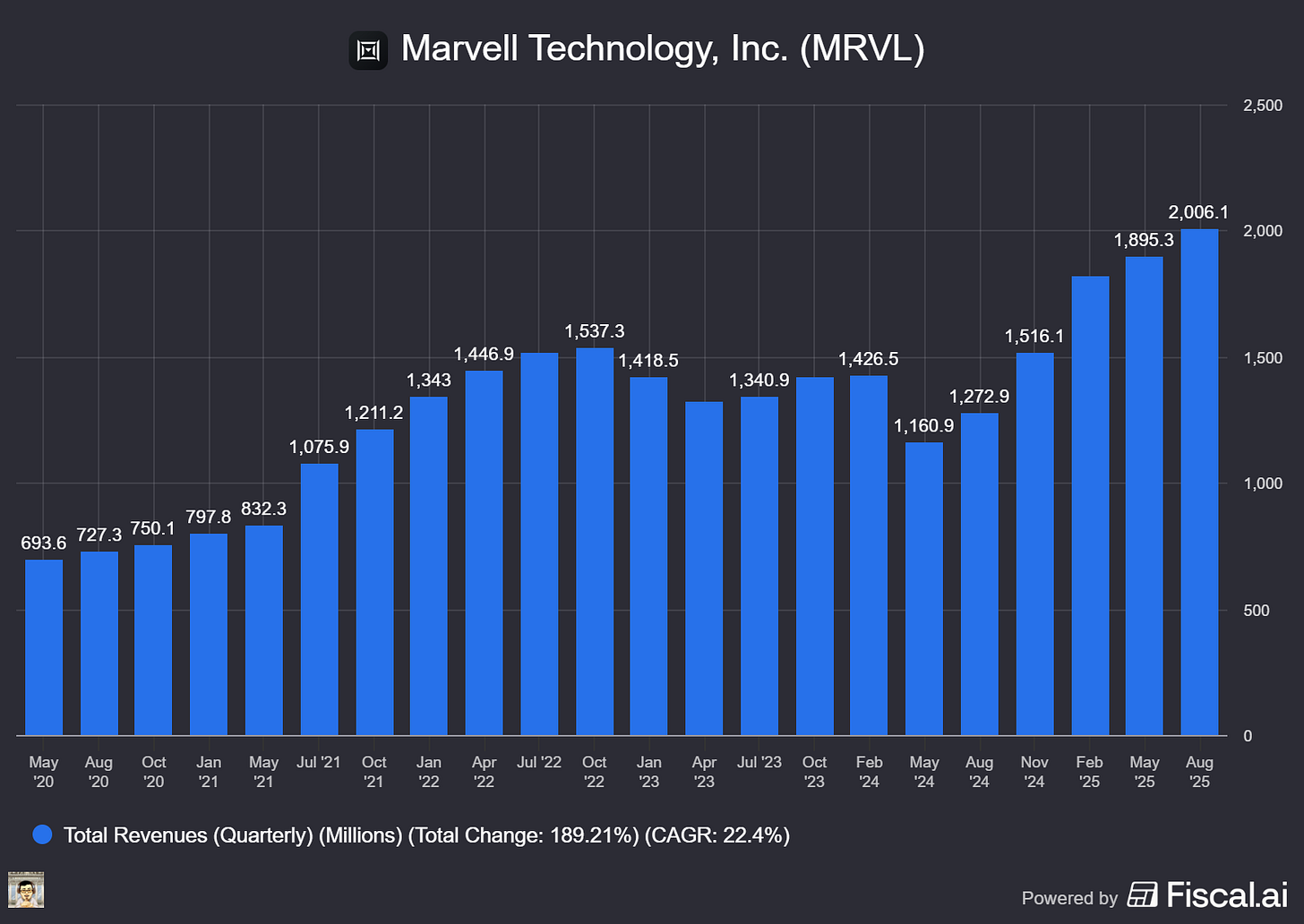

Marvell Technology (NASDAQ: MRVL)

🚦 “No Hole” in 2026: Optics & Switches Keep Marvell’s AI Engine Running

What The Chip: On September 24, 2025, CEO Matt Murphy told investors on a JPMorgan-hosted special call that Marvell “does not see a revenue hole next year” in its custom AI silicon at its lead cloud customer (Marvell signed a 5‑year agreement with AWS last December). Management set a floor for calendar 2026 data center growth by indexing it to ~18% growth in hyperscaler CapEx, with optics and switching poised to outgrow that baseline.

Details:

⚙️ Custom AI silicon: no air pocket. Murphy: “We do not see a revenue hole next year… we expect growth.” Marvell pegs its custom business at ~$1.5B this year (about 2× YoY) and, as a base case, plans for ’26 growth in line with ~18% hyperscaler CapEx growth. Any generational transitions at the lead socket are “comprehended,” meaning they are already factored into that outlook.

🤝 AWS partnership is broad—and alive. The 5‑year deal (announced last December) spans networking, custom AI silicon, and deeper EDA collaboration. Murphy said the partnership is “going extremely well,” with multiple programs in production and active collaboration on the customer’s custom AI road map.

🧩 XPU attach = second engine. Beyond full XPUs, Marvell is winning attach chips (custom NICs/SmartNICs, CXL-based memory expansion, and accelerator/security offload). These use Marvell IP and can sit beside anyone’s XPU. Management now sees bigger LTVs—some attach programs could reach ~$1B lifetime.

📚 Pipeline momentum since June AI Day. Marvell’s 18 multigenerational wins grew to 20+; the team has already closed ~10% of its $75B tracked funnel since June (i.e., ~$7.5B of LTV). Most of these wins contribute from 2028 onward, including one incremental XPU at an “emerging hyperscaler.”

🔭 Growth should accelerate in ’27–’28. Expect additional custom XPU ramps, rising XPU attach, more meaningful 1.6T optics, and 800G ZR. Murphy reiterated the company’s ambition toward ~20% data center share by 2028 and said confidence has increased since June.

🔌 Optics franchise—still the crown jewel. Data center optics is ~50% of Marvell’s DC revenue and has outgrown CapEx for years. 800G is “very strong” for ’26 and 1.6T is shipping now. Since acquiring Inphi, Marvell says its DSP business has grown ~5×, and DCI (long‑haul/coherent) has also grown ~5×. Murphy: “Do not count out this optics business.” (Layman’s terms: PAM signaling pushes short‑reach speeds inside the data center; coherent optics carry traffic across campuses/metro.)

🧠 Scale‑up inside the rack is a new leg. As servers move from NRZ to PAM signaling, Marvell’s SerDes strengths matter. AECs and Ethernet retimers are ramping now (approaching ~$100M this year, with an easy path to double near term), and PCIe retimers are sampling. Marvell is also investing in scale‑up switching (rack‑level fabrics), engaged in UALink and Ethernet efforts, and emphasizes in‑house switch + SerDes IP as a differentiator.

🧭 Switching ramps hard. After acquiring Innovium (2021), Marvell took 12.8T (Teralynx 7) to high volume, achieved first‑pass silicon at 51.2T (Teralynx 10), and is developing 100T. Revenue has already doubled from the original ~$150M baseline; as 51.2T ramps, management said the switch business could approach ~$0.5B over the next year or so.

💾 Storage back to baseline, with AI as a call option. Data‑center storage has recovered to about $200M/quarter. Fibre Channel (on‑prem) is flat to down, but AI‑driven demand for nearline HDD and enterprise SSD could add upside as inference scales and more data sets persist.

📊 Everything grows in ’26 (floor view). Custom grows ~CapEx (~18%); optics grows above CapEx; switching/other DC grows double‑digits; and communications & enterprise (including consumer at ~$300M/yr and industrial at ~$100M/yr) also grows double‑digits.

💸 Capital returns signal conviction. Marvell announced a $1B ASR and has already repurchased $300M this quarter, with a new $5B authorization after exhausting the prior $3B (approved March ’24). Free cash flow is ~$400M/quarter. Proceeds from the automotive divestiture add flexibility for opportunistic M&A—management points to the track record with Inphi, Avera, and Innovium.
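The floor math in the bullets above can be reproduced in a few lines. This is a minimal sketch using only the approximate figures quoted in this section; treating "this year" as the base period for the 18% growth rate is our simplifying assumption, and the variable and function names are illustrative.

```python
# Illustrative arithmetic only; inputs are the approximate figures quoted above.
CAPEX_GROWTH = 0.18          # ~18% hyperscaler CapEx growth baseline for calendar 2026
CUSTOM_REVENUE_B = 1.5       # ~$1.5B custom revenue this year (assumed base period)
FUNNEL_B = 75.0              # $75B tracked funnel
FUNNEL_CLOSED_SHARE = 0.10   # ~10% of the funnel closed since June


def floor_next_year(revenue_b: float, growth: float) -> float:
    """Revenue (in $B) implied by growing at exactly the baseline rate."""
    return revenue_b * (1.0 + growth)


custom_floor_b = floor_next_year(CUSTOM_REVENUE_B, CAPEX_GROWTH)
funnel_closed_b = FUNNEL_B * FUNNEL_CLOSED_SHARE

print(f"Custom silicon floor for 2026: ~${custom_floor_b:.2f}B")
print(f"Funnel closed since June: ~${funnel_closed_b:.1f}B of lifetime value")
```

Because management described the 18% figure as a floor, the custom number here is a lower bound under these assumptions, not a forecast.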

Why AI/Semiconductor Investors Should Care

Marvell’s message directly addresses the bear case for 2026: no revenue gap at its lead XPU customer and a diversified engine where optics and switching should outgrow CapEx. The attach strategy monetizes the swelling ecosystem around GPUs—networking, memory, and security offloads that improve TCO for clouds regardless of which GPU/TPU wins.

Risks remain—socket concentration, standards flux (UALink vs. Ethernet vs. PCIe), hyperscaler CapEx sensitivity, and fierce competition (e.g., Broadcom in switching/optics). But with a bigger win funnel, accelerating ’27–’28 catalysts, and stepped‑up capital returns, Marvell’s setup supports sustained AI‑data‑center share gains rather than a one‑socket story.

People mentioned: Matt Murphy (CEO/Chairman), Ashish Saran (SVP IR), Harlan Sur (JPMorgan semiconductor & semi‑cap analyst), Will Chu (heads Marvell’s custom cloud programs).

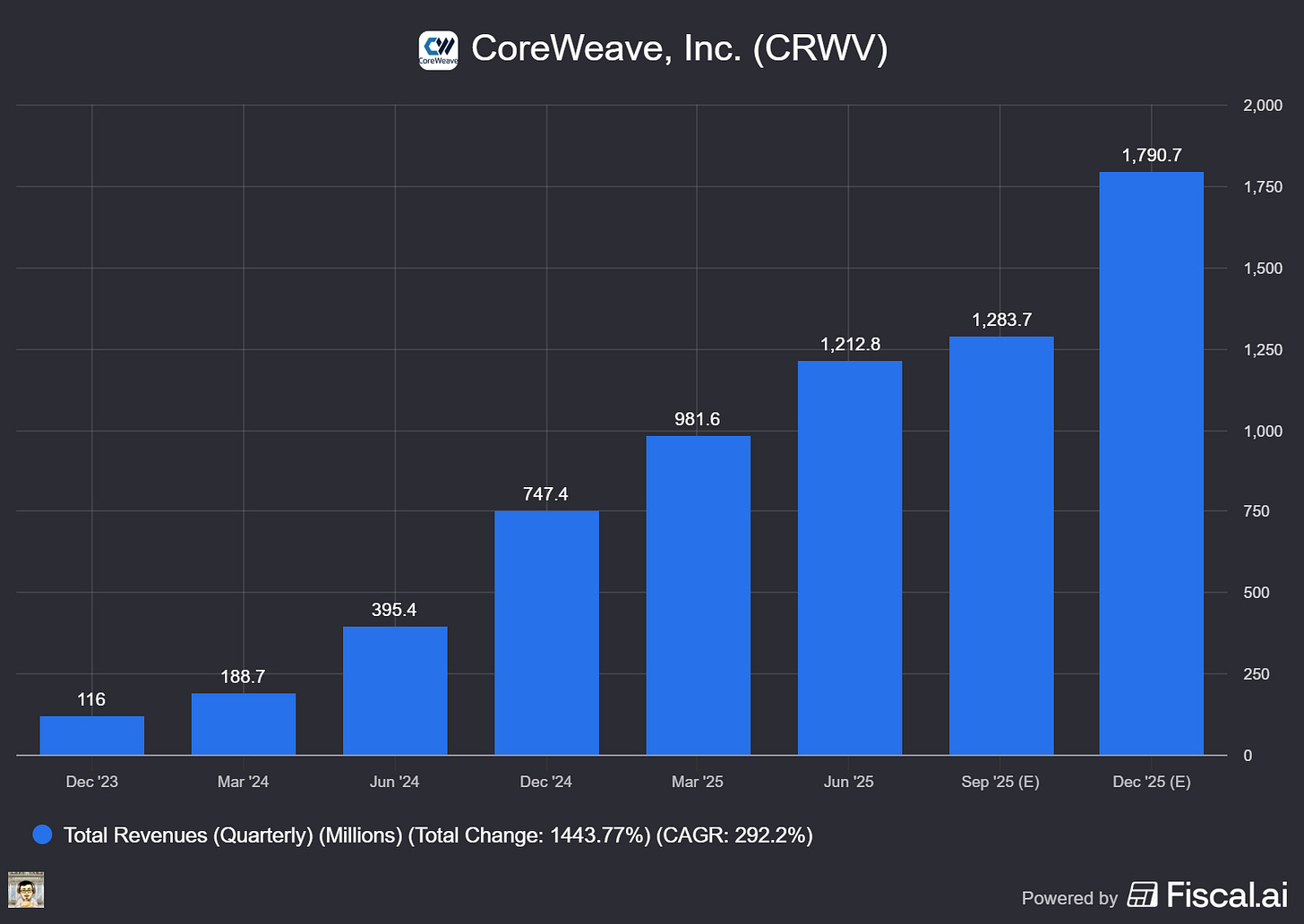

CoreWeave (NASDAQ: CRWV)

🚀 CoreWeave + OpenAI ink another $6.5B for next‑gen model training

What The Chip: On September 25, 2025, CoreWeave said OpenAI expanded its compute contract by up to $6.5B, lifting 2025’s total CoreWeave–OpenAI commitments to ~$22.4B across three deals (March $11.9B, May $4B, and now September $6.5B). The spend underwrites training for OpenAI’s “most advanced next‑generation models.”

Details:

🚨 The headline number & cadence. This is the third expansion in 2025: $11.9B (Mar 10), $4B (May 15), and $6.5B (Sep 25), taking cumulative contract value to ~$22.4B. “Up to” implies usage‑based drawdown rather than fixed take‑or‑pay revenue.

🗣️ What leadership said. CEO Michael Intrator called it “a milestone” that shows “world‑leading innovators” trust CoreWeave to power “the most demanding inference and training workloads at an unmatched pace.” OpenAI’s Peter Hoeschele (VP of Infrastructure & Industrial Compute) said CoreWeave delivers “compute at unmatched speed and scale,” helping advance “the frontier of intelligence.”

🧠 Hardware angle (why CoreWeave keeps winning). CoreWeave was the first to make NVIDIA GB200 NVL72 rack‑scale systems generally available and is running them at scale with customers like IBM, Mistral, and Cohere. That is exactly the class of infrastructure OpenAI’s next models will crave.

🏗️ Scale context: “Stargate.” Reuters frames these contracts within OpenAI’s broader buildout targeting ~10 GW of compute capacity with newly announced sites via Oracle and SoftBank—evidence that training demand (and power needs) are still ramping.

🇬🇧 Global footprint & platform expansion. This month, CoreWeave pledged £1.5B to expand sustainable AI data‑center capacity in the UK and launched CoreWeave Ventures to invest in AI ecosystem startups—moves that deepen customer lock‑in and deal flow.

🧩 Vertical integration moves. Recent acquisitions—Weights & Biases (AI dev platform) and OpenPipe (RL/agent training)—tighten the stack from training runs to experiment tracking and reinforcement learning, making CoreWeave’s cloud stickier for labs like OpenAI.

⚠️ Things to watch. (1) Consumption risk: “Up to” language means realized revenue depends on actual GPU hours consumed. (2) Capital & power: multi‑billion‑dollar capex and grid constraints remain gating factors for AI clouds. (3) Ecosystem concentration: OpenAI also builds with Oracle and SoftBank, and Reuters notes intertwined investments (e.g., NVIDIA supply agreements) that could invite scrutiny—and potentially impact margins and capacity planning.
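As a quick check on the headline total, the three 2025 deal sizes can be summed directly. This is a small sketch using the "up to" contract values quoted above; because those are ceilings rather than recognized revenue, the result is a maximum commitment, and the dictionary name is illustrative.

```python
# "Up to" contract values in $B, as quoted above (ceilings, not realized revenue).
deals_b = {"March": 11.9, "May": 4.0, "September": 6.5}

total_b = sum(deals_b.values())
latest_share = deals_b["September"] / total_b

print(f"Cumulative 2025 commitments: ~${total_b:.1f}B")
print(f"September expansion as a share of the total: {latest_share:.0%}")
```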

Why AI/Semiconductor Investors Should Care

CoreWeave’s additional $6.5B with OpenAI signals durable training and inference demand for NVIDIA Blackwell‑class systems and high‑bandwidth networking—supportive for upstream suppliers (NVDA, switch fabrics, liquid cooling) and for CoreWeave’s utilization and pricing power.

The flip side: consumption‑based contracts and power/financing bottlenecks introduce execution risk if model launches slip or if supply loosens and pricing normalizes. Net‑net, this deal fortifies CoreWeave’s backlog while reinforcing the industry narrative that AI compute—and the chips enabling it—remain the scarce asset through 2026.

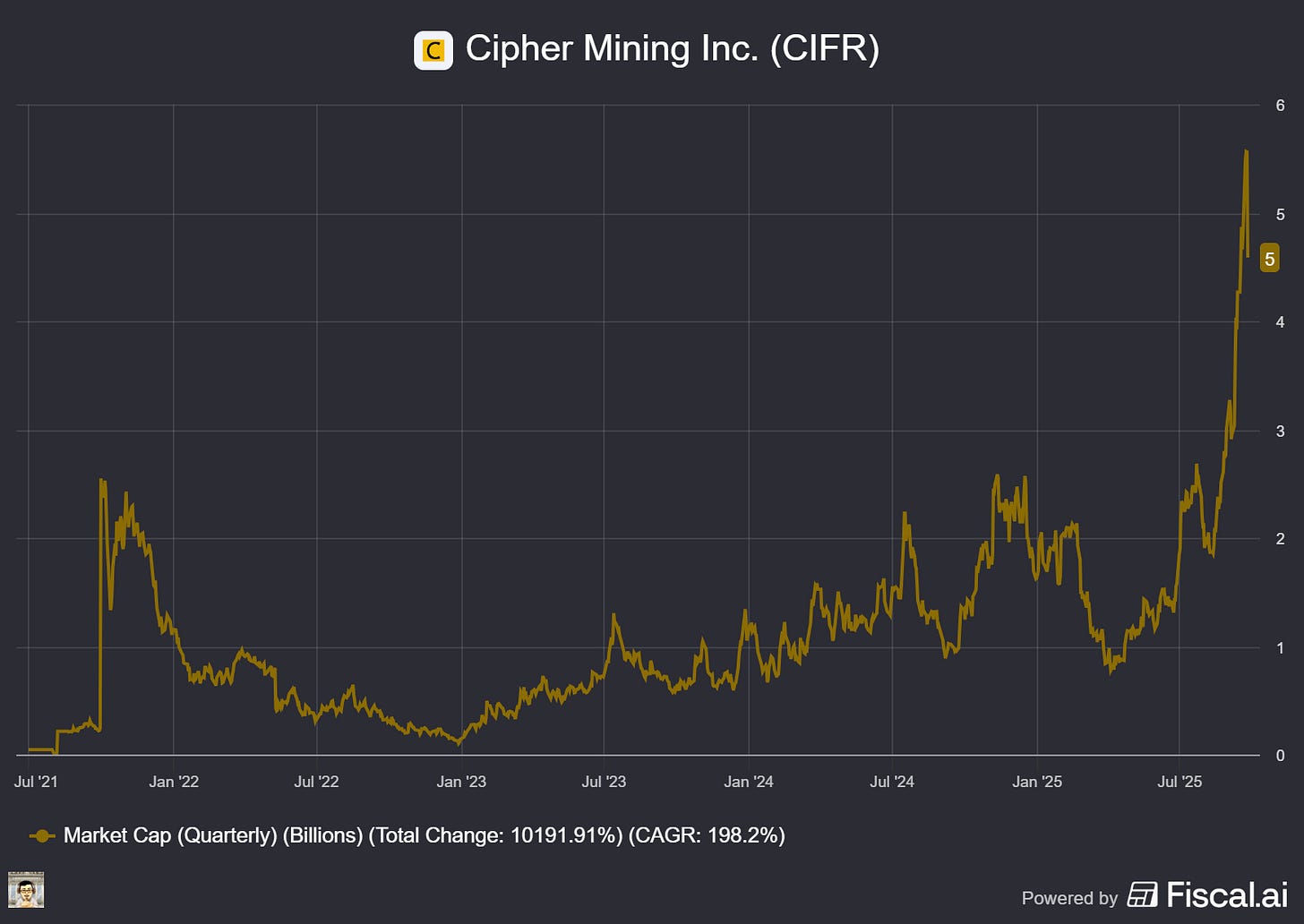

Cipher Mining (NASDAQ: CIFR)

🤝 Cipher Mining: Google‑backed $3B AI hosting deal cements HPC pivot

What The Chip: On September 25, 2025, Cipher signed a 10‑year, 168 MW high‑performance computing (HPC) colocation agreement with Fluidstack, locking in ~$3B of contracted revenue; two 5‑year extensions could lift it to ~$7B. Google will backstop $1.4B of Fluidstack’s lease obligations and receive warrants equal to ~5.4% pro forma equity in Cipher.

Details:

🧠 What’s being built. Cipher will deliver 168 MW of critical IT load (the power dedicated to servers/GPUs) by September 2026 at its Barber Lake site in Colorado City, TX. The campus supports 244 MW gross today, with ~500 MW of expansion potential across 587 acres—sized for “next‑gen compute.”

💰 Pricing signal (back‑of‑the‑envelope). $3B over 10 years across 168 MW implies ~$1.79M per MW per year, or ~$149 per kW‑month on average (before escalators). If both extensions are exercised (to $7B over 20 years), the long‑run average pencils out to ~$174 per kW‑month. These figures are our own math, derived from the disclosed totals.

📈 Profitability guidepost. Cipher targets 80%–85% site NOI margin on a modified gross lease with annual escalators. At the initial term’s implied revenue, that’s ~$240M–$255M of site NOI per year (~$1.43M–$1.52M per MW).

🤝 Google’s role & creditor comfort. Google’s $1.4B backstop improves bankability. Per Cipher’s 8‑K, Google received 24,178,576 penny‑exercise warrants (~5.4% pro forma) and, if Fluidstack defaults, Google may assume the lease or pay a termination fee (with an equity interest in the project company). The warrant terms also include a $430M value top‑up mechanism before the exercise window.

🏦 How it’s financed. On the same day, Cipher priced $1.1B of 0.00% convertible senior notes due 2031 (upsized from $800M). The initial conversion price is $16.03 per share (a 37.5% premium to $11.66), with ~68.6M shares underlying (or ~81.1M if the $200M option is exercised). A capped call offsets dilution up to a cap price of $23.32. Net proceeds are ~$1.08B, primarily for Barber Lake and the 2.4 GW HPC pipeline.

🧩 Comparable pricing check. TeraWulf’s August Fluidstack contracts (Google‑backstopped) implied ~$1.85M per MW per year—very close to Cipher’s ~$1.79M, suggesting early market clearing levels for AI colocation megadeals.

🗣️ Management’s tone. CEO Tyler Page (Cipher) called the deal “transformative” and said it reinforces HPC momentum; César Maklary, Fluidstack co‑founder & president, said Fluidstack “understands what it takes to deliver the compute this moment requires.”
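The back-of-the-envelope pricing and NOI figures in the bullets above can be reproduced with a short script. This is a sketch under stated assumptions: it spreads the disclosed contract totals evenly over the term, ignores the annual escalators, and uses helper and variable names of our own choosing.

```python
# Back-of-the-envelope lease math; every input is an approximate figure from the text above.
CRITICAL_IT_LOAD_MW = 168
KW_PER_MW = 1_000
MONTHS_PER_YEAR = 12


def lease_rates(total_revenue_usd: float, years: int, megawatts: float) -> tuple[float, float]:
    """Return (USD per MW per year, USD per kW per month), ignoring escalators."""
    per_mw_year = total_revenue_usd / years / megawatts
    per_kw_month = per_mw_year / KW_PER_MW / MONTHS_PER_YEAR
    return per_mw_year, per_kw_month


# Initial 10-year term (~$3B) and the fully extended 20-year case (~$7B).
initial_mw_year, initial_kw_month = lease_rates(3e9, 10, CRITICAL_IT_LOAD_MW)
extended_mw_year, extended_kw_month = lease_rates(7e9, 20, CRITICAL_IT_LOAD_MW)

# Site NOI at the 80%-85% target margin, applied to average initial-term revenue.
annual_revenue = 3e9 / 10
noi_low, noi_high = annual_revenue * 0.80, annual_revenue * 0.85

print(f"Initial term: ${initial_mw_year / 1e6:.2f}M per MW-year, ${initial_kw_month:.0f} per kW-month")
print(f"With extensions: ${extended_mw_year / 1e6:.2f}M per MW-year, ${extended_kw_month:.0f} per kW-month")
print(f"Site NOI: ${noi_low / 1e6:.0f}M to ${noi_high / 1e6:.0f}M per year")
print(f"Site NOI per MW: ${noi_low / CRITICAL_IT_LOAD_MW / 1e6:.2f}M to ${noi_high / CRITICAL_IT_LOAD_MW / 1e6:.2f}M")
```

Averaging over the term understates later-year rates and overstates early-year rates when escalators apply, so these are best read as midpoints rather than contract prices.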

Why AI/Semiconductor Investors Should Care

AI training and inference need more than GPUs alone: they need power‑dense, liquid‑cooling‑capable data centers. This deal validates Cipher’s shift from bitcoin mining to contracted, enterprise‑grade HPC revenue, with Google acting as a quasi‑credit enhancer that improves project finance terms and visibility.

The implied lease rate, NOI margin target, and convert proceeds together suggest Barber Lake can self‑fund expansion if execution holds—but investors should weigh construction/power equipment lead times, potential component cost inflation, and dilution from 24.2M Google warrants plus ~68.6M–81.1M convert shares (partly offset by the capped call). Net: this is a de‑risking step for Cipher and another data point on AI colo pricing in a supply‑constrained market.
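For readers who want to sanity-check the dilution inputs, here is a minimal sketch of the convertible-note and warrant arithmetic. It uses only the figures disclosed above, computes gross share counts before any capped-call offset, and assumes the $200M option adds to the $1.1B principal; the constant names are ours.

```python
# Convertible note and warrant arithmetic from the disclosed terms (gross, before capped call).
REFERENCE_PRICE = 11.66         # share price the premium is measured against
CONVERSION_PRICE = 16.03        # initial conversion price per share
CAP_PRICE = 23.32               # capped call cap price
BASE_PRINCIPAL = 1.1e9          # notes priced
OPTION_PRINCIPAL = 0.2e9        # additional notes if the option is exercised (assumed additive)
GOOGLE_WARRANTS = 24_178_576    # penny-exercise warrants per the 8-K

conversion_premium = CONVERSION_PRICE / REFERENCE_PRICE - 1
cap_premium = CAP_PRICE / REFERENCE_PRICE - 1

base_shares = BASE_PRINCIPAL / CONVERSION_PRICE
upsized_shares = (BASE_PRINCIPAL + OPTION_PRINCIPAL) / CONVERSION_PRICE

gross_new_low = GOOGLE_WARRANTS + base_shares
gross_new_high = GOOGLE_WARRANTS + upsized_shares

print(f"Conversion premium: {conversion_premium:.1%}; cap premium: {cap_premium:.0%}")
print(f"Convert shares: {base_shares / 1e6:.1f}M to {upsized_shares / 1e6:.1f}M")
print(f"Gross potential new shares incl. warrants: {gross_new_low / 1e6:.1f}M to {gross_new_high / 1e6:.1f}M")
```

The cap price works out to twice the reference price, which is why the capped call only partly offsets dilution: conversion value above that level is not covered.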

YouTube Channel - Jose Najarro Stocks

X Account - @_Josenajarro

Disclaimer: This article is intended for educational and informational purposes only and should not be construed as investment advice. Always conduct your own research and consult with a qualified financial advisor before making any investment decisions.

The overview above provides key insights every investor should know, but subscribing to the premium tier unlocks deeper analysis to support your Semiconductor, AI, and Software journey. Behind the paywall, you’ll gain access to in-depth breakdowns of earnings reports, keynotes, and investor conferences across semiconductor, AI, and software companies. With multiple deep dives published weekly, it’s the ultimate resource for staying ahead in the market. Support the newsletter and elevate your investing expertise—subscribe today!

[Paid Subscribers] Marvell’s AI Engine: No Hole in 2026, Optics and Switch Surge

Date of Event: September 24, 2025

Executive Summary

*Reminder: We do not discuss valuations; this is only an analysis of the earnings calls and conferences.*

Marvell Technology’s leadership used a special call hosted by JPMorgan to address the biggest investor concern head‑on: whether a revenue gap looms in its lead customer’s custom AI processor (“XPU”) program next year. Chief Executive Matt Murphy was unequivocal: “we do not see a revenue hole next year from custom AI silicon from this customer.” He framed calendar 2026 around an 18% growth baseline for industry data‑center capital expenditure, with Marvell’s custom‑silicon business growing at least in line with that, optics growing faster, and the rest of the data‑center portfolio (switching, storage, and security) growing at a double‑digit clip. The company also highlighted new design activity (now 20+ custom XPU and XPU‑attach wins, up from 18 at June’s AI Day), accelerating optical roadmaps (800G strength and 1.6T already shipping), and a rapidly scaling switch franchise (51.2T in production and 100T under development). Liquidity and capital returns remain robust: a $1 billion accelerated share repurchase (ASR), $300 million already bought back this quarter, and a new $5 billion authorization, supported by roughly $400 million of free cash flow per quarter.

Context matters: by the host’s framing, Marvell’s data‑center revenue has tripled since calendar 2023 and now represents about 75% of total company revenue; within data center, management pegs ~50% optics, ~25% custom, and ~25% switching/storage/security. Murphy emphasized the breadth: optics leadership, a durable and expanding custom pipeline, and an increasingly material switch and scale‑up connectivity position—rather than a single “socket” defining the story.
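To put the mix in company-wide terms, the segment percentages can be multiplied through. This is an illustrative sketch using the approximate shares quoted above; the resulting figures are our derived estimates, not numbers management stated.

```python
# Approximate shares quoted above; derived totals are estimates, not disclosed figures.
DATA_CENTER_SHARE_OF_TOTAL = 0.75
DATA_CENTER_MIX = {
    "optics": 0.50,
    "custom": 0.25,
    "switching/storage/security": 0.25,
}

share_of_total = {
    segment: DATA_CENTER_SHARE_OF_TOTAL * mix for segment, mix in DATA_CENTER_MIX.items()
}

for segment, share in share_of_total.items():
    print(f"{segment}: ~{share:.1%} of total company revenue")
```

On these inputs, optics alone would account for more than a third of total company revenue, which helps explain why management stresses it alongside the custom business.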

Growth Opportunities

Deepening partnerships and a larger funnel. Murphy reiterated the strength of Marvell’s multi‑year cloud partnership announced last December: it spans networking silicon, custom AI silicon, and collaboration on electronic design automation (EDA). The message for 2026: no air pocket in custom revenue, with growth at least tracking the 18% capex baseline. Looking beyond the near term, Marvell disclosed that the custom and attach pipeline has accelerated since June’s AI Day: “The number is not 18 anymore. It’s 20 plus… we’ve now knocked off like 10% of [the] $75 billion funnel” in just a few months. Management positioned most of these wins as layering in from 2028 and beyond, with some potentially arriving sooner depending on customer pull.