NVIDIA's AI Flywheel Roars, CoreWeave’s Gigawatt Gamble, Broadcom’s Billion-Dollar Question

Welcome, AI & Semiconductor Investors,

NVIDIA just unleashed another record-shattering quarter, driven by explosive Blackwell adoption, the GB300 factory ramp, and ambitious plans for its next-gen Rubin platform. But is the industry ready for the gigawatt-scale ambitions laid out by CoreWeave’s CFO, and can Broadcom’s upcoming earnings confirm that AI revenue momentum is sustainable? Let’s Chip In.

What The Chip Happened?

🚀 NVIDIA: Blackwell Roars, GB300 Ramps, Rubin Next

🔥 CoreWeave at DB Tech: “Gigawatts, Not Megawatts” — CFO maps the scaling playbook

⚡️ AI Engines Humming—Will Broadcom Q4 Guide Clear $17B?

[NVIDIA Q2 FY26: Blackwell Ramp, GB300 Ships, $46.7B Revenue]

Read time: 7 minutes

Get 15% OFF FISCAL.AI: ALL CHARTS ARE FROM FISCAL.AI

NVIDIA (NASDAQ: NVDA)

🚀 NVIDIA: Blackwell Roars, GB300 Ramps, Rubin Next

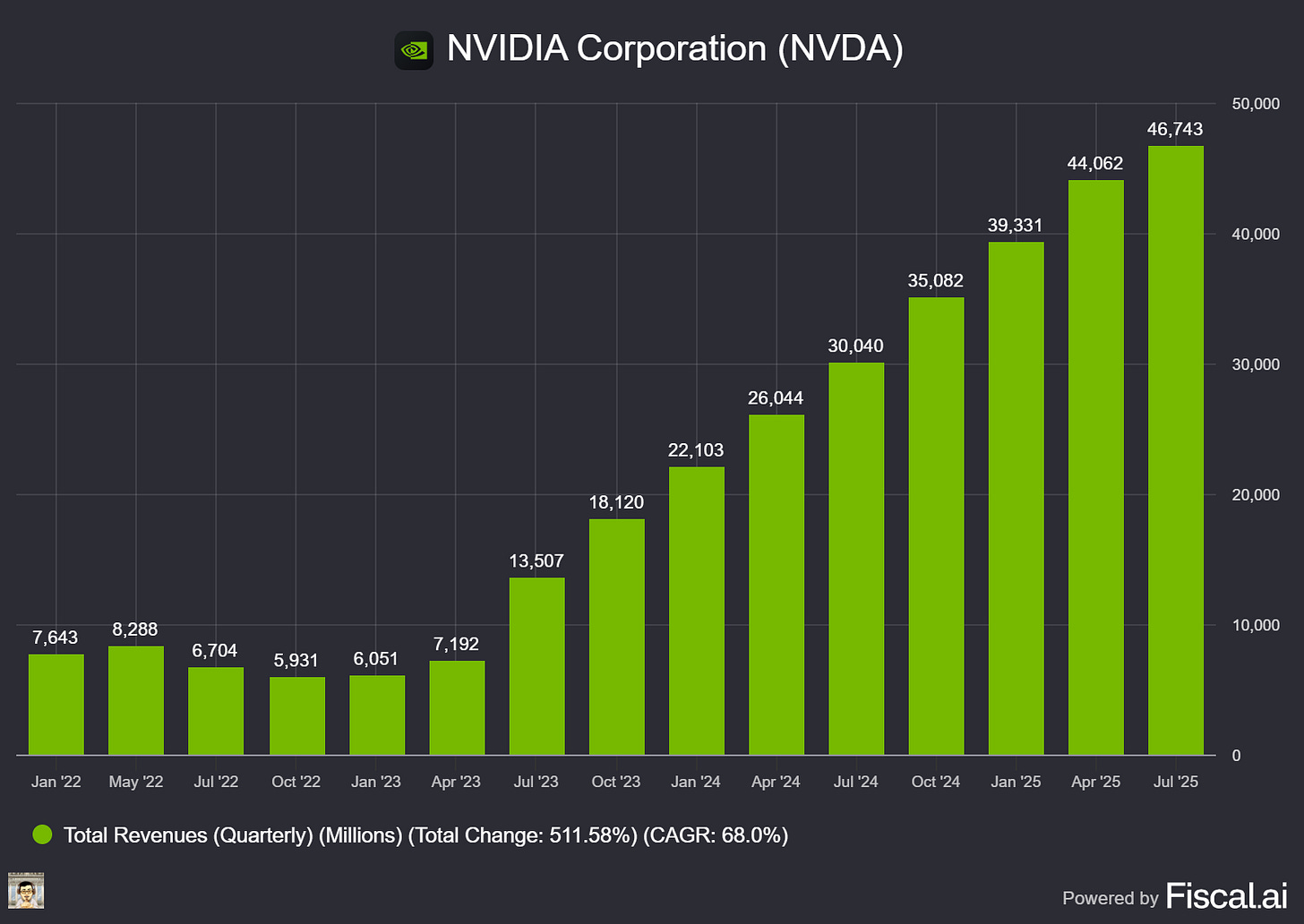

What The Chip: On August 27, 2025, NVIDIA reported record Q2 FY26 revenue of $46.7B and guided Q3 to $54B ±2%, even with H20 shipments to China excluded, as Blackwell, networking, and robotics demand accelerated. Management framed a $3–$4T AI infrastructure buildout through the end of the decade, with GB300 now in production and Rubin already in fab.

Details:

💰 Blowout print, bigger guide. Total revenue hit $46.7B, with Data Center +56% y/y and “sequential growth despite a $4B decline in H20.” Q3 outlook: $54B ±2%; non‑GAAP GM 73.5% ±50 bps; opex (non‑GAAP) ~$4.2B. NVIDIA returned $10B via buybacks/dividends and added a $60B repurchase authorization (on top of $14.7B remaining).

🧠 Blackwell & GB300: ramp at factory pace. Blackwell revenue hit “record levels,” +17% q/q. GB300 production shipments began in Q2, and factory lines were converted in late July and early August to support the ramp; output is now ~1,000 racks/week, with further acceleration expected in Q3. Management’s monetization example: “$3M in GB200 can generate $30M in token revenue.”

🔌 Networking is a second growth engine. Networking revenue hit a record $7.3B (+46% q/q, +98% y/y) across NVLink, InfiniBand (XDR) and Spectrum‑X Ethernet. Spectrum‑X annualized revenue now >$10B; new Spectrum‑XGS aims to tie multiple AI factories into “giga‑scale” systems (CoreWeave is an initial adopter), potentially doubling GPU‑to‑GPU communication speed. NVLink now delivers 14× the bandwidth of PCIe Gen5.

🌏 China, H20, and policy overhangs. The U.S. began reviewing H20 licenses in late July. Some China customers received licenses, but NVIDIA hasn’t shipped under those licenses and excluded H20 from Q3. Management said the U.S. government has “expressed an expectation” to receive 15% of revenue from licensed H20 sales, though no regulation codifies that. NVIDIA sold ~$650M of H20 in Q2 to a non‑China, unrestricted customer. If geopolitics ease, Q3 could see $2–$5B of H20 shipments. China fell to low‑single‑digit % of Data Center revenue; Singapore billed 22% of Q2 (but >99% of that to U.S. customers).

🧩 Rubin taped out and stays on annual cadence. Chips for Rubin—including the Vera CPU, Rubin GPU, CX9 SuperNIC, NVLink‑144 scale‑up switch, Spectrum‑X scale‑out/scale‑across switch, and a silicon‑photonics processor—are in fab at TSMC, with volume production next year.

🤖 Robotics & RTX PRO broaden the story. Jetson Thor (10× perf/eff vs Orin) is shipping; adopters include Agility Robotics, Amazon Robotics, Boston Dynamics, Caterpillar, Figure, Hexagon, Medtronic, Meta. 2M+ developers and 1,000+ partners build on NVIDIA’s robotics stack. RTX PRO servers are in full production with ~90 adopters (e.g., Hitachi, Lilly, Hyundai, Disney) and could be a multi‑billion‑dollar line.

🎮 Other segments up and to the right. Gaming: $4.3B (record; +14% q/q, +49% y/y), fueled by Blackwell GeForce and the RTX 5060 launch; GeForce NOW gets a major upgrade in September (RTX 5080‑class performance, 5K/120fps, library >4,500). Pro Visualization: $601M (+32% y/y). Automotive: $586M (+69% y/y) as Thor SoC and Drive AV move to production.

⚠️ Costs, inventory, and power. Non‑GAAP GM 72.7% included a 40 bps benefit from releasing prior H20 reserves; ex‑benefit 72.3%. Inventory rose to $15B (from $11B) to support Blackwell/Blackwell Ultra. Opex will grow high‑30s% y/y in FY26 as NVIDIA “accelerates investments.” Management repeatedly highlighted power limits as the binding constraint for AI factories—making perf per watt the core KPI.
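
For readers who want to check the math, here is a minimal Python sketch that recomputes the Q3 guide range, the implied sequential growth, the underlying Q2 margin, and the inventory build from the figures quoted above. The q/q growth rate and the margin step-up versus the 73.5% guide are our own derived comparisons, not numbers management provided.

```python
# Arithmetic checks on NVIDIA's Q2 FY26 print and Q3 FY26 outlook.
# Every input is a figure quoted in the bullets above; outputs are derived.
BPS_PER_PCT_POINT = 100  # 1 percentage point = 100 basis points


def guide_range(mid: float, band: float) -> tuple[float, float]:
    """(low, high) implied by a midpoint +/- a fractional band, e.g. 0.02 for ±2%."""
    return mid * (1 - band), mid * (1 + band)


def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return (new / old - 1) * 100


def strip_benefit(margin_pct: float, benefit_bps: float) -> float:
    """Remove a one-time benefit, given in bps, from a margin given in %."""
    return margin_pct - benefit_bps / BPS_PER_PCT_POINT


# Q3 revenue guide: $54B ±2% (excludes H20 shipments to China) vs. Q2's $46.7B.
low, high = guide_range(54.0, 0.02)
qoq = pct_change(54.0, 46.7)

# Non-GAAP gross margin: 72.7% reported, including a 40 bps H20 reserve release.
q2_underlying_gm = strip_benefit(72.7, 40)
# Derived comparison: Q3 non-GAAP GM guide midpoint (73.5%) vs. underlying Q2.
gm_step_up_bps = (73.5 - q2_underlying_gm) * BPS_PER_PCT_POINT

# Inventory build: $11B -> $15B.
inventory_growth = pct_change(15.0, 11.0)

print(f"Q3 guide range: ${low:.2f}B to ${high:.2f}B")
print(f"Implied q/q growth at midpoint: {qoq:.1f}%")
print(f"Underlying Q2 non-GAAP GM: {q2_underlying_gm:.1f}%")
print(f"Q3 GM guide vs. underlying Q2: {gm_step_up_bps:+.0f} bps")
print(f"Inventory growth: {inventory_growth:.1f}%")
```

Running it gives a guide range of $52.92B to $55.08B, roughly 15.6% implied sequential growth at the midpoint, an underlying 72.3% Q2 margin, a ~120 bps step-up to the Q3 guide, and ~36% inventory growth.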

Why AI/Semiconductor Investors Should Care

NVIDIA’s quarter shows three flywheels spinning at once: (1) Blackwell/GB300 compute with NVLink‑72 (a rack acts as one giant GPU), which re‑prices inference economics; (2) networking (NVLink/InfiniBand/Spectrum‑X/XGS), which raises cluster utilization and lifts returns for power‑limited data centers; and (3) full‑stack expansion into robotics, RTX PRO, and sovereign AI (management targets >$20B in sovereign AI revenue this year).

CoreWeave (NASDAQ: CRWV)

🔥 CoreWeave at DB Tech: “Gigawatts, Not Megawatts” — CFO maps the scaling playbook

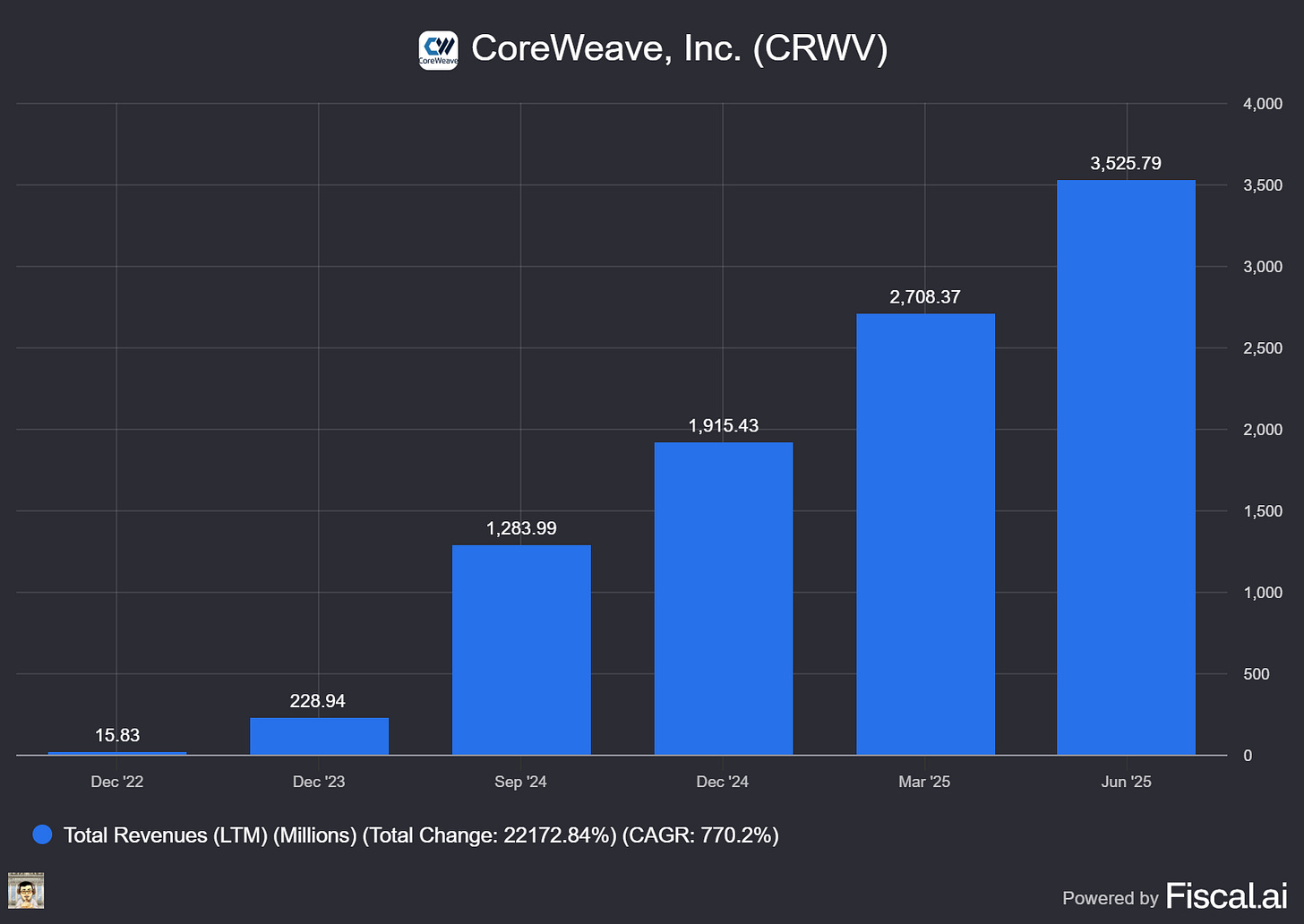

What The Chip: On August 27, 2025, CoreWeave CFO Nitin Agrawal sat down at Deutsche Bank’s 2025 Tech Conference and laid out how the newly public AI cloud is differentiating on tech, contracts, and cost of capital while racing to add power at gigawatt scale. “CoreWeave is the only purpose‑built cloud for AI workloads,” he said, emphasizing first‑to‑market hardware deployments and long‑term, take‑or‑pay contracts that fund growth.

Details:

🏁 Technology leadership & third‑party validation. Agrawal said CoreWeave was “first to deploy” NVIDIA’s Hopper (H100/H200) and first to bring GB200 systems online at scale; in July CoreWeave also announced the first GB300 NVL72 cloud deployment. SemiAnalysis’ new ClusterMAX framework ranked CoreWeave the only “Platinum” AI GPU cloud. Quote: “We’re the first ones to deploy the Blackwell technology, GB200, at scale.”

🧰 Not ‘training vs. inference’—it’s one AI platform. The CFO framed CoreWeave’s stack as fungible across both, citing ramping inference demand from enterprises (IBM, BT Group) and verticals (finance names like Morgan Stanley/Goldman/Jane Street; healthcare via Hippocratic AI; media via Moonvalley). Quote: “We’re not building training or inference infrastructure. We’re building AI infrastructure.”

📜 Revenue visibility: contracts do the heavy lifting. ~98% of Q2 revenue came from committed contracts; contracts are typically 2–5 years, take‑or‑pay with 15–25% prepayments, and CoreWeave still underwrites new business to an ~2.5‑year cash payback on adjusted EBITDA. Quote: “Last quarter, we had 98% of our revenue coming from committed long‑term customer contracts.”

💵 Financing engine keeps getting cheaper. In July, CoreWeave closed a $2.6B delayed‑draw term loan (DDTL 3.0) at SOFR + 400 bps, completing the financing tied to its OpenAI agreement; the CFO added that it was funded entirely by top‑tier banks (no private credit). The company also executed two oversubscribed high‑yield offerings this summer.

⚡ Powering up fast. Q2 ended with ~470 MW active power and 2.2 GW contracted; management reiterated a target of >900 MW active by year‑end as conversations shift from 10–100 MW blocks to “gigawatt‑plus” deployments. Quote: “We’re still in a chronically supply‑constrained environment… today the conversations… are more in the gigawatt‑plus scale.”

🏗️ Verticalization to ease bottlenecks & costs. CoreWeave announced a $9B all‑stock deal to acquire Core Scientific (CORZ), which would add ~1.3 GW of gross power plus 1+ GW of expansion potential, eliminate >$10B of future lease obligations, and deliver ~$500M in run‑rate savings by 2027. Separately, CoreWeave is investing $6B in a new greenfield site in Lancaster, PA and pursuing a Kenilworth, NJ campus (JV) targeting up to 250 MW.

🧮 Unit economics & GPU life: bullish case vs. risk. Agrawal said the team is “incredibly comfortable” with 6‑year GPU depreciation and is already re‑contracting Hoppers for inference as early terms roll off—arguing breadth of models/use cases supports residual value across generations. The counter‑risk: rapid NVIDIA cycles (Blackwell ➜ GB300) compress useful life; peers and analysts debate whether depreciation should be shorter.

🚩 Things to watch (bearish skew). Customer concentration remains high (Customer A = 71% of Q2 revenue), and interest expense hit $267M in Q2 alongside a GAAP net loss of $290.5M as scale ramps. Policy noise adds uncertainty: proposed 100% U.S. chip tariffs and evolving China export terms (the 15% revenue share on licensed H20 sales) could influence hardware costs and demand patterns, even though CoreWeave isn’t a chip vendor.
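
To make the contract and depreciation debate concrete, here is a minimal Python sketch of two pieces of unit-economics arithmetic: cash payback on adjusted EBITDA, and how the assumed GPU useful life moves straight-line depreciation. All dollar inputs are hypothetical (CoreWeave has not disclosed contract-level capex, EBITDA, or contract values), and treating the prepayment as a dollar-for-dollar offset to upfront capex is our simplifying assumption, not necessarily how CoreWeave underwrites.

```python
# Unit-economics sketch for an AI cloud contract.
# HYPOTHETICAL inputs throughout: CoreWeave has not disclosed contract-level
# capex, EBITDA, or contract values, and its own payback method may differ.


def payback_years(capex: float, annual_ebitda: float, prepayment: float = 0.0) -> float:
    """Years of flat annual EBITDA needed to recover capex net of a prepayment.

    Assumes EBITDA is constant each year and that the customer prepayment
    offsets upfront capex dollar-for-dollar (both simplifying assumptions).
    """
    if annual_ebitda <= 0:
        raise ValueError("annual_ebitda must be positive")
    return max(capex - prepayment, 0.0) / annual_ebitda


def annual_depreciation(cost: float, useful_life_years: int, salvage: float = 0.0) -> float:
    """Straight-line depreciation expense per year."""
    if useful_life_years <= 0:
        raise ValueError("useful_life_years must be positive")
    return (cost - salvage) / useful_life_years


CAPEX_M = 1_000.0           # hypothetical cluster build cost, $M
ANNUAL_EBITDA_M = 400.0     # hypothetical adjusted EBITDA per year, $M
CONTRACT_VALUE_M = 2_000.0  # hypothetical total contract value, $M

print(f"No prepayment: {payback_years(CAPEX_M, ANNUAL_EBITDA_M):.2f} years")
for share in (0.15, 0.25):  # the 15-25% prepayment range cited above
    years = payback_years(CAPEX_M, ANNUAL_EBITDA_M, share * CONTRACT_VALUE_M)
    print(f"{share:.0%} prepayment: {years:.2f} years")

# Same hypothetical fleet cost, zero salvage: how useful life moves annual expense.
for life in (6, 5, 4):
    print(f"{life}-year life: ${annual_depreciation(CAPEX_M, life):,.1f}M per year")
```

With these made-up inputs, payback is 2.5 years before any prepayment (matching the ~2.5-year underwriting target only by construction), and cutting the useful life from 6 to 4 years raises annual depreciation from about $166.7M to $250.0M, a 50% increase on the same fleet.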

Why AI/Semiconductor Investors Should Care

CoreWeave’s message at DB was clear: durable capacity + contracts + capital form a flywheel. If management sustains first‑to‑market GPU rollouts and keeps lowering cost of capital (e.g., SOFR+4% DDTL 3.0), the model—high‑visibility, take‑or‑pay revenue against an expanding power footprint—can compound, especially as inference monetization accelerates across enterprises. Offsetting that, investors must weigh leverage/interest burden, customer concentration, and policy/tariff volatility, while stress‑testing useful‑life assumptions amid NVIDIA’s faster cycles. A lot has to go right—but the demand‑supply imbalance and $30.1B backlog suggest real operating leverage if CoreWeave executes.

Broadcom (NASDAQ: AVGO)

⚡️ AI Engines Humming—Will Broadcom Q4 Guide Clear $17B?

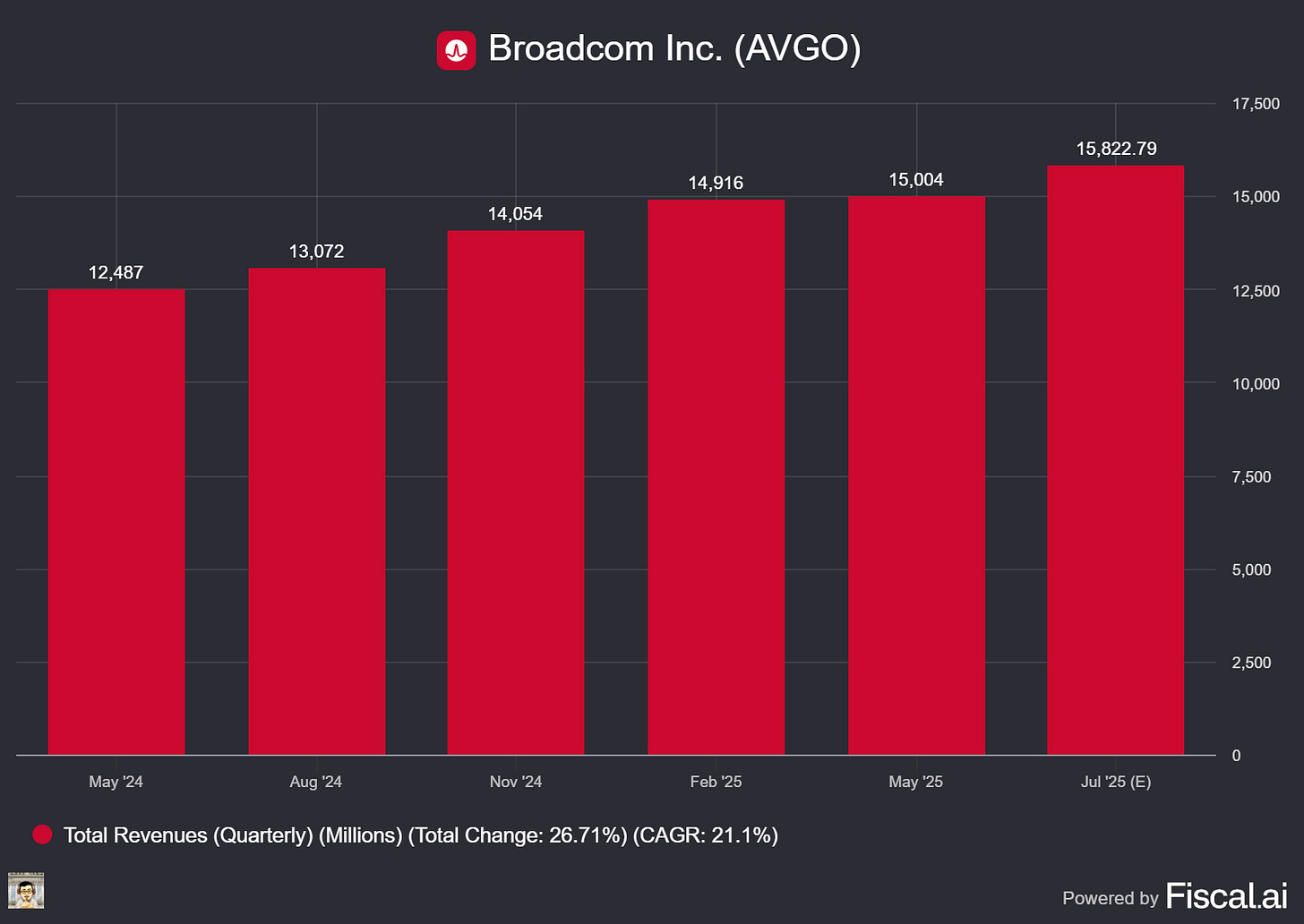

What The Chip: Broadcom reports Q3 FY25 (July‑quarter) after the close on Thursday, September 4, 2025; the call starts 5:00 p.m. ET. Street consensus sits around $15.8B revenue / $1.66 EPS, broadly aligned with management’s prior guide and with little movement in recent weeks.

Details:

📅 Earnings setup. Mgmt guided Q3 revenue ≈ $15.8B and Adjusted EBITDA ≥ 66% of revenue. Consensus matches that, implying ~21% y/y sales growth and ~34% y/y EPS growth. A print meaningfully above $15.8B plus a Q4 guide > ~$17B would screen “beat/raise.”

🤖 AI engine = custom compute + Ethernet. In June, CEO Hock Tan said: “We expect growth in AI semiconductor revenue to accelerate to $5.1 billion in Q3, delivering ten consecutive quarters of growth.” Investors will look for color on customers beyond Google/Meta/ByteDance—and any multi‑year visibility.

🕸️ Networking momentum for AI fabrics. Tomahawk 6 (world’s first 102.4 Tb/s Ethernet switch) is shipping; Tomahawk Ultra (ultra‑low latency) followed in July; and Jericho4 launched in August to stitch clusters across data centers. Translation: Broadcom keeps arming Ethernet‑based AI fabrics as an alternative to InfiniBand.

🧰 Software flywheel (VMware). At VMware Explore (Aug 26), Broadcom made VMware Cloud Foundation 9.0 “AI‑native,” announcing VMware Private AI Services as a standard entitlement in VCF 9.0 (entitlement begins Q1 FY26) and deeper NVIDIA integration to simplify private‑AI deployments.

🤝 Canonical partnership. Broadcom and Canonical expanded their collaboration to optimize VCF + Ubuntu for container and AI workloads—aimed at faster, more secure deployments (including air‑gapped environments).

🧩 Street wants a stronger Q4 guide. Early views cluster near ~$17B; Citi is openly looking for “> $17B.” UBS has also turned more constructive, citing a faster Google TPUv6p ramp that could support Broadcom’s AI custom silicon.

🧾 Cash returns & balance. Last quarter Broadcom generated $6.4B FCF, repurchased $4.2B of stock (25.3M shares), and paid a $0.59 quarterly dividend; a steady capital‑return cadence remains part of the setup.

⚠️ Watch the margin mix and VMware noise. Custom AI chips can carry lower gross margin than software; sustaining EBITDA near guidance while AI mix rises will matter. Meanwhile, Broadcom is shifting the VMware Cloud Service Provider program to invite‑only (effective Nov 1, 2025), and CISPE has appealed the EU’s VMware approval—both are headline risks for partner churn and software growth optics.
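
A quick Python sketch of the arithmetic behind the Broadcom setup: the year-ago baselines implied by consensus growth, the EBITDA floor in dollars, the sequential growth a ~$17B Q4 guide would require over a $15.8B Q3, and last quarter’s average buyback price. Because the inputs are rounded, treat the outputs as approximations, not company-reported figures.

```python
# Back-of-envelope checks on the Broadcom setup, using figures quoted above.
# Growth rates are rounded ("~21%", "~34%"), so back-solved baselines are approximate.
CONSENSUS_REV_B = 15.8   # Q3 FY25 consensus revenue, $B
CONSENSUS_EPS = 1.66     # Q3 FY25 consensus EPS, $
REV_GROWTH = 0.21        # implied y/y revenue growth
EPS_GROWTH = 0.34        # implied y/y EPS growth
EBITDA_FLOOR = 0.66      # guided Adjusted EBITDA >= 66% of revenue
Q4_BAR_B = 17.0          # the ~$17B Q4 guide the Street is watching

implied_prior_rev = CONSENSUS_REV_B / (1 + REV_GROWTH)
implied_prior_eps = CONSENSUS_EPS / (1 + EPS_GROWTH)
ebitda_floor_b = EBITDA_FLOOR * CONSENSUS_REV_B
q4_bar_qoq = (Q4_BAR_B / CONSENSUS_REV_B - 1) * 100

# Last quarter's capital return: $4.2B buyback for 25.3M shares, $6.4B FCF.
avg_buyback_price = 4.2e9 / 25.3e6
buyback_share_of_fcf = 4.2 / 6.4 * 100

print(f"Implied year-ago revenue: ~${implied_prior_rev:.2f}B")
print(f"Implied year-ago EPS: ~${implied_prior_eps:.2f}")
print(f"Adjusted EBITDA floor at $15.8B: ~${ebitda_floor_b:.2f}B")
print(f"Q4 growth needed to clear $17B: ~{q4_bar_qoq:.1f}% q/q")
print(f"Average buyback price: ~${avg_buyback_price:.2f}/share")
print(f"Buybacks as share of FCF: ~{buyback_share_of_fcf:.1f}%")
```

In other words, a $17B Q4 guide asks for roughly 7.6% sequential growth on top of an in-line Q3, and last quarter’s buybacks absorbed about two-thirds of free cash flow before the dividend.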

Why AI/Semiconductor Investors Should Care

If Broadcom beats Q3 and guides Q4 > ~$17B, it signals the AI pipeline (custom ASIC/XPU plus Ethernet networking) is converting into sustained revenue growth into FY26, not just a one‑off spike. The counter‑risk: mix shift from high‑margin software to lower‑margin custom silicon could pressure margins, while VMware channel/regulatory headlines may inject volatility into the software narrative. Net‑net, the AI run‑rate and Q4 guide will likely drive the print’s stock reaction far more than the in‑line $15.8B baseline.

YouTube Channel - Jose Najarro Stocks

X Account - @_Josenajarro

Disclaimer: This article is intended for educational and informational purposes only and should not be construed as investment advice. Always conduct your own research and consult with a qualified financial advisor before making any investment decisions.

The overview above provides key insights every investor should know, but subscribing to the premium tier unlocks deeper analysis to support your Semiconductor, AI, and Software journey. Behind the paywall, you’ll gain access to in-depth breakdowns of earnings reports, keynotes, and investor conferences across semiconductor, AI, and software companies. With multiple deep dives published weekly, it’s the ultimate resource for staying ahead in the market. Support the newsletter and elevate your investing expertise—subscribe today!

[Paid Subscribers] NVIDIA Q2 FY26: Blackwell Ramp, GB300 Ships, $46.7B Revenue

Date of Event: August 27, 2025

Executive Summary

*Reminder: We do not talk about valuations, just an analysis of the earnings/conferences

NVIDIA delivered second‑quarter fiscal 2026 revenue of $46.7 billion, outperforming its outlook as growth continued “across all market platforms,” according to CFO Colette Kress. Data Center remained the engine, up 56% year over year, and still grew sequentially despite a $4 billion decline in H20 sales. Networking set a record at $7.3 billion (up 46% sequentially, 98% year over year). Gross margin reached 72.4% GAAP and 72.7% non‑GAAP, including a 40 bps benefit from releasing previously reserved H20 inventory; excluding that, non‑GAAP gross margin would have been 72.3%. Management emphasized the ramp of its Blackwell platform, the start of GB300 production shipments, and the company’s move to an annual product cadence with Rubin now “in fab.” CEO Jensen Huang framed the runway succinctly: “We see $3 trillion to $4 trillion in AI infrastructure spend by the end of the decade.”

Growth Opportunities

Rack‑scale Blackwell and the GB300 ramp. NVIDIA said GB300 production began in Q2 and that factory builds converted in late July and early August to support the ramp. The company is now producing ~1,000 racks per week, with output “expected to accelerate even further throughout the third quarter.” Management expects “widespread market availability in the second half of the year,” aided by partners such as CoreWeave, which is preparing a GB300 instance and is already seeing ~10x higher inference performance on reasoning models versus H100.