NVIDIA’s Rubin Drama Unfolds, Qualcomm’s Auto Surge Accelerates, CoreWeave’s Margins Hit the Skids

Welcome, AI & Semiconductor Investors,

Are NVIDIA’s Rubin delays fact or fiction? Meanwhile, Qualcomm confidently cruised past its 2026 automotive targets, boasting high visibility and an innovative BMW ADAS collaboration. But it wasn't smooth sailing everywhere. CoreWeave stumbled, losing over 20% after margins collapsed under debt-fueled growth, just days before a crucial IPO lockup ended. The takeaway: AI chip supremacy is heating up, with big gains and bigger risks ahead. — Let’s Chip In

What The Chip Happened?

⚡ Rubin Rumors vs. Reality: NVIDIA Says “On Track”

🚗 Qualcomm: Auto Run-Rate Beats FY26; BMW ADAS Stack Goes Global

📉 Beat on Revenue, Bled on Margins: CoreWeave Sinks ~21% Ahead of Lockup

[CoreWeave’s Q2 2025: $1.2B Revenue, $30.1B Backlog, Supply Still Tight]

Read time: 7 minutes

Get 15% OFF FISCAL.AI — ALL CHARTS ARE FROM FISCAL.AI —

NVIDIA (NASDAQ: NVDA) Advanced Micro Devices (NASDAQ: AMD)

⚡ Rubin Rumors vs. Reality: NVIDIA Says “On Track”

What The Chip: On Aug 14, 2025, a sell‑side note from Fubon Research (analyst Sherman Shang) claimed NVIDIA’s next‑gen Rubin AI platform was redesigned, implying only “limited volumes” in 2026. NVIDIA denied it on the record to Barron’s: “The report is incorrect. Rubin is on track.”

The Situation Explained:

🕵️‍♂️ The rumor: Fubon says an initial Rubin version taped out in late June, and NVIDIA is “now redesigning” to better counter AMD’s coming MI450, risking limited 2026 volumes. Treat this as one analyst’s call, not company guidance.

🧯 NVIDIA’s response: The company told Barron’s via email: “The report is incorrect. Rubin is on track.” That’s the only on‑record statement from NVIDIA today.

🗺️ Roadmap still stands: NVIDIA’s cadence remains Blackwell Ultra (GB300) in 2H 2025 → Rubin (Vera–Rubin) in 2H 2026 → Rubin Ultra in 2H 2027, per March GTC disclosures.

📈 What Rubin targets (public/analyst): ~50 PFLOPS (FP4) per GPU, 288 GB HBM4 with 13 TB/s bandwidth; a VR (Vera–Rubin) NVL144 rack aims at 3.6 exa‑FP4 inference and 1.2 exa‑FP8 training—3.3× GB300 NVL72. Rubin Ultra moves to NVL576 (15 exa‑FP4).

🆚 AMD’s 2026 counter (MI400/“MI450”): AMD’s own materials guide to up to 40 PFLOPS FP4 and 432 GB HBM4 (19.6 TB/s), plus ~300 GB/s scale‑out bandwidth. The “Helios” rack pairs 72 MI400 GPUs for ~2.9 exa‑FP4 and ~1.4 exa‑FP8, with ~31 TB of HBM4 and ~260 TB/s scale‑up (UALink). Target: 2026.

🤝 Who’s advising AMD: Lisa Su (AMD CEO) called OpenAI an “early design partner” for MI450; Sam Altman said he’s “extremely excited for the MI450” and praised its “memory architecture…for inference.” That underscores a memory‑heavy design aimed at large‑context inference.

📦 Near‑term demand context: Whatever happens to Rubin’s exact cutovers, demand for GB200/GB300 remains supply‑constrained. NVIDIA‑backed CoreWeave described demand as “insatiable” for the next several quarters.

⚠️ Investor watch‑outs: If (and only if) Rubin needs a short respin, 2026 shipments could compress into late‑year, nudging some revenue into 2027—but NVIDIA’s on‑record stance is no delay. Separately, 2027 Rubin Ultra systems push 600 kW‑class racks, implying power and capex constraints could be the real gating factor for both vendors.
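To make the rack-level comparison above easier to follow, here is a minimal Python sketch that scales the quoted per-GPU targets up to a rack. It assumes both racks hold 72 GPU packages (reading "NVL144" as 144 dies across 72 packages, which the text does not state), and all inputs are the targets quoted above rather than measured results. The names `SPECS`, `PACKAGES_PER_RACK`, and `rack_totals` are illustrative only.

```python
# Per-GPU targets as quoted in the bullets above (vendor/analyst targets, not measurements).
SPECS = {
    "NVIDIA Rubin": {"fp4_pflops": 50, "hbm_gb": 288},
    "AMD MI400": {"fp4_pflops": 40, "hbm_gb": 432},
}

# Assumption (not stated in the text): both racks hold 72 GPU packages.
# "NVL144" is read here as 144 dies across 72 packages; Helios is quoted at 72 GPUs.
PACKAGES_PER_RACK = 72


def rack_totals(spec: dict, packages: int = PACKAGES_PER_RACK) -> dict:
    """Scale per-GPU targets to rack level (PFLOPS -> exaFLOPS, GB -> TB)."""
    return {
        "fp4_exaflops": spec["fp4_pflops"] * packages / 1000,
        "hbm_tb": spec["hbm_gb"] * packages / 1000,
    }


totals = {name: rack_totals(spec) for name, spec in SPECS.items()}
for name, t in totals.items():
    print(f"{name}: {t['fp4_exaflops']:.2f} exa-FP4, {t['hbm_tb']:.1f} TB HBM4 per rack")

# Per-GPU memory capacity ratio, the core of the "memory-heavy design" argument.
memory_ratio = SPECS["AMD MI400"]["hbm_gb"] / SPECS["NVIDIA Rubin"]["hbm_gb"]
print(f"AMD per-GPU HBM capacity vs. Rubin: {memory_ratio:.2f}x")
```

Under that 72-package assumption, the per-GPU numbers line up with the quoted rack figures (3.6 exa-FP4 for VR NVL144, roughly 2.9 exa-FP4 and about 31 TB of HBM4 for Helios), which suggests the rack claims are simple multiples of the per-GPU targets.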

Why AI/Semiconductor Investors Should Care

Today’s chatter pits one analyst rumor against NVIDIA’s on‑record denial. Base case remains Rubin in 2H 2026 and AMD MI400/“MI450” in 2026. The competitive crux looks like memory capacity/bandwidth (AMD 432 GB HBM4; 19.6 TB/s) versus NVIDIA’s scale‑up fabric and system density (NVLink/NVL)—factors that drive training efficiency, inference latency, and TCO at rack scale. Even if a minor redesign exists, backlog + supply constraints on current GB200/GB300 likely buffer NVDA’s near‑term revenue, while 2026–2027 will showcase whether AMD’s memory‑first rack wins enough share to dent Rubin’s momentum.

QUALCOMM Incorporated (NASDAQ: QCOM)

🚗 Qualcomm: Auto Run-Rate Beats FY26; BMW ADAS Stack Goes Global

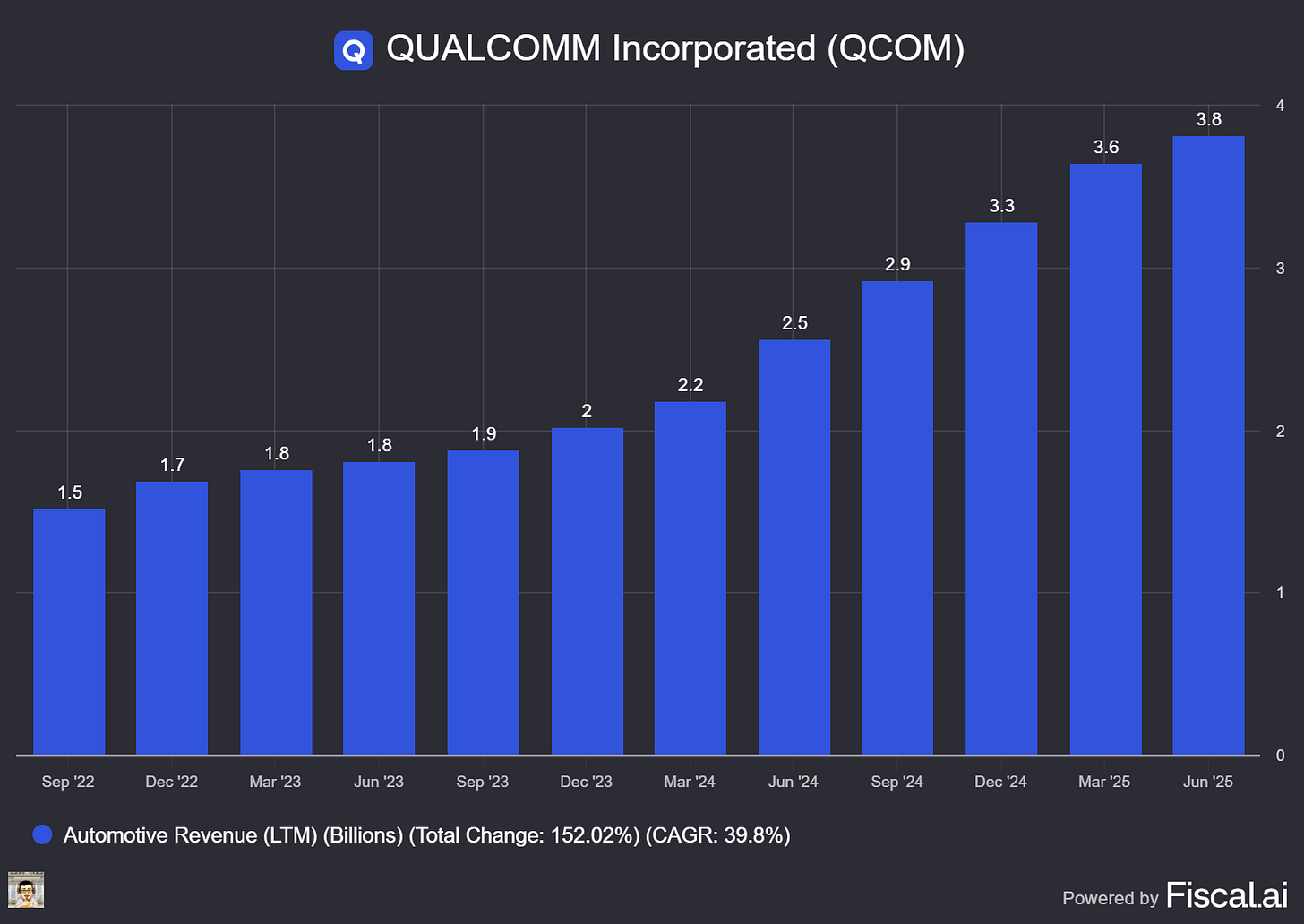

What The Chip: At J.P. Morgan’s Hardware & Semis Access Forum on August 13, 2025, COO/CFO Akash Palkhiwala said Qualcomm’s Auto business is already near its FY26 $4B revenue goal in FY25 and expects to beat it, while reaffirming $8B in FY29 (~20% CAGR) with >80% of the next four years covered by design wins. He highlighted rising digital-cockpit/ADAS content, a jointly built BMW ADAS stack that Qualcomm can resell, on‑device AI across devices, and FY28 as the first revenue year for its data‑center push.
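As a quick sanity check on the "~20% CAGR" figure, the sketch below computes the compound annual growth rate implied by the numbers above. It assumes a base of roughly $4B in FY25 (the text says Auto is "near" that level) and four years of compounding to the $8B FY29 target; the function name `cagr` and the variable names are illustrative.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end / start) ** (1 / years) - 1


# Assumption: FY25 Auto revenue of about $4B, based on "near its FY26 $4B goal in FY25".
fy25_base_bn = 4.0
fy29_target_bn = 8.0

growth = cagr(fy25_base_bn, fy29_target_bn, years=4)
print(f"Implied FY25 -> FY29 CAGR: {growth:.1%}")

# Sensitivity: if the $4B base were instead reached in FY26, only three years remain.
growth_from_fy26 = cagr(fy25_base_bn, fy29_target_bn, years=3)
print(f"Implied FY26 -> FY29 CAGR: {growth_from_fy26:.1%}")
```

Doubling over four years works out to just under 19% a year, consistent with the "~20%" framing. The same doubling over three years would require about 26%, so the quoted rate appears to be measured from FY25.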

Details:

🚘 Auto ahead of plan, high visibility: “We’ll beat ‘26 target,” Palkhiwala said, adding Qualcomm remains on track for $8B Auto revenue in FY29 (~20% CAGR) and that “greater than 80%” of cumulative auto revenue for the next four years is backed by existing design wins. Qualcomm participates in the fast-growing slices of the car—connectivity, digital cockpit, and ADAS—with management estimating that SAM grows ~15% even if auto units stay flat.

📈 Content per vehicle moving up: Connectivity dollars are steady, but digital cockpit content “has increased by a lot” versus two years ago as OEMs race to modernize displays and software. Agentic AI should further lift content: “Being able to have a conversation with your car…is going to be very critical.”

🧠 BMW milestone & stack monetization: The BMW launch matters because Qualcomm and BMW co‑developed the ADAS software stack and Qualcomm can resell the entire stack to other OEMs: “They get to use all the technology for free…we get to use the entire stack for free to everyone else.” Qualcomm counts ~20 OEMs already using Snapdragon Ride silicon for ADAS and sees this as a platform for robotics (sensor fusion, low power, wireless, vision).

🆚 Competing with NVIDIA/Mobileye in ADAS: With Snapdragon Ride Flex, OEMs can run cockpit + ADAS on the same SoC in lower tiers, then scale by adding the same chip (software‑compatible) or step up to a higher‑end ADAS part for premium trims. Palkhiwala: “We have the best SoC in the industry.” A white paper on Qualcomm’s automotive/ADAS position was also released today.

🏭 Data center: CPU + inference, first rev in FY28: Qualcomm will scale its custom Arm CPU (born from the NUVIA acquisition) and bring its low‑power NPU know‑how to inference workloads. It’s engaged with one large hyperscaler, has an MOU with a smaller customer, and will support NVLink interoperability. The Alphawave deal (for high‑speed connectivity IP) is expected to close in 1Q26; management says the strategy doesn’t depend on the acquisition given existing assets and licensing alternatives.

🧪 IoT & Industrial Edge AI: Four engines drive IoT—XR/wearables, PCs, industrial, networking. On wearables, Qualcomm demoed a ~1B‑parameter model running on glasses, positioning “personal agentic AI” devices (e.g., glasses) as a new category not in current forecasts. In factories, cameras and sensors shift from microcontrollers to edge AI over the next decade—a “next 10‑year trend.”

💻 Windows on Snapdragon: Step 1 was to establish performance leadership; “We think we’ve achieved that” for Windows PCs, with next chips coming in September 2025. Qualcomm estimates ~9% share (U.S./W. Europe, $500+ segment it currently targets) and will expand later into lower price bands, but priority remains the dollar SAM.

📱 Handsets, tariffs & margins: September‑quarter guidance calls for mid‑single‑digit YoY growth, driven by Android: richer premium content, mix shift up, and share gains vs. Apple in China. Xiaomi will launch first with Qualcomm’s next premium phone SoC, which ships earlier than last year. Management is not seeing tariff‑driven pull‑forward (recent builds align with XR launches like Ray‑Ban and Oakley). QCT ~30% operating margins look sustainable over the multi‑year view as diversification (Auto/IoT/PC) more than offsets the Apple modem wind‑down; Alphawave and data‑center efforts add OpEx but come with reprioritization.

Why AI/Semiconductor Investors Should Care

Auto is shifting from cyclical volumes to software‑defined content, and Qualcomm sits where dollars grow fastest—cockpit, connectivity, ADAS—with rare revenue visibility (>80% covered by design wins) and a path to $8B in FY29. The BMW stack creates a software monetization layer on top of silicon, while Ride Flex gives OEMs a cost‑efficient ADAS ramp across trims—both strong moats versus rivals. Near‑term drivers (Android premium mix, early PC wins, industrial/edge AI) support margin durability, but investors should watch ADAS stack adoption at scale, execution/timing in data center (first rev FY28), competitive responses from NVIDIA/Mobileye, and any 2025 tariff aftershocks that could distort OEM build plans—even though management says it isn’t seeing that today.

CoreWeave (NASDAQ: CRWV)

📉 Beat on Revenue, Bled on Margins: CRWV Sinks ~21% Ahead of Lockup

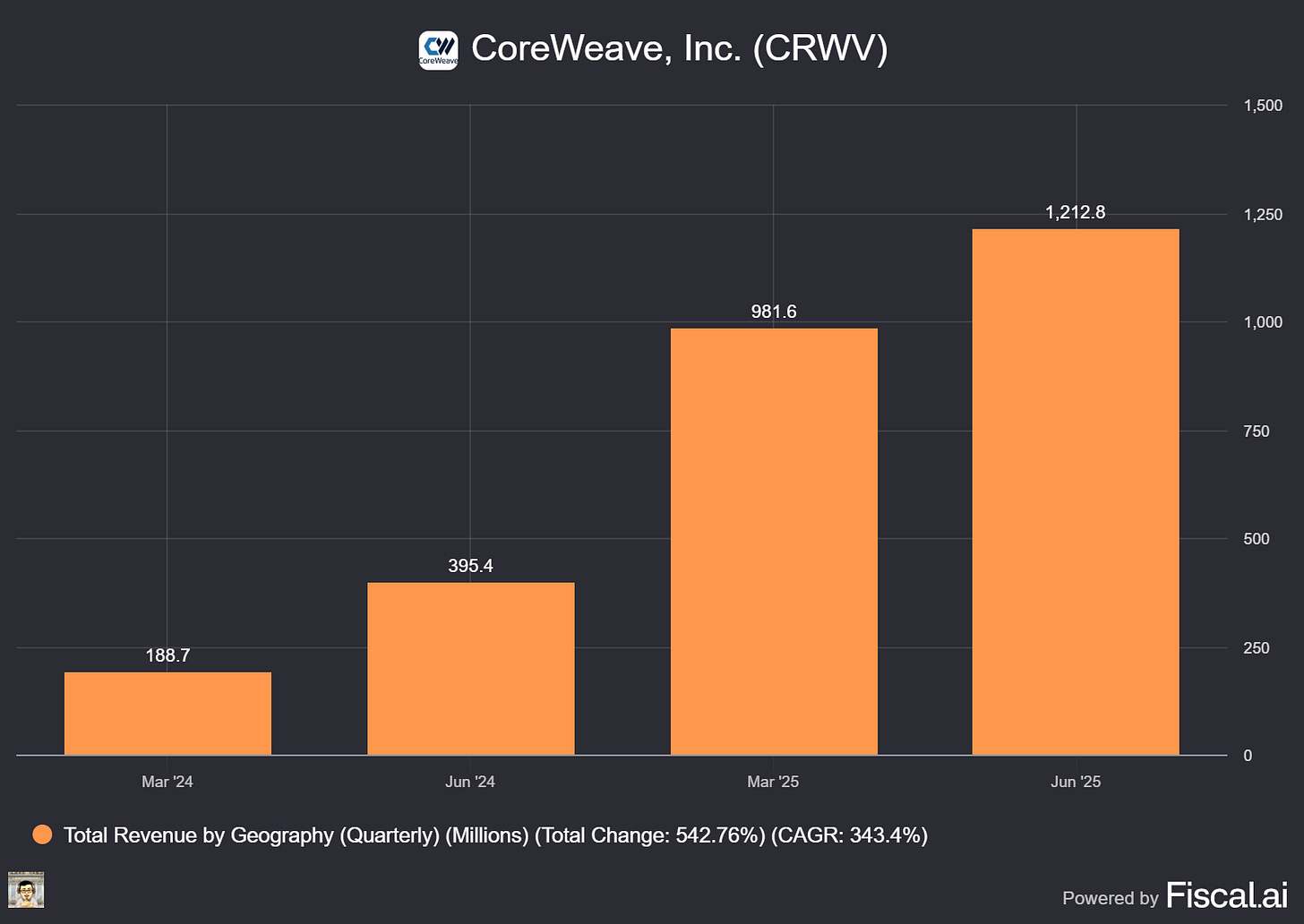

What The Chip: On Aug 12, 2025, CoreWeave reported Q2 revenue of $1.213B (+207% y/y) and raised full‑year revenue guidance, but posted a wider‑than‑expected loss and sharply lower operating margins. On Aug 13, shares fell 20.83% into the lockup expiration window.

Details:

📊 Stock move: Closed at $117.76 (-$30.99, -20.83%) on Aug 13 after opening at $132.98 and trading between $117.60 and $134.50. In the after‑hours session, trades clustered around $116–$119. For context, CRWV hit $187 intraday on Jun 20.

🚀 Topline outperformed: $1.213B vs. $1.082B consensus on accelerating AI demand (fifth straight quarter of triple‑digit growth). Management attributed strength to both training and inference workloads.

🔻 Profitability under pressure: Net loss $290.5M (–$0.60/sh); GAAP operating margin fell to 2% from 20% a year ago as operating expenses surged to $1.19B (from $317.7M). CEO Michael Intrator called out scaling and cost mix as the company races to add capacity.

⚡ Capacity & demand picture: Ending Q2 with ~470 MW active power and 2.2 GW contracted; on track for >900 MW active by year‑end. Intrator said, “inference is the monetization of AI,” and flagged “powered shells” as the choke point for supply. (Intrator is CoreWeave’s co‑founder/CEO.)

💸 Debt & interest bite: Q2 interest expense $267M (vs. $67M last year) reflecting heavy debt funding of build‑outs. A Reuters note echoed investor concerns that profits don’t yet cover obligations; one D.A. Davidson line read: “CoreWeave does not currently generate enough profit to pay all its debt holders, certainly not equity holders.” (D.A. Davidson’s Gil Luria is a sell‑side analyst.)

🔭 Guidance skews back‑half: Q3 revenue $1.26–$1.30B; Adj. op income $160–$190M; Q3 interest expense $350–$390M. FY25 revenue raised to $5.15–$5.35B; CapEx still $20–$23B, heavily Q4‑weighted as power and GPUs come online. (CFO Nitin Agrawal.)

🔒 Lockup overhang: A 139‑day lockup expires Aug 14, and ~83% of Class A shares become eligible to trade—fueling incremental supply fears into a weak tape.

🧩 Strategic moves: The company is integrating Weights & Biases (observability/inference services) and pursuing an all‑stock ~$9B acquisition of Core Scientific to verticalize infrastructure—moves aimed at lowering cost of capital and improving control over capacity. Some Core Scientific holders have signaled opposition.
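The arithmetic behind several of the bullets above can be reproduced in a few lines. This is a rough sketch using only the rounded figures quoted in this section. The year-ago revenue is not stated in the text, so it is backed out of the +207% growth rate as an assumption, and all variable names are illustrative.

```python
# Inputs are the rounded figures quoted in the Details bullets above.
close, change = 117.76, -30.99           # Aug 13 close and dollar change
prev_close = close - change              # implied prior close
pct_move = change / prev_close

revenue_m, opex_m = 1213.0, 1190.0       # Q2 revenue and operating expenses ($M)
gaap_op_margin = (revenue_m - opex_m) / revenue_m

# Assumption: year-ago revenue is derived from the +207% y/y growth rate,
# because the text does not state it directly.
prior_revenue_m = revenue_m / (1 + 2.07)
prior_op_margin = (prior_revenue_m - 317.7) / prior_revenue_m

# Q3 guidance midpoints: adjusted operating income vs. interest expense ($M).
q3_adj_op_income_mid = (160 + 190) / 2
q3_interest_mid = (350 + 390) / 2
coverage = q3_adj_op_income_mid / q3_interest_mid

print(f"Implied prior close: ${prev_close:.2f}, move: {pct_move:.2%}")
print(f"GAAP operating margin: {gaap_op_margin:.1%} (year ago: {prior_op_margin:.1%})")
print(f"Q3 guided adj. operating income / interest expense: {coverage:.2f}x")
```

On these inputs the quoted -20.83% move and the roughly 2% versus 20% operating margins reconcile. At guidance midpoints, adjusted operating income would cover a little under half of interest expense, which is the gap behind the D.A. Davidson remark.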

Why AI/Semiconductor Investors Should Care

The print reinforces a key theme: AI compute demand remains structurally supply‑constrained (powered shells, grid, transformers), and CoreWeave sits at the center of that build‑out. But this is a levered capacity story: equity returns now hinge on management bringing 900+ MW of capacity online by Q4 to convert its $30B+ backlog into revenue, while lowering borrowing costs and defending margins amid surging interest expense and CapEx. Near‑term, lockup supply and margin compression keep volatility elevated; medium‑term, success in verticalization and inference monetization could reset the trajectory.

YouTube Channel - Jose Najarro Stocks

X Account - @_Josenajarro

Disclaimer: This article is intended for educational and informational purposes only and should not be construed as investment advice. Always conduct your own research and consult with a qualified financial advisor before making any investment decisions.

The overview above provides key insights every investor should know, but subscribing to the premium tier unlocks deeper analysis to support your Semiconductor, AI, and Software journey. Behind the paywall, you’ll gain access to in-depth breakdowns of earnings reports, keynotes, and investor conferences across semiconductor, AI, and software companies. With multiple deep dives published weekly, it’s the ultimate resource for staying ahead in the market. Support the newsletter and elevate your investing expertise—subscribe today!

[Paid Subscribers] CoreWeave’s Q2 2025: $1.2B Revenue, $30.1B Backlog, Supply Still Tight

Date of Event: August 12, 2025 (Q2 FY2025 Earnings Call)

Executive Summary

*Reminder: We do not discuss valuations, only analysis of earnings calls and conferences.

CoreWeave reported second‑quarter revenue of $1.2 billion, up 207% year over year, marking its first billion‑dollar quarter. The company delivered $200 million in adjusted operating income (16% margin) and $753 million in adjusted EBITDA (62% margin). Management underscored two themes: intensifying demand for training and inference on its purpose‑built AI cloud, and a persistent industry supply‑demand imbalance centered on power‑ready data center shells.

CEO Michael Intrator framed the quarter as a scale milestone: “This marks the first quarter in which we reached both $1 billion in revenue and $200 million of adjusted operating income.” CoreWeave ended Q2 with ~470 MW of active power and increased total contracted power by ~600 MW to 2.2 GW, guiding to >900 MW active by year‑end. Revenue backlog rose to $30.1 billion, up $4 billion sequentially and nearly doubling year‑to‑date, including a previously announced $4 billion expansion with OpenAI.

CFO Nitin Agrawal highlighted balance‑sheet progress, including $6.4 billion raised across two high‑yield offerings and a delayed‑draw term loan (DDTL). A new $2.6 billion DDTL priced at SOFR + 400 bps—a “900 basis point decrease” versus the non‑investment‑grade portion of the prior facility—completes financing for the $11.9 billion OpenAI contract.

Guidance reflects continued growth with near‑term margin friction from capacity coming online before revenue: Q3 revenue $1.26–$1.30 billion and adjusted operating income $160–$190 million; full‑year revenue raised to $5.15–$5.35 billion, while full‑year adjusted operating income remains $800–$830 million.
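To illustrate the "near‑term margin friction" in the guidance, the following sketch compares the Q2 adjusted operating margin with the margins implied by guidance midpoints. Using midpoints is a simplifying assumption, Q2 revenue is taken as $1,213M (the precise figure behind the "$1.2 billion" cited in the summary), and the helper names `midpoint` and `margin` are illustrative.

```python
def midpoint(low: float, high: float) -> float:
    """Midpoint of a guidance range."""
    return (low + high) / 2


def margin(income_m: float, revenue_m: float) -> float:
    """Income as a fraction of revenue (both in $M)."""
    return income_m / revenue_m


# Q2 actuals ($M). Assumption: $1,213M is the precise revenue behind "$1.2 billion".
q2_adj_margin = margin(200, 1213)

# Guidance ranges from the paragraph above, collapsed to midpoints ($M).
q3_mid_margin = margin(midpoint(160, 190), midpoint(1260, 1300))
fy_mid_margin = margin(midpoint(800, 830), midpoint(5150, 5350))

print(f"Q2 adjusted operating margin:      {q2_adj_margin:.1%}")
print(f"Q3 guided margin (midpoints):      {q3_mid_margin:.1%}")
print(f"FY25 guided margin (midpoints):    {fy_mid_margin:.1%}")
```

At midpoints, the Q3 guide implies a margin a few points below Q2 while the full-year figure sits in between, which fits management's description of capacity costs landing ahead of the revenue they support.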

Growth Opportunities

Inference at scale. CoreWeave continues to design clusters to be fungible across training and inference. Intrator emphasized the shift: “Inference is the monetization of artificial intelligence.” Management is seeing a “massive increase” in inference workloads, including chain‑of‑reasoning use cases that consume steady power and favor CoreWeave’s fleet reliability and scheduling.