TSMC’s AI Engine Accelerates, AWS Stumbles, and Google's Blackwell Power Play

Welcome, AI & Semiconductor Investors,

Did TSMC just solidify its reign over AI chips? With nearly 60% gross margins and record AI-driven revenue, the Taiwanese giant is pulling away from the pack. But while TSMC hums, AWS stumbled, a reminder of why single-region cloud dependency is a ticking time bomb. And don’t overlook Google: its new Blackwell-powered G4 VMs just rewrote the playbook for AI inference and digital twins. Let’s Chip In.

What The Chip Happened?

🚀 TSMC: AI foundry engine hums — Q3 beat, ~60% GM, N2 on deck

☁️ AWS Outage Rocks the Cloud—And Everyone Else’s Boat, Too

⚡️ Google Cloud Powers Up with NVIDIA’s Blackwell GPUs—Say Hello to G4!

[TSMC’s Q3 2025: Record Profits Powered by AI and Advanced Chips]

Read time: 7 minutes

Get 15% OFF FISCAL.AI — ALL CHARTS ARE FROM FISCAL.AI —

TSMC (NYSE: TSM; TWSE: 2330)

🚀 TSMC: AI foundry engine hums — Q3 beat, ~60% GM, N2 on deck

What The Chip: On October 16, 2025, TSMC flexed its silicon muscle, reporting Q3 EPS of NT$17.44 (US$2.92 per ADR) on revenue of US$33.1 billion, up 40.8% year-over-year and 10.1% sequentially in U.S. dollar terms. The AI gold rush keeps driving explosive growth at the foundry giant, with leading-edge fabs booked solid. Management projects Q4 revenue of US$32.2–$33.4 billion with gross margins of 59–61%.

Details:

📈 Headline numbers & profitability. Revenue NT$989.9B ($33.1B); net income NT$452.3B; gross margin 59.5%, operating margin 50.6%, net margin 45.7%; ROE 37.8%. Cash from ops NT$427B, CapEx NT$287B, implying ~NT$140B FCF; cash & marketable securities NT$2.8T (~$90B). CFO Wendell Huang: “Supported by strong demand for our leading‑edge process technologies.”
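
As a quick sanity check on the headline numbers, this short Python sketch recomputes net margin and free cash flow from the NT$ figures above. It assumes the simple definition FCF = cash from operations minus CapEx (an assumption of this sketch, not a metric quoted from TSMC), and `summarize` is a hypothetical helper.

```python
def summarize(revenue, net_income, cash_from_ops, capex):
    """Return (net margin, free cash flow) from headline figures in one currency."""
    net_margin = net_income / revenue
    free_cash_flow = cash_from_ops - capex  # simple FCF definition: CFO minus CapEx
    return net_margin, free_cash_flow

# Q3 2025 figures from the paragraph above, in NT$ billions.
margin, fcf = summarize(989.9, 452.3, 427.0, 287.0)
print(f"Net margin: {margin:.1%}")  # Net margin: 45.7%
print(f"FCF: NT${fcf:.0f}B")        # FCF: NT$140B
```

Both results line up with the 45.7% net margin and ~NT$140B FCF quoted above.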

🧠 Node mix shows AI tilt. N3 = 23%, N5 = 37%, N7 = 14%; nodes ≤7nm = 74% of wafer revenue. Translation: most sales come from the processes used for AI accelerators and top smartphone chips.

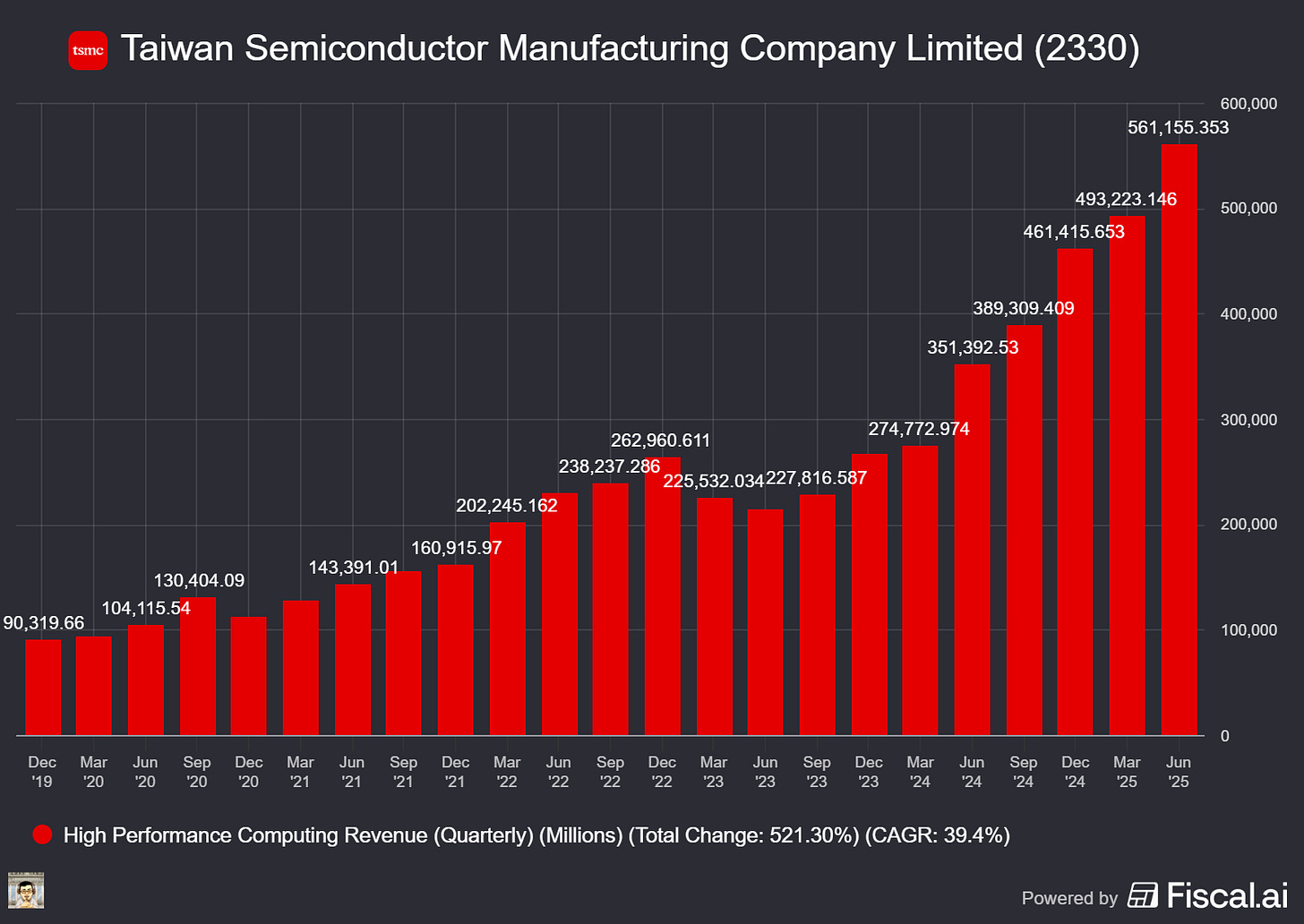

🖥️ Platform mix (Q3). HPC (AI/data center) held 57% of revenue, with HPC revenue flat QoQ; Smartphone revenue rose 19% QoQ to 30% of the total; IoT rose 20% to 5%; Auto rose 18% to 5%; DCE fell 20% to 1%.

🎯 Q4 outlook. Revenue of $32.2–$33.4B (about -1% QoQ and +22% YoY at the midpoint), GM 59–61%, OPM 49–51%. At the midpoint, gross margin would tick up ~50 bps from Q3’s 59.5%, helped by FX; Q3 inventory days (74) and receivable days (25) point to healthy working capital.
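
The guidance math can be reproduced in a few lines. This sketch, assuming the rounded figures above, derives the revenue midpoint, the implied QoQ change, and the gross-margin step-up; because “+22% YoY” is itself rounded, the implied year-ago base is only approximate.

```python
q3_revenue = 33.1                 # US$ billions, reported Q3 2025
guide_low, guide_high = 32.2, 33.4
midpoint = (guide_low + guide_high) / 2         # revenue guidance midpoint
qoq_change = midpoint / q3_revenue - 1          # change vs. Q3 at the midpoint
implied_q4_2024 = midpoint / 1.22               # year-ago base implied by "+22% YoY"
gm_change_bps = ((59 + 61) / 2 - 59.5) * 100    # guided GM midpoint vs. Q3 actual

print(f"Midpoint: ${midpoint:.1f}B, QoQ {qoq_change:+.1%}")
print(f"Implied Q4'24 revenue: ~${implied_q4_2024:.1f}B")
print(f"GM change at midpoint: {gm_change_bps:+.0f} bps")
```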

🔥 AI demand still “insane.” CEO C.C. Wei said AI demand is stronger than three months ago, adding full‑year 2025 revenue should grow “close to mid‑30s%” in USD. On the GPU vs. ASIC mix: “No differentiation in front of TSMC—we support all types.”

📦 Advanced packaging = bottleneck (and opportunity). CoWoS (2.5D/3D packaging that stitches compute dies to HBM memory) remains tight. TSMC plans two advanced‑packaging fabs in Arizona and is partnering with a major OSAT whose Arizona plant is breaking ground sooner. Wei also noted that advanced‑packaging revenue is approaching 10% of the total, a material and growing share.

🧪 Next nodes explained. N2 (gate‑all‑around nanosheets for better speed/power) enters volume later this quarter; N2P adds efficiency in 2H’26; A16 with Super Power Rail (backside power delivery that frees front‑side routing for higher density) targets 2H’26. These nodes matter because they cut energy per computation, a key cost in AI training/inference.

🌍 Costs, FX & policy risk. Overseas‑fab margin dilution was trimmed to ~2% in 2H’25 (vs. a prior 2–3%); over the next several years, expect 2–3% in the early period, widening to 3–4% later. Every 1% USD/TWD move shifts GM by ~40 bps. Management also flagged tariff policy risk for price‑sensitive consumer end‑markets, though smartphone inventories look healthy (no “prebuild” concern).
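
Management’s FX rule of thumb translates directly into a margin scenario. This sketch assumes the sensitivity is linear at 40 bps per 1% move, uses the 60% guidance midpoint as the base, and adopts the sign convention that a stronger US dollar (a positive move) lifts margin because TSMC’s revenue is largely dollar-denominated. `gm_after_fx` is a hypothetical helper, and the 3% move is an illustrative scenario, not a forecast.

```python
GM_BPS_PER_1PCT_FX = 40  # rule of thumb from the call: 1% USD/TWD move ~ 40 bps of GM

def gm_after_fx(base_gm_pct, usd_twd_move_pct):
    """Estimate gross margin (%) after a USD/TWD move, assuming a linear sensitivity.

    Positive usd_twd_move_pct = US dollar strengthens vs. the NT dollar (margin up);
    negative = NT dollar appreciates (margin down).
    """
    return base_gm_pct + usd_twd_move_pct * GM_BPS_PER_1PCT_FX / 100

# Hypothetical scenario: NT dollar appreciates 3% against the 60% GM midpoint.
print(f"{gm_after_fx(60.0, -3.0):.1f}%")
```

In this scenario the FX move alone would take roughly 120 bps off the midpoint, before any change in overseas-fab dilution.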

Why AI/Semiconductor Investors Should Care

TSMC remains the gatekeeper of AI compute: 74% of wafer revenue now comes from ≤7nm, and N2/A16 extend its lead as packaging scales in the U.S. The company narrowed 2025 CapEx guidance to $40–$42B (raising the low end), a tell that multi‑year AI demand stays robust, while preserving ~60% gross margins. Key watch‑items are packaging capacity and tariff/FX swings; if TSMC eases bottlenecks and ramps N2 on schedule, it should continue to capture an outsized share of AI infrastructure economics.

Amazon.com, Inc. (NASDAQ: AMZN)

☁️ AWS Outage Rocks the Cloud—And Everyone Else’s Boat, Too

What The Chip: Early Monday morning, October 20, 2025, AWS hit some stormy weather in its core region, US-EAST-1 (N. Virginia), causing a ripple effect felt across millions of users and thousands of businesses worldwide. The disruption began at 12:11 a.m. PT due to issues with DNS resolution for DynamoDB API endpoints, and while AWS claimed victory over the initial problem by 2:24 a.m. PT, lingering issues meant the internet’s Monday blues lasted much longer.

Details:

🕰 Timeline turbulence: Things went sideways around 12:11 a.m. PT, when AWS customers began seeing error rates spike across numerous services. AWS quickly traced the problem to a DNS resolution glitch affecting DynamoDB endpoints. By 2:24 a.m. PT, the initial DNS snafu was resolved, but full recovery proved trickier, with service hiccups lasting into the afternoon.
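
The two timestamps above bound the initial DNS phase. Using only those times (both Pacific Time on the same day, so no time-zone handling is needed), Python’s `datetime` gives the span; full recovery, as noted, took much longer.

```python
from datetime import datetime

# Times from the timeline above, Monday, October 20, 2025 (Pacific Time).
start = datetime(2025, 10, 20, 0, 11)      # 12:11 a.m. PT: error rates spike
dns_fixed = datetime(2025, 10, 20, 2, 24)  # 2:24 a.m. PT: initial DNS issue resolved

elapsed = dns_fixed - start
hours, remainder = divmod(int(elapsed.total_seconds()), 3600)
print(f"DNS phase lasted {hours}h {remainder // 60}m")  # DNS phase lasted 2h 13m
```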

🛠 Why was the recovery so messy? Turns out, the DNS issue cascaded downstream into deeper AWS infrastructure layers, specifically affecting Lambda invocation, Network Load Balancer (NLB) health checks, and internal networking for EC2 instances. Basically, AWS’s internal plumbing sprang leaks all over the place.
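
A toy dependency graph shows how one failing component reaches everything downstream of it, which is why the blast radius extended well beyond DynamoDB. The edges below are hypothetical and simplified: the source only says the DNS problem spilled into Lambda invocation, NLB health checks, and EC2 internal networking, not how those components are actually wired inside AWS.

```python
from collections import deque

# Hypothetical, simplified map: each key lists the components that depend on it.
# Illustrative only; this is NOT AWS's actual internal architecture.
DEPENDENTS = {
    "DynamoDB DNS": ["DynamoDB API"],
    "DynamoDB API": ["EC2 internal networking", "Lambda invocation"],
    "EC2 internal networking": ["NLB health checks"],
    "NLB health checks": [],
    "Lambda invocation": [],
}

def impacted(root):
    """Return every component reachable from `root`, in breadth-first order."""
    seen, order, queue = {root}, [root], deque([root])
    while queue:
        node = queue.popleft()
        for child in DEPENDENTS.get(node, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order

print(impacted("DynamoDB DNS"))
```

A fault at the root touches every downstream node, while a fault at a leaf such as `Lambda invocation` stays contained.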

🌊 Ripple effects were massive: The list of impacted services reads like the guest list to a tech celebrity party—Snapchat, Roblox, Fortnite, Coinbase, Robinhood, Lyft, Venmo, and even Amazon’s very own Prime Video, Alexa, Ring, and the retail website. The widespread nature of this outage highlights just how interconnected—and fragile—today’s digital world really is.

📈 Just how big? Huge. Estimates ranged widely, from 6.5 million to 11 million user outage reports across 1,000 to 2,500 companies, making it arguably the biggest internet disruption since the infamous CrowdStrike incident of July 2024. If outages had their own Richter scale, this one would register near the top.

🚨 Not a cyberattack (this time): AWS quickly ruled out a malicious breach or cyberattack. This outage was purely an inside job—a technical fault that triggered a domino effect through AWS’s massive service ecosystem.

🌍 The gravitational pull of US-EAST-1: AWS’s oldest and largest data-center cluster, located in Northern Virginia, remains the backbone for countless global operations. It’s the “New York City” of cloud computing: a huge share of services runs through it, and when it sneezes, the whole internet catches a cold.

🏛 Regulators are watching closely: UK financial authorities quickly raised eyebrows and renewed their calls to treat AWS as a “critical third-party provider,” potentially increasing regulatory scrutiny and oversight. In other words, AWS might soon feel the heat of being deemed “too big to fail.”

Why AI/Semiconductor Investors Should Care

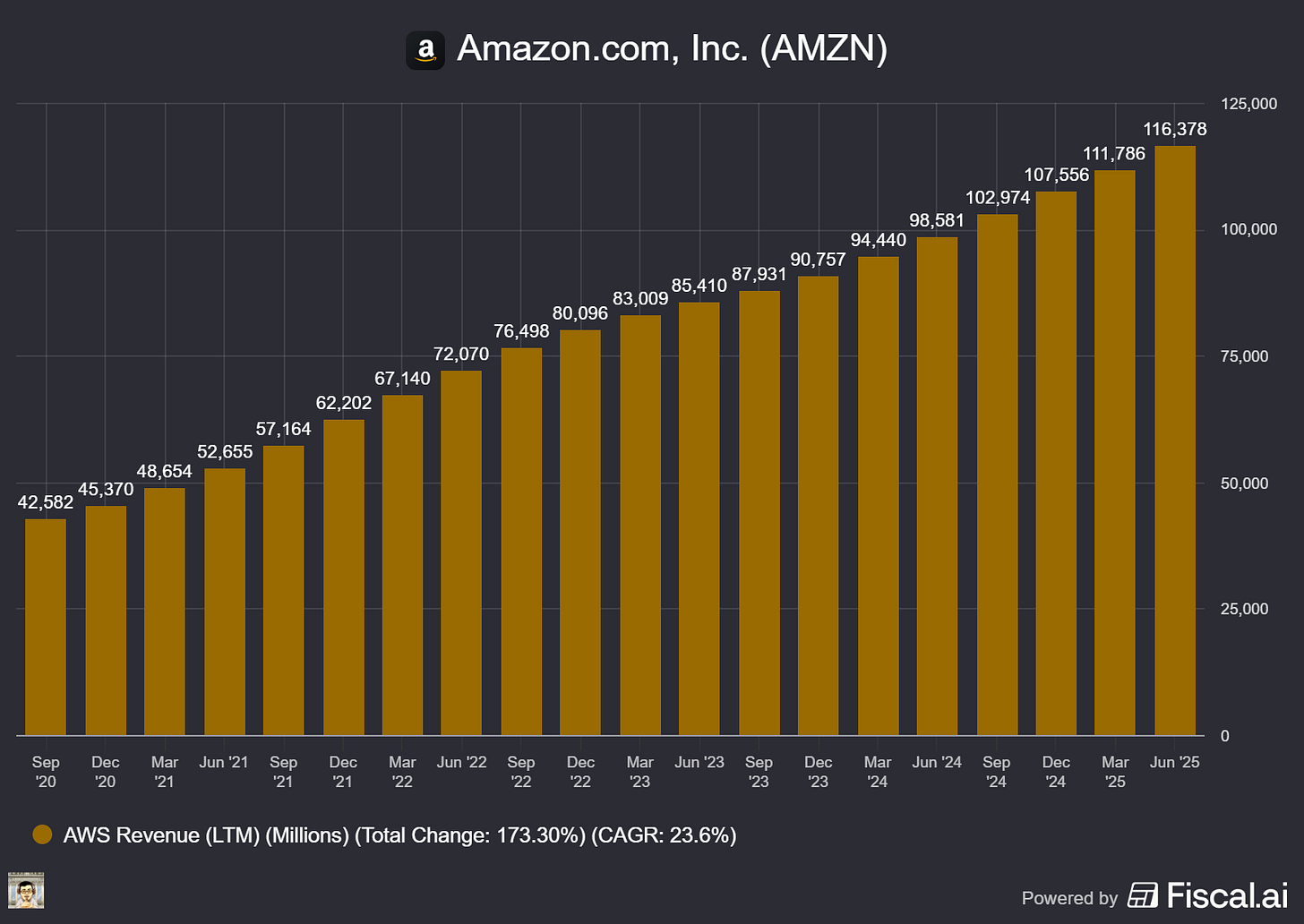

This event is a wake-up call for investors in AI-driven companies heavily reliant on cloud infrastructure, especially those concentrated in a single AWS region or served through managed platforms like Amazon Bedrock (hello, Anthropic and its Claude models). The outage underscores the importance of multi-cloud, multi-region capabilities: companies able to rapidly reroute workloads between providers or regions will command a resilience premium.
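
For readers wondering what rerouting workloads between regions looks like in code, here is a minimal client-side failover sketch. It is an illustration under stated assumptions, not an AWS SDK pattern: `call_with_failover`, `AllRegionsFailed`, and `fake_call` are hypothetical, and real deployments also need data replicated to the fallback region before failover does any good.

```python
import time
from typing import Callable, Sequence

class AllRegionsFailed(Exception):
    """Raised when every configured region has been tried and failed."""

def call_with_failover(
    regions: Sequence[str],
    call: Callable[[str], str],
    attempts_per_region: int = 2,
    backoff_s: float = 0.1,
) -> str:
    """Try `call(region)` in priority order, retrying each region with backoff.

    `call` is a hypothetical stand-in for a real request bound to one region.
    """
    errors = []
    for region in regions:
        for attempt in range(attempts_per_region):
            try:
                return call(region)
            except Exception as exc:  # a production client would catch narrower errors
                errors.append(f"{region}#{attempt + 1}: {exc}")
                time.sleep(backoff_s * (2 ** attempt))  # exponential backoff before next try
    raise AllRegionsFailed("; ".join(errors))

# Hypothetical usage: the primary region fails DNS resolution, the secondary serves.
def fake_call(region: str) -> str:
    if region == "us-east-1":
        raise ConnectionError("DNS resolution failed")
    return f"served from {region}"

print(call_with_failover(["us-east-1", "us-west-2"], fake_call, backoff_s=0.0))
```

Retrying the primary a couple of times before moving on avoids flapping on brief blips, while the ordered region list keeps the fallback policy explicit.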

Alphabet (NASDAQ: GOOGL)

⚡️ Google Cloud Powers Up with NVIDIA’s Blackwell GPUs—Say Hello to G4!

What The Chip: On October 20–21, 2025, Google Cloud officially flipped the switch on its newest virtual machines: G4 VMs, turbocharged by NVIDIA’s RTX PRO 6000 Blackwell GPUs. They are designed to crush high-throughput AI inference, fine-tuning, visual computing, and real-time simulation (think Omniverse and robotics).

Details:

🎯 Blackwell Arrives in the Cloud: Every G4 VM packs NVIDIA’s RTX PRO 6000 Blackwell GPUs, each boasting 96 GB of ultra-fast GDDR7 memory, 5th-gen Tensor Cores (featuring FP4 precision for optimized inference), and 4th-gen RT cores for buttery-smooth ray-traced visuals. Choose your power level from 1, 2, 4, or even 8 GPUs per VM—with fractional GPUs coming soon for cost-savvy customers.
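
To put 96 GB per GPU in context, this sketch estimates how large a model’s weights could fit across a G4 VM’s GPUs at different precisions. It assumes weights are sharded evenly across GPUs (e.g., tensor parallelism), decimal gigabytes, and a hypothetical 80% usable fraction that leaves room for KV cache and activations; everything else is ignored, so treat the results as rough upper bounds. `max_params_billions` is a hypothetical helper.

```python
GDDR7_PER_GPU_GB = 96
BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}

def max_params_billions(num_gpus, precision, usable_fraction=0.8):
    """Rough upper bound on model size (billions of params) whose weights fit in VM GPU memory.

    usable_fraction is a hypothetical allowance for KV cache, activations, and
    runtime overhead; real headroom depends on the workload.
    """
    total_gb = num_gpus * GDDR7_PER_GPU_GB
    return total_gb * usable_fraction / BYTES_PER_PARAM[precision]

for n in (1, 2, 4, 8):  # the GPU counts offered per G4 VM
    print(n, n * GDDR7_PER_GPU_GB, round(max_params_billions(n, "FP16")),
          round(max_params_billions(n, "FP4")))
```

Under these assumptions, a single GPU holds roughly 154B parameters at FP4 versus roughly 38B at FP16, which is why FP4 support matters so much for inference density.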

🚦 Fast Lane for Multi-GPU Performance: Google didn’t just throw GPUs together; it built a software-defined PCIe peer-to-peer fabric that speeds up multi-GPU teamwork. Collective operations like All-Reduce and All-Gather run up to 2.2× faster, and tensor-parallel inference gets up to 168% higher throughput with 41% lower latency.
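
All-Reduce leaves every GPU holding the same summed tensor, and All-Gather is essentially its second half. This sketch simulates the textbook ring algorithm on plain Python lists to show what these collectives compute; it is a generic illustration, not Google’s P2P fabric or NVIDIA’s NCCL implementation, and `ring_all_reduce` is a hypothetical name. In the ring version each rank sends 2(n-1) chunks of size N/n, so per-GPU traffic stays nearly constant as GPUs are added, which is why peer-to-peer link bandwidth matters so much.

```python
def ring_all_reduce(buffers):
    """Sum-all-reduce over simulated ranks arranged in a ring.

    buffers[r] is rank r's vector; its length must be a multiple of the rank count.
    Returns new buffers in which every rank holds the element-wise sum.
    """
    n = len(buffers)
    size = len(buffers[0]) // n
    # Split each rank's vector into n equal chunks.
    chunks = [[list(buf[i * size:(i + 1) * size]) for i in range(n)] for buf in buffers]

    # Phase 1, reduce-scatter: after n-1 steps, rank r owns the full sum of chunk (r+1) % n.
    for step in range(n - 1):
        # Snapshot all sends first so every rank "transmits" simultaneously.
        sends = [(r, (r - step) % n, list(chunks[r][(r - step) % n])) for r in range(n)]
        for r, c, data in sends:
            dst = (r + 1) % n
            chunks[dst][c] = [a + b for a, b in zip(chunks[dst][c], data)]

    # Phase 2, all-gather: pass each fully reduced chunk around the ring.
    for step in range(n - 1):
        sends = [(r, (r + 1 - step) % n, list(chunks[r][(r + 1 - step) % n])) for r in range(n)]
        for r, c, data in sends:
            chunks[(r + 1) % n][c] = data

    # Reassemble each rank's chunks into one flat vector.
    return [[x for chunk in rank for x in chunk] for rank in chunks]

print(ring_all_reduce([[1, 2, 3], [10, 20, 30], [100, 200, 300]]))
```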

🏎️ The Leap from G2 is No Joke: Compared to the previous-gen G2 (L4 GPUs), G4 VMs deliver up to 9× the throughput. Positioned as the sweet spot, G4 fills the performance gap between the budget-friendly G2 series and the heavyweight A-series training monsters.

🧰 Built on Solid Ground (and Fast Disks!): G4 VMs run atop AMD’s latest-and-greatest EPYC Turin (5th Gen) CPUs. One twist—Google replaced traditional Persistent Disks with Hyperdisk for turbocharged I/O performance, and you can pile on Titanium local SSDs up to 12 TB.

🌌 Omniverse & Isaac at Your Fingertips: The cherry on top—NVIDIA’s flagship simulation platforms, Omniverse and Isaac Sim, are now ready for one-click deployment via the Google Cloud Marketplace.

🎬 Graphics and Media, Upgraded: G4 isn’t just for inference—it’s ready to roll as a virtual workstation powerhouse, fully supporting NVIDIA’s RTX virtual workstation (RTX vWS) stack for stunning graphics, real-time rendering, and high-quality media encoding.

💬 Customer Cheers Already Pouring In: Creative giants like WPP call Omniverse on G4 “a true engine for our creative transformation,” while simulation experts at Altair praise G4 for handling their “most demanding fluid dynamics workloads.”

Why AI/Semiconductor Investors Should Care

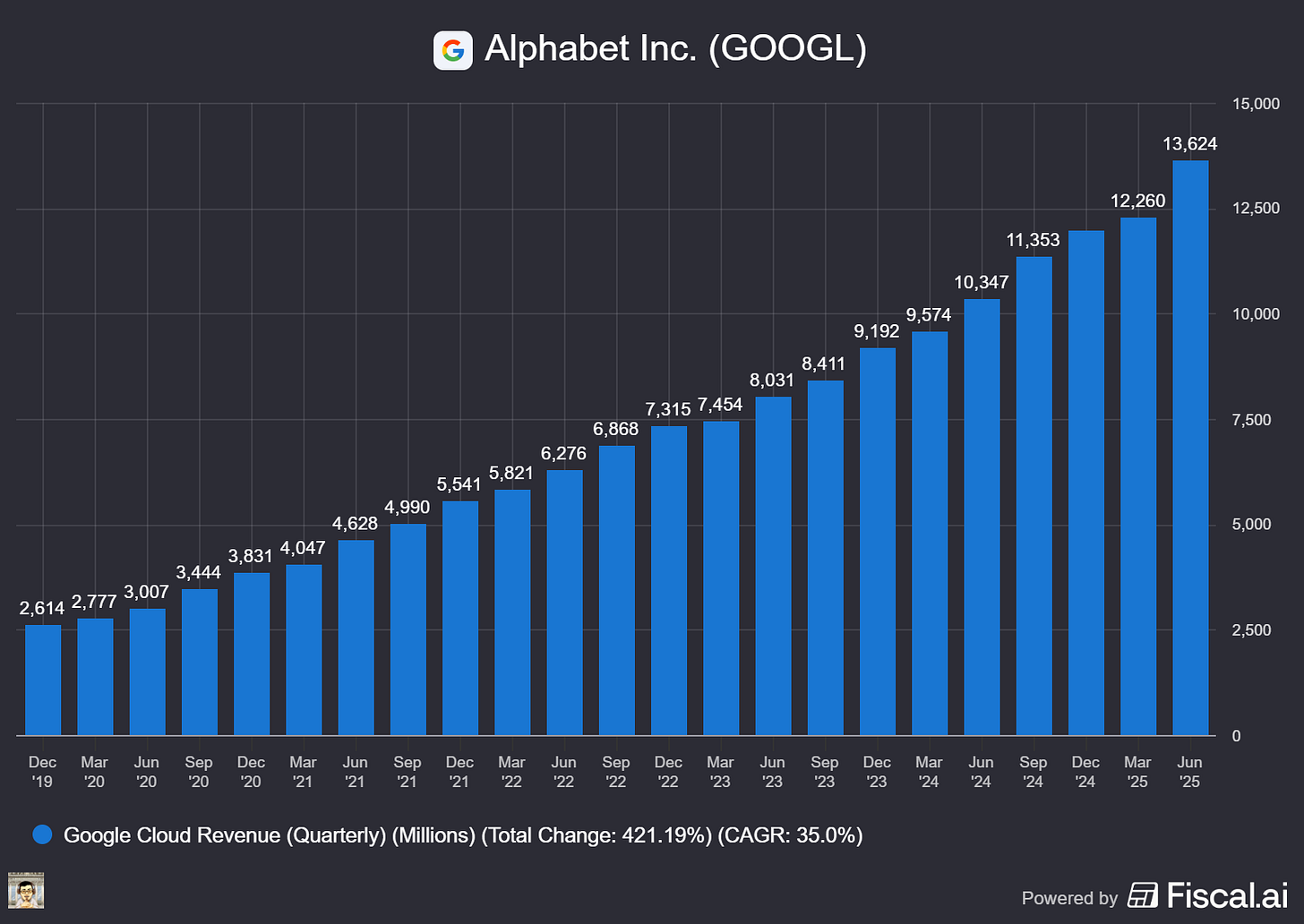

The arrival of G4 VMs signals Google’s serious bet on AI inference and visual computing, precisely where market demand is surging. Google’s unique, built-in P2P GPU fabric boosts real-world performance metrics—throughput and latency—which are critical for enterprise-scale deployments. For NVIDIA investors, this launch expands Blackwell GPUs far beyond traditional workstations, deepening NVIDIA’s cloud footprint and boosting adoption of Omniverse and Isaac ecosystems. Investors should keep eyes on availability, pricing, and potential tariff headwinds—but overall, G4 is perfectly timed to ride the wave of AI’s next big growth spurt.

YouTube Channel - Jose Najarro Stocks

X Account - @_Josenajarro

Disclaimer: This article is intended for educational and informational purposes only and should not be construed as investment advice. Always conduct your own research and consult with a qualified financial advisor before making any investment decisions.

The overview above provides key insights every investor should know, but subscribing to the premium tier unlocks deeper analysis to support your Semiconductor, AI, and Software journey. Behind the paywall, you’ll gain access to in-depth breakdowns of earnings reports, keynotes, and investor conferences across semiconductor, AI, and software companies. With multiple deep dives published weekly, it’s the ultimate resource for staying ahead in the market. Support the newsletter and elevate your investing expertise—subscribe today!

[Paid Subscribers] TSMC’s Q3 2025: Record Profits Powered by AI and Advanced Chips

Event date: October 16, 2025

Executive Summary

*Reminder: We do not discuss valuations; this is purely an analysis of the earnings/conferences.

If you’ve been wondering just how much the world loves AI, TSMC’s latest results might answer that clearly. Taiwan Semiconductor Manufacturing Company (TSMC), the world’s most influential chipmaker, has just reported another blockbuster quarter, thanks to surging demand for cutting-edge chips.

In the third quarter, TSMC posted revenue of NT$989.92 billion (around US$33.10 billion), up nearly 41% from a year earlier in U.S. dollar terms. Net income soared to NT$452.30 billion, lifting earnings per share (EPS) to NT$17.44 (US$2.92 per ADR). In short: business is booming, and the AI wave is carrying TSMC higher.

AI remains the superstar driving TSMC’s growth. CEO Dr. C.C. Wei highlighted that demand for AI-related technology isn’t just strong—it’s accelerating. Companies are racing to deploy new AI capabilities, and TSMC is perfectly positioned to supply the crucial advanced chips.

TSMC isn’t just talking to chipmakers these days; it’s directly engaging with its customers’ customers. Everyone from enterprise tech giants to governments focused on sovereign AI is knocking at TSMC’s door, securing a spot in line for these indispensable semiconductors.